Dark Patterns in Privacy: An Autonomy Problem

Now that dark patterns are expressly prohibited by EU and US laws (see more below), I would like to offer a new perspective on the topic.

The first edition of this newsletter, back in May 2022, was about deceptive design (dark patterns) in privacy. This is one of the topics of my Ph.D. research, and my academic paper on the matter (Dark Patterns in Personal Data Collection: Definition, Taxonomy, and Lawfulness) was cited in reports on dark patterns by the EU and by the Organisation for Economic Co-operation and Development (OECD).

For those who are new, in simple terms, I define dark patterns in privacy as:

"deceptive design practices used by websites and apps to collect more personal data or more sensitive data from a user"

They are everywhere, and you most likely encounter some form of dark pattern in privacy every day when you are online.

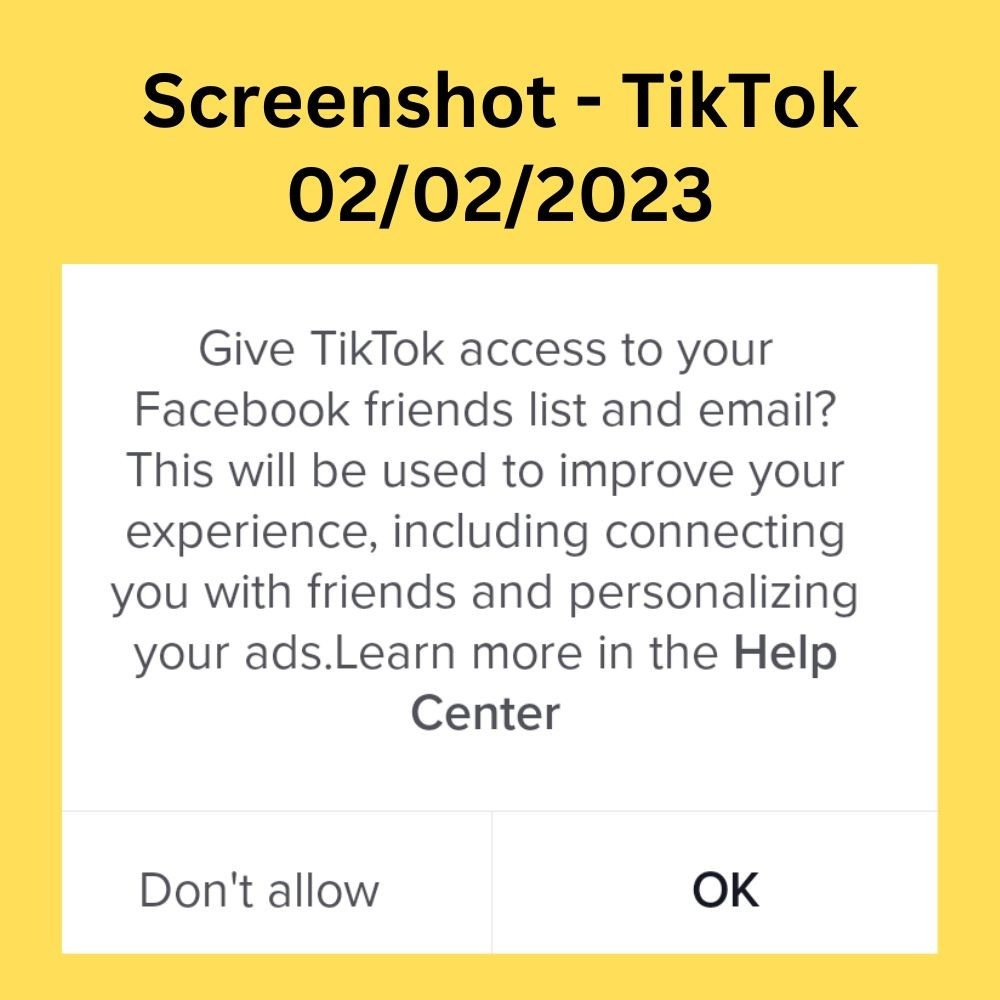

Here is an example of a cluster of dark patterns from my old friend TikTok (read my full TikTok analysis here):

In the same pop-up, there are two calls to action and three different explanations/assumptions:

➔ Calls to action:

It asks me to give access to my Facebook friends list

It asks me to give access to my email

➔ Explanations/assumptions:

It says that doing the above will lead to experience improvement

It says that connecting me with friends (from email and Facebook) is a form of experience improvement

It says that ad personalization is a form of experience improvement

My questions (and why these are dark patterns):

1. What is the connection between giving access to a Facebook friends list and giving access to email? Why ask for these two permissions in the same pop-up if they involve two different contexts and types of data?

2. Why are personalized ads described as an experience improvement? Will every additional access request be described as an experience improvement?

3. Why are the two calls to action under one "OK" button? Shouldn't I have separate choices over my data?

4. Why are two different data processing purposes (experience improvement and personalized ads) under one "OK" button? Shouldn't I have separate choices over my data?

5. Why are the buttons asymmetric? "OK" is in capital letters and bold, while "Don't allow" is not capitalized and not bold. Why not simply use "Allow" and "Don't allow" (neither in bold) to make it clear that permissions are being requested and that I have a choice?

6. Does email access include all my email content or only my email contact list? It's not clear.

7. Bonus: I clicked on "Help Center," and a blank screen appeared on my mobile device. Screenshot and further comments here.

I have written various other articles on the topic, analyzing the cognitive biases behind dark patterns, how transparency can help us move forward, how to design meaningful choices, how to fix privacy settings, and more.

In the last few months, there have been meaningful advances on dark patterns. Various academic papers and reports have been published; the EU's Digital Services Act (DSA) and, in the US, the California Consumer Privacy Act (CCPA, as amended by the California Privacy Rights Act) are among the laws with global influence that now expressly ban dark patterns; and regulators have imposed large penalties, such as the $520 million Epic Games settlement.

Despite these (welcome) advances, the issue of dark patterns runs deeper, and it is worth looking at it from a broader perspective: the impairment of autonomy.

Dark patterns are a way to bypass user autonomy through design. An organization creates an interface or feature that relies on cognitive biases to make the user do what the organization wants. This prevents the user from fully understanding what is going on and from expressing a conscious choice.

In the realm of data, autonomy can be bypassed in various ways, not only through design.

When an organization creates a biased machine learning system (e.g., one trained on biased data), it also impacts user autonomy by causing harm, amplifying stigma, and restricting the opportunities of those affected by the bias or discrimination. So algorithmic bias can also be seen as a way to bypass autonomy through code.

When a company launches a privacy-invasive device or software, e.g., one that collects or processes data in unexpected ways or that surveils passersby or third parties without their noticing, it bypasses autonomy through architecture.

So our autonomy, one of the most central aspects of being human, is under threat, and I do not see this perspective being sufficiently addressed. This is one of the topics I research in my Ph.D., and I hope to offer a better regulatory framework. Stay tuned.

💡 If you would like to learn more about this topic, join our next live course in April, Privacy-Enhancing Design: The Anti-Dark Patterns Framework (4 weeks, 1 live session per week + additional material). Check out the program and register now using the coupon TPW-10-OFF to get 10% off. To learn more about our courses, or to organize a private cohort for your organization, visit: implementprivacy.com/courses

-

📅 Upcoming privacy events

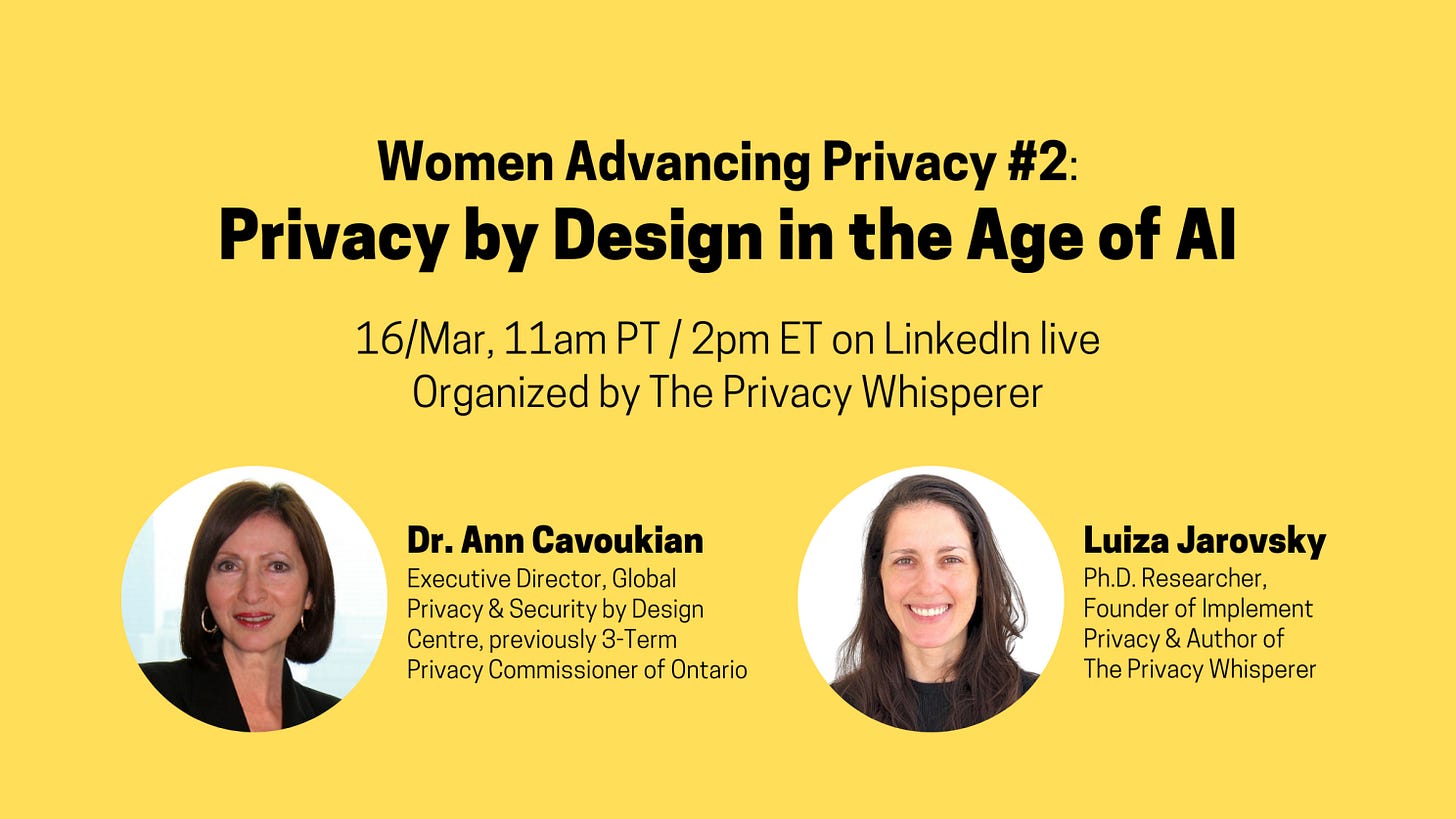

On 16 March, in the second edition of 'Women Advancing Privacy', I will discuss the following topics with Dr. Ann Cavoukian, the inventor of Privacy by Design:

The origins of Privacy by Design

How it is essential for businesses, especially today

Her new Privacy by Design ISO certification

How we should think of Privacy by Design in the Age of AI

This will be a fascinating conversation, and I would like to invite everyone to join on LinkedIn Live. To watch our previous events, check out my YouTube channel.

-

📌 Privacy & data protection careers

We have gathered relevant links from large job search platforms, along with additional information on privacy jobs, on our Privacy Careers page. We suggest you bookmark it and check it periodically for new openings. Wishing you the best of luck!

-

✅ Before you go:

Did you enjoy this article? Share it with your network so they can subscribe to this newsletter.

For more privacy-related content, check out our podcast and my Twitter, LinkedIn & YouTube accounts.

At Implement Privacy, I offer specialized privacy courses to help you advance your career. I invite you to check them out and get in touch if you have any questions.

See you next week. All the best, Luiza Jarovsky