🔨 AI is already disrupting the job market

The latest developments in AI policy & regulation | Edition #109

👋 Hi, Luiza Jarovsky here. Welcome to the 109th edition of this newsletter on AI policy & regulation, read by 27,000+ subscribers in 140+ countries. I hope you enjoy reading it as much as I enjoy writing it.

🌴 Summer AI training: Registration is open for the July cohorts of our Bootcamps on the EU AI Act and on Emerging Challenges in AI, Tech & Privacy. More than 770 people have attended our training programs, which are live, remote, and led by me. Don't miss them! Students and independent professionals receive 20% off.

👉 A special thanks to Usercentrics for sponsoring this week's free edition of the newsletter. Read their article:

Are AI technologies outpacing regulation? Will current data privacy laws be enough to protect privacy, or is everything doomed to become "training data"? Usercentrics unpacks the US Executive Order on safe AI and the Global Privacy Assembly's resolution on generative AI, published by the European Data Protection Supervisor (EDPS), along with the impact of these initiatives on data privacy. Read their article here.

🔨 AI is already disrupting the job market

Many people are still in denial, but the data shows that AI is already disrupting the job market. Below are quotes from professionals, real-world data, my short video on the topic, and seven issues to consider as you plan ahead:

➡️ Last week, The Wall Street Journal published the article "AI Doesn't Kill Jobs? Tell That to Freelancers: There's now data to back up what freelancers have been saying for months." Here are some quotes illustrating what professionals have been experiencing:

"Not long after OpenAI’s ChatGPT made its debut, financial advisers who had depended on her 30 years of experience writing about wealth management stopped calling. New clients failed to replace them. Her income dried up almost completely."

"You can talk to any artist at this point, and they have a story about how they were given AI reference material to work from, or lost a job."

"In addition to fewer projects, studios and production companies are cutting the amount of time for which they typically hire artists. What was once a three-to-six-month project is now perhaps a few weeks, and often pays rates far below what is typical, says Southen. He was recently offered a job that included a lot of AI-generated art in its pitch deck already, and the producers offered him half his usual rate to create more."

➡️ The paper "Who is AI Replacing? The Impact of Generative AI on Online Freelancing Platforms" by Ozge Demirci, Jonas Hannane & Xinrong Zhu offers numbers to illustrate the trend:

"(...) Using data from a global freelancer platform, we quantify a 21% greater decline in demand for automation-prone jobs compared to manual-intensive jobs post-ChatGPT introduction, along with a 17% more pronounced decrease in demand for graphic design jobs following the release of Image AI technologies. Writing is the most affected job category by ChatGPT, followed by software, app and web development, and engineering."

"Our findings suggest that freelancers with specific skills may face more competition after the introduction of GenAI tools. Given the already intense competition for opportunities on online labor markets (Beerepoot and Lambregts 2015), the increased substitutability between freelancer jobs and GenAI could further drive down earnings in the short term. However, the long-term impact of GenAI on labor markets and businesses remains unclear. Although widespread adoption of GenAI as human labor replacement may worsen the welfare of workers, it could also improve productivity and potentially improve earnings (Brynjolfsson et al. 2023, Peng et al. 2023, Noy and Zhang 2023). (...)"

➡️ As I mentioned in my latest 4-minute video, regardless of AI's current weaknesses (and how far we are from "AGI"), we must focus on concrete evidence of how AI is affecting the job market and people's livelihoods, such as the data above.

➡️ The pace of AI development continues to accelerate, and capabilities and use cases are expanding into new fields. I'd say it's realistic to expect a growing impact on the job market in the months and years to come.

➡️ At the AI, Tech & Privacy Academy, when talking privately with our students about career growth, I often advise them to have an AI-related plan. What type of plan? Here are seven issues to consider:

1. Learn about AI in general;

2. Understand how people in your field are using AI;

3. Understand how AI is already impacting or might soon impact your job, given current capabilities;

4. Look for support from workers' organizations and other people in your field to understand what is being done to support your profession;

5. Think about ways you could work with AI to increase your competitive advantage, given your skill set and your career;

6. Think about ways you could adapt how you use your skills in case AI disrupts the job market in your field;

7. Think about other jobs or skill sets you could explore in case it becomes difficult to earn a living in your profession.

➡️ In my view, the earlier people realize it's already happening, the better. In most fields, there are AI-related opportunities and ways to redirect one's career in light of the impact AI is already having, including small "pivots" that can help people maintain their dignity and livelihoods.

➡️ On a positive note, while AI is disrupting many existing jobs, new AI-related careers are also emerging and growing. What I've been observing firsthand in AI governance, regulation, and compliance (through the participants in our training programs) is that, as with any technological disruption, first movers who realize what is going on and adapt accordingly are particularly successful.

📀 Various record labels sue AI music program Suno

Major record labels, including Universal Music Group, Sony Music Entertainment, and Warner Music Group, are suing the AI music company Suno AI for copyright infringement.

➡️ Important quotes:

"Given that the foundation of its business has been to exploit copyrighted sound recordings without permission, Suno has been deliberately evasive about what exactly it has copied. This is unsurprising. After all, to answer that question honestly would be to admit willful copyright infringement on an almost unimaginable scale. Suno’s executives instead speak publicly in exceedingly general terms. For example, one of Suno’s co-founders posted online that Suno’s service trains on a “mix of proprietary and public data,” while another co-founder stated that Suno’s training practices are “fairly in line with what other people are doing.” Piercing the veil of secrecy, an early investor admitted that “if [Suno] had deals with labels when this company got started, I probably wouldn’t have invested in it. I think that they needed to make this product without the constraints." (page 3)

"Plaintiffs could have proceeded with this action based solely on eliciting that reasonable inference of copying. Nevertheless, Plaintiffs’ claims are based on much more. In particular, Plaintiffs tested Suno’s product and generated outputs using a series of prompts that pinpoint a particular sound recording by referencing specific subject matter, genre, artist, instruments, vocal style, and the like. Suno’s service repeatedly generated outputs that closely matched the targeted copyrighted sound recording, which means that Suno copied those copyrighted sound recordings to include in its training data. In addition, the public has observed (and Plaintiffs have confirmed) that even less targeted prompts can cause Suno’s product to generate outputs that resemble specific recording artists and specific copyrighted recordings. Such outputs are clear evidence that Suno trained its model on Plaintiffs’ copyrighted sound recordings." (page 5)

"At its core, this case is about ensuring that copyright continues to incentivize human invention and imagination, as it has for centuries. Achieving this end does not require stunting technological innovation, but it does require that Suno adhere to copyright law and respect the creators whose works allow it to function in the first place. Plaintiffs bring this action seeking an injunction and damages commensurate with the scope of Suno's massive and ongoing infringement." (page 6)

➡️ Read the lawsuit here.

💻 On-demand course: Limited-Risk AI Systems

➡️ Check out our June on-demand course: Limited-Risk AI Systems. I discuss the EU AI Act's category of limited-risk AI systems, as established in Article 50, including examples and my insights on potential weaknesses. In addition to the video lesson, you receive supplementary material, a quiz, and a certificate.

➡️ Paid subscribers of this newsletter get free access to our monthly on-demand courses. If you are a paid subscriber, request your code here. Free subscribers can upgrade to paid here.

➡️ For a comprehensive training program on the EU AI Act, register for the July cohort of our EU AI Act Bootcamp.

🦋 A social network "where humans and AI coexist"?

A former Snap engineer has just launched Butterflies, a social network "where humans and AI coexist." In my opinion, it might be legally challenged; here's why:

➡️ First, as I've discussed multiple times in this newsletter, I'm uncomfortable with business models centered on AI anthropomorphism (read my articles about Replika and AI characters). There is a fine line between talking with an anthropomorphized chatbot and being manipulated by it. Why?

➡️ Seen through a data protection lens, these anthropomorphized chatbots are basically massive data collection machines that process highly sensitive personal data under the guise of an "AI friend." The marketing and the "fun" hide this raw reality and pretend that there is a real friend on the other side. This is deceptive, especially given that children, teenagers, and vulnerable people will be interacting with them.

➡️ The Federal Trade Commission seems to take the same view. In its article "The Luring Test: AI and the engineering of consumer trust," it wrote:

"People should know if an AI product’s response is steering them to a particular website, service provider, or product because of a commercial relationship. And, certainly, people should know if they’re communicating with a real person or a machine."

➡️ It's unclear to me how a business model built on maximum anthropomorphism and on mixing people and AI can comply with the FTC's transparency expectations. In my opinion, it's not compliant.

➡️ Also, Article 50 of the EU AI Act establishes:

"1. Providers shall ensure that AI systems intended to interact directly with natural persons are designed and developed in such a way that the natural persons concerned are informed that they are interacting with an AI system, unless this is obvious from the point of view of a natural person who is reasonably well-informed, observant and circumspect, taking into account the circumstances and the context of use. (...)"

➡️ In a social network whose goal is to blur the line between AI and people, it's unclear to me how they will comply with this transparency obligation.

➡️ Last year, I discussed this topic with Prof. Ryan Calo in this podcast episode; check it out if you are looking for an in-depth discussion. Calo and Daniella DiPaola have also written a paper on the topic, "Socio-Digital Vulnerability," which is worth reading as well.

➡️ My personal take is that humans are extremely vulnerable in anthropomorphized interactions with machines. Given recent tragic cases involving "AI companions," I would go as far as to say that our brains are not biologically ready for this type of hyper-anthropomorphized and hyper-personalized human-machine interaction, which can quickly become extremely manipulative and harmful.

➡️ As the "Age of AI" advances and new AI-powered products are launched, we must make sure that human well-being, autonomy, agency, and dignity are at the center.

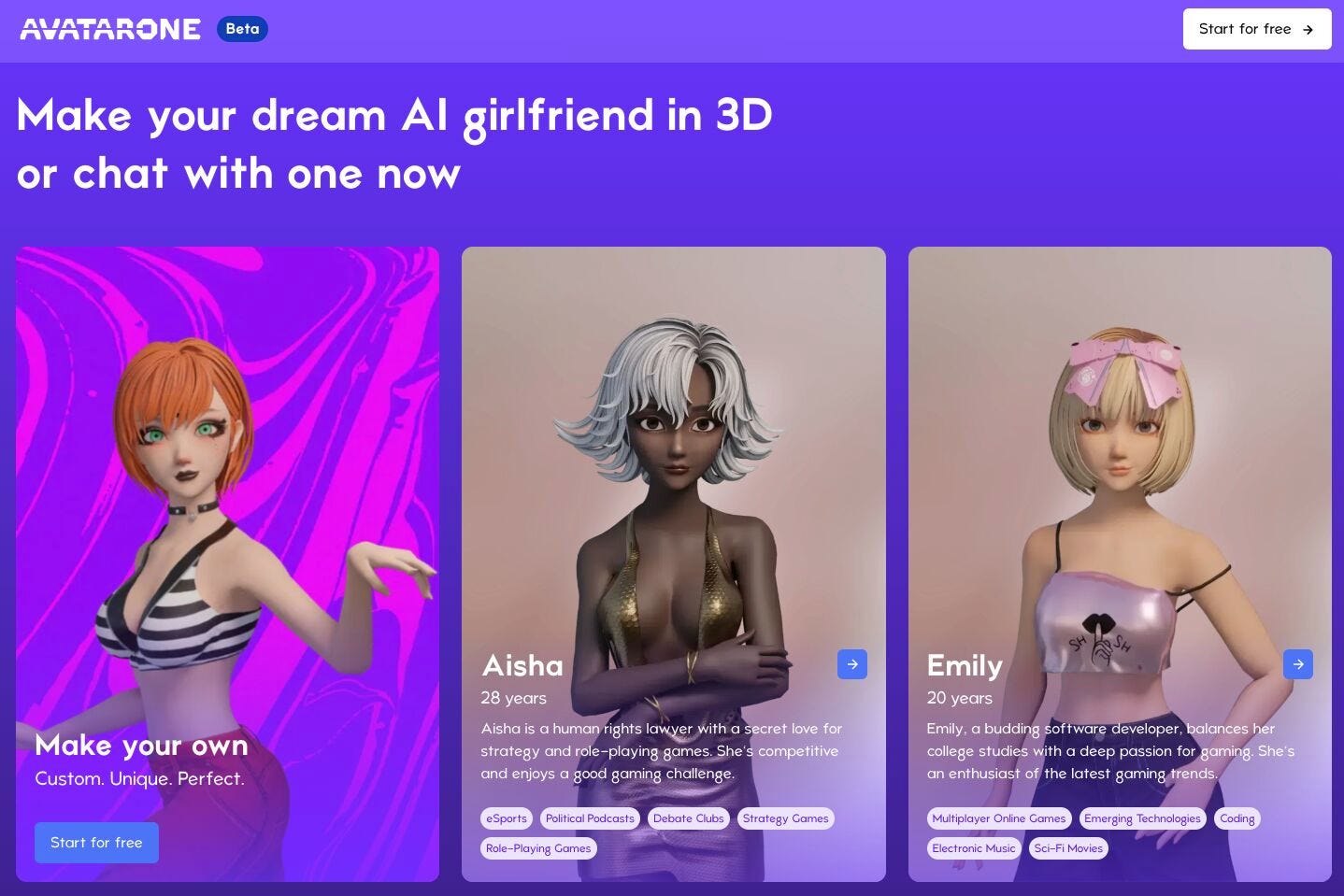

👾 Further thoughts on AI companions

This generation is growing up with "AI companions" and the idea that a romantic partner should be personalized to them: say what they want to hear, dress the way they please, and serve their needs, or else be deleted and replaced. I wonder how this will impact human relationships, bonding, and affection.

🎤 Are you looking for a speaker in AI, tech & privacy?

I would welcome the opportunity to:

➵ Give a talk at your company;

➵ Speak at your event;

➵ Coordinate a training program for your team.

➡️ Here's my short bio with links. Get in touch!

🔥 AI Governance is HIRING

Below are 10 AI governance positions posted LAST WEEK. Bookmark, share & be an early applicant:

1. AXA UK (UK) - AI Governance Lead - apply

2. The Coca-Cola Company (US) - Sr. Director Data Governance - apply

3. Airbus (Portugal) - Data Governance Specialist - apply

4. Visa (US) - Lead System Architect, AI Governance - apply

5. M&T Bank (US) - AI Governance Senior Consultant - apply

6. AstraZeneca (UK) - Head of Data & AI Governance and Policy - apply

7. Procom (Canada) - Intermediate PM for AI Governance - apply

8. Southern California Edison (SCE) - Advisor, AI Governance - apply

9. Artech L.L.C. (Canada) - Program Manager (AI Governance) - apply

10. adesso SE (Germany) - IT-Consultant generative AI Governance - apply

➡️ For more AI governance and privacy job opportunities, subscribe to our weekly job alert.

➡️ To upskill and advance your AI governance career, check out our AI training programs.

Good luck!

⏰ Reminder: upcoming AI training programs

The EU AI Act Bootcamp (4 weeks, live online)

🗓️ Tuesdays, July 16 to August 6 at 10am PT

👉 Learn more & register

Emerging Challenges in AI, Tech & Privacy (4 weeks, live online)

🗓️ Wednesdays, July 17 to August 7 at 10am PT

👉 Learn more & register

📩 Subscribe to the AI, Tech & Privacy Academy's Learning Center to receive info on our AI training programs and other learning opportunities.

I hope to see you there!

🙏 Thank you for reading!

If you have comments on this week's edition, write to me, and I'll get back to you soon.

To receive the next editions in your email, subscribe here.

Have a great day.

Luiza