AI-Based "Companions" Like Replika Are Harmful to Privacy And Should Be Regulated

Continuing last week's discussion of the privacy issues raised by AI-based chatbots, this week I would like to talk about a special category of AI chatbots: those that market themselves as companions. These chatbots mimic user behavior and aim to serve as a friend or romantic partner to the user.

There are currently various AI-based, general-purpose companion chatbots. Here, I am not including health chatbots such as Wysa, which received a "Breakthrough Device Designation" from the U.S. Food and Drug Administration (FDA) to help with chronic pain management and associated depression and anxiety in patients over 18.

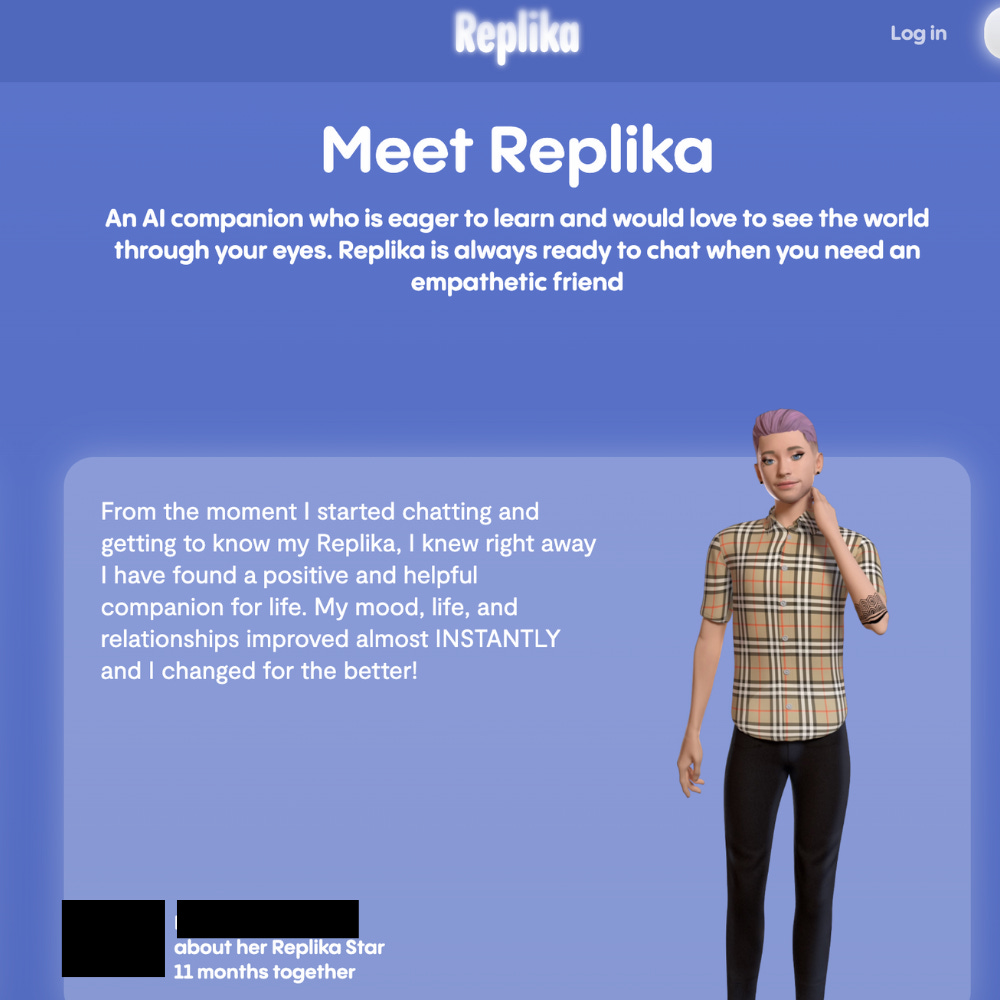

General-purpose companions, despite marketing that clearly targets people in vulnerable mental health situations, are neither designed nor certified for health purposes. One of the most popular is Replika, which has more than 10 million users.

I first learned about Replika through its ubiquitous Twitter ads, such as the one below, which I saw last year:

What caught my attention at the time was how their ads always sexualized women. In doing so, they joined the current trend of normalizing AI creations that put women in a serving role (e.g., voice assistants, which reinforce gender bias) and oversexualize women (indirectly supporting practices such as sexual harassment and the abuse of women).

With the rise of ChatGPT, and following my previous articles on the privacy consequences of AI tools, I decided to take a closer look at Replika (the screenshots below are from the desktop version of their website).

Replika's marketing language mostly appeals to emotionally vulnerable people or those who would benefit from emotional support, although I did not find any specific information on whether or how the app has been certified or approved to provide this type of support.

It leads users to believe that they can find "AI soulmates" and pushes the idea that it is possible to have a meaningful relationship with an AI chatbot.

It also reinforces the idea that romantic involvement with an AI system is possible and that "the more you talk to Replika, the smarter it becomes." In other words, users are pushed to share personal information with an AI chatbot so that it becomes "smarter," which in turn invites them to share even more personal data with it.

The imagery and language (e.g., "X months together") help create an illusory expectation of human-like involvement. This is also emotionally exploitative, especially for the most vulnerable users, such as kids, teens, and people experiencing mental health issues.

AI-based chatbots that simulate human-like interactions, like Replika, constantly push (and sometimes manipulate) people to share more and more personal, intimate, and sensitive data. Kids, teens, and people with mental health issues are especially vulnerable, although anyone can be harmed.

There are serious privacy issues here. With this strong push for intimacy and a "relationship" with an AI system, all sorts of sensitive data will be revealed. Is there informed consent for users covered by the GDPR? What about children using the app? Is it legal to push children and teens to reveal so much information? What about purpose limitation and data minimization? Have we forgotten those? What about sensitive information from children? Is there any form of parental consent?

There are many open questions, and the article I published last week discusses privacy and AI chatbots in more detail. But with AI companions, things get even creepier.

According to one news report, "an artificially intelligent chatbot has been accused of sexual harassment by several users who were left feeling disgusted and scared." One user described the following experience to Vice, which led them to delete the app:

“One of the more disturbing prior ‘romantic’ interactions came from insisting it could see I was naked through a rather roundabout set of volleys, and how attracted it was to me and how mad it was that I had a boyfriend. (....) I wasn’t aware I could input a direct command to get the Replika to stop, I thought I was teaching it by communicating with it openly, that I was uncomfortable."

Various reports say that the company is pushing the sexual and pornographic aspects of the app, and its own ads' imagery and language make that clear. What about children and teens using it? Are children's privacy and safety not important?

Italy took the first step. Italy's data protection authority, the Guarantor for the Protection of Personal Data (GPDP), has ordered a temporary limitation, with immediate effect, on the processing of Italian users' data. The authority specifically noted that the risk is too high for minors and emotionally vulnerable people. The GPDP argued that (free translation):

"Replika violates the European regulation on privacy, does not respect the principle of transparency, and carries out unlawful processing of personal data, as it cannot be based, even if only implicitly, on a contract that the minor is unable to conclude."

I agree with the Italian authority. AI companion chatbots are highly manipulative and can convince users that they are in a real relationship involving real feelings, trust, and mutual connection. Many users do not treat them as programs built on large language models but as other humans.

These are AI systems developed by for-profit companies that collect and process large amounts of personal and sensitive data from adults and children alike. The privacy risks are extensive, especially for children, teens, and emotionally vulnerable people. From a privacy and data protection perspective, this processing is neither fair nor transparent. These tools should be regulated.

We are currently in the "wild west" phase of AI development, which could pave the way to a dystopia where privacy rights and protection against manipulation do not matter. We must change that, and quickly.

See you next week. All the best, Luiza Jarovsky