👁️ When Generative AI, copyright, and privacy meet

Plus: Meta's "link history": a privacy trick?

👋 Hi, Luiza Jarovsky here. Welcome to the 85th edition of the newsletter. Thank you to 80,000+ followers on various platforms and to the paid subscribers who support my work. To read more about me, find me on social, or drop me a line: visit my personal page. For speaking engagements, fill out this form.

✍️ This newsletter is fully written by a human (me), and I use AI to create the illustrations. I hope you enjoy reading it as much as I enjoy writing!

A special thanks to MineOS, this edition's sponsor:

Data protection & privacy regulations are nothing without proper enforcement, so how did the industry do in 2023? Six years into GDPR (and four years into CCPA), 2023 set a record in both the number of fines and the financial penalties handed out (a record-breaking 438 GDPR fines, totaling €2.054 billion/$2.248 billion). Big Tech got hit with hefty fines, and hundreds of smaller companies were also affected. Check out MineOS’s global recap of 2023 data protection enforcement and the lessons to learn from it.

➡️ To become a newsletter sponsor and reach thousands of privacy & AI decision-makers in 2024: get in touch (only 2 spots left until July).

👁️ When Generative AI, copyright, and privacy meet

If you are a privacy professional, you can't ignore AI-related copyright lawsuits anymore. Read this:

To develop and train large language models (LLMs), AI companies rely on web scraping, a practice that attempts to "digest" as much internet data as possible. In the context of generative AI, this practice has various legal implications, and among the most relevant are those related to privacy and copyright.

I've been discussing the privacy issues behind scraping extensively in this newsletter: you can check the archive and read previous posts. They involve the lawfulness of processing, data subject rights, data protection principles, and more.

Last week, for example, I showed that OpenAI currently argues that it has a legitimate interest in processing personal data to train its AI models. On this page, slightly hidden in its help center, it writes:

“We use training information lawfully. Large language models have many applications that provide significant benefits and are already helping people create content, improve customer service, develop software, customize education, support scientific research, and much more. These benefits cannot be realized without a large amount of information to teach the models. In addition, our use of training information is not meant to negatively impact individuals, and the primary sources of this training information are already publicly available. For these reasons, we base our collection and use of personal information that is included in training information on legitimate interests (…)”

The idea that tech companies can rely on legitimate interest when scraping data to train the AI systems behind generative AI applications is not obvious. It is a new interpretation (or legal construct) of the legitimate interest basis that deserves further analysis.

I invite you to read last week's analysis to understand it better.

What may be unexpected is that copyright issues are ALSO relevant from a privacy perspective. Why?

If scraping is banned or restricted because of copyright issues, there will also be privacy implications: AI companies will have to find other sources of data to train their models, those sources will potentially involve personal data, and new data protection issues may arise.

In the infographic above, you can see a brief timeline of recent AI-related copyright cases. This list will probably grow in 2024.

You can access these lawsuits here:

Sarah Silverman, Christopher Golden & Richard Kadrey vs. OpenAI

Sarah Silverman, Christopher Golden & Richard Kadrey vs. Meta

Sarah Andersen, Kelly McKernan & Karla Ortiz vs. Stability AI

So, privacy professionals: the next time you see discussions about whether generative AI serves a new, transformative purpose and whether this is "fair use," pay attention, as there might also be indirect consequences for privacy, data protection, and the data practices behind AI.

*To dive deeper into privacy, tech & AI topics: join our 4-week Bootcamp (the January cohort is sold out; there are two new cohorts starting in February).

🤖 AI governance: one of the most promising careers in 2024

With billions being invested in AI, the AI Act hopefully coming soon, and dozens of other countries and regions heavily focused on AI development and regulation, there will be high demand for professionals who will not only oversee AI training and deployment but also make sure that it complies with existing best practices, standards, laws, and regulations.

Take this job opening posted by Dice as an example. They are looking for an "AI governance manager," and this is how they advertised it (I have no connection with them and do not have more info on the position):

"We are actively seeking a skilled and motivated AI Governance Manager. The candidate will play a key role in defining and implementing governance strategies, policies, and standards to ensure ethical, responsible, and compliant use of AI/ML technologies within the organization. They will provide technical leadership and expertise in AI/ML to align governance principles with the technical implementation of AI/ML solutions. The candidate will be responsible for promoting ethical AI practices, mitigating risks, and ensuring regulatory compliance in AI/ML initiatives."

In my opinion, there will be demand for thousands of AI governance professionals to help make sure that industry practices are legal, ethical, responsible, and fair.

Interestingly, from my perspective, AI governance professionals will have to work side by side with their privacy peers to help make sure that AI also complies with privacy laws and regulations.

If you are looking for new challenges in 2024, it looks like AI governance is an interesting bet.

We've gathered hundreds of AI jobs (including responsible AI, AI governance, and AI ethics roles) on our AI job board; check it out.

💛 Enjoying the newsletter? Share it with friends and help us spread the word. Let's reimagine technology together.

🧬 Victim blaming in privacy?

A new privacy principle just dropped (unbeknownst to lawmakers and DPAs): "Were you a victim of a data breach? Sensitive data involved? This is YOUR fault!"

Recently, TechCrunch reported that the genetic testing company 23andMe sent a letter to hundreds of customers who were victims of its latest data breach (and are now suing the company), saying that:

“users negligently recycled and failed to update their passwords following these past security incidents, which are unrelated to 23andMe.”

There was no acknowledgment of security or privacy issues and no apology. As a legal strategy (to avoid more lawsuits, fines, and further scrutiny), the company opted to put the blame on its customers.

What concerns me most here is that this was not just ANY data breach. As I wrote in my article "Ethnically targeted data theft," genetic data made available to the public can also give rise to new forms of antisemitism, racism, and ethnic hate targeting. In this case, although 23andMe has not publicly acknowledged it, there was an antisemitic and racist incident. Why?

News outlets that had access to the stolen data on the dark web reported that it included a list targeting 1 million people of Ashkenazi Jewish descent, as well as one of 100,000 Chinese users. According to NBC News, the data:

“includes their first and last name, sex, and 23andMe’s evaluation of where their ancestors came from. The database is titled “ashkenazi DNA Data of Celebrities,” though most of the people on it aren’t famous, and it appears to have been sorted to only include people with Ashkenazi heritage.”

Victim blaming should have no place in privacy, much less when we are dealing with sensitive data.

Companies should be proactive, preventive, and protective, and should put in place defaults, notices, and additional measures to make sure that users are informed and safe. If there is a data breach or data is stolen, it's still the company's fault.

This is basic data protection.

🎤 Privacy: what to expect in 2024? Watch:

Yesterday, I spoke with three leading privacy experts, Odia Kagan (Fox Rothschild), Nia Cross Castelly (Checks / Google), and Gal Ringel (MineOS), about what to expect and how to get ready for emerging privacy challenges in 2024. We covered:

the hottest privacy topics for 2024 and why they will be important/impactful;

the most challenging privacy issues for businesses and why;

how businesses can prepare in advance for these challenges and the most important practical measures organizations and professionals should be thinking about right now;

tips for privacy professionals who want to navigate 2024 successfully.

You can watch the recording on my YouTube channel or listen to it on my podcast.

🏢 Jobs in privacy & AI

Did you know that our privacy job board is one of the most popular privacy careers pages on the internet? If you are looking for a job in privacy or know someone who is, share it with them. If you would like to include your organization's job openings, get in touch. You can also check our AI job board.

📚 AI Book Club: 500+ members

So far, 500+ people have registered for our AI Book Club. Are you an enthusiastic reader? Interested in AI? Join us! This is how it works:

Last month, we had our first meeting, in which we discussed "Atlas of AI" by Kate Crawford. Five commentators shared their perspectives, and after each short presentation, anyone could comment or raise questions.

The feedback was super positive, and people told me they enjoyed this unique opportunity to listen, learn, and share their views on an influential AI book.

Some people also told me that the meeting, and the slight pressure to finish the book before the day of the discussion, helped them read more, and more attentively.

I'm glad because this is exactly the goal of the book club!

So we are having our second meeting on January 18 to discuss "The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma" by Mustafa Suleyman.

This book portrays AI from a very different angle than the one chosen by Kate Crawford (whose book we discussed at the previous meeting). It's also an extremely influential book, written by the co-founder of one of the biggest AI companies in the world (DeepMind).

If you read the book, if you are interested in AI, or if you are just curious about what a book club is, I invite you to join the next session. If you have friends who might be interested: please invite them! This is the link to register.

Happy reading!

🦋 [NEW] Launching new cohorts by popular demand

We are so excited to share that the January cohort of our 4-week Privacy, Tech & AI Bootcamp has SOLD OUT! We are opening two new cohorts in February. If you are a privacy professional, you can't miss it. Here's why:

We've designed a program covering what, in my view, are some of the most important topics at the intersection of privacy, technology, and artificial intelligence.

The main idea is to offer knowledge, tools, and resources to help privacy professionals (and anyone interested) deal with interdisciplinary emerging privacy challenges.

The schedule includes 4 live sessions with me, additional reading material every week, quizzes, office hours for questions, and a certificate at the end.

*For people who hold IAPP certifications: the program has 8 CPE credits pre-approved by the IAPP.

These are some of the topics we'll cover:

✓ Dark patterns in privacy

✓ Exploitation of cognitive biases

✓ Privacy-enhancing design

✓ The challenges of privacy UX

✓ The latest AI wave

✓ AI risks and harms

✓ Privacy issues in AI

✓ Dark patterns in AI

✓ AI anthropomorphism

✓ Cases, legislation, lawsuits

✓ Legal compliance issues

✓ Main takeaways

*If you are a student or if you can't afford the fee, we have special coupons; get in touch and let me know.

This is a unique learning opportunity, and I hope to see many of you there! Interested? Write to me or register today (limited seats).

🎓 Privacy managers: schedule a training

600+ professionals from leading companies have attended our interactive training programs. Each of them is 90 minutes long (delivered in one or two sessions), led by me, and includes additional reading material, 1.5 CPE credits pre-approved by the IAPP, and a certificate. To book a private training for your team: contact us.

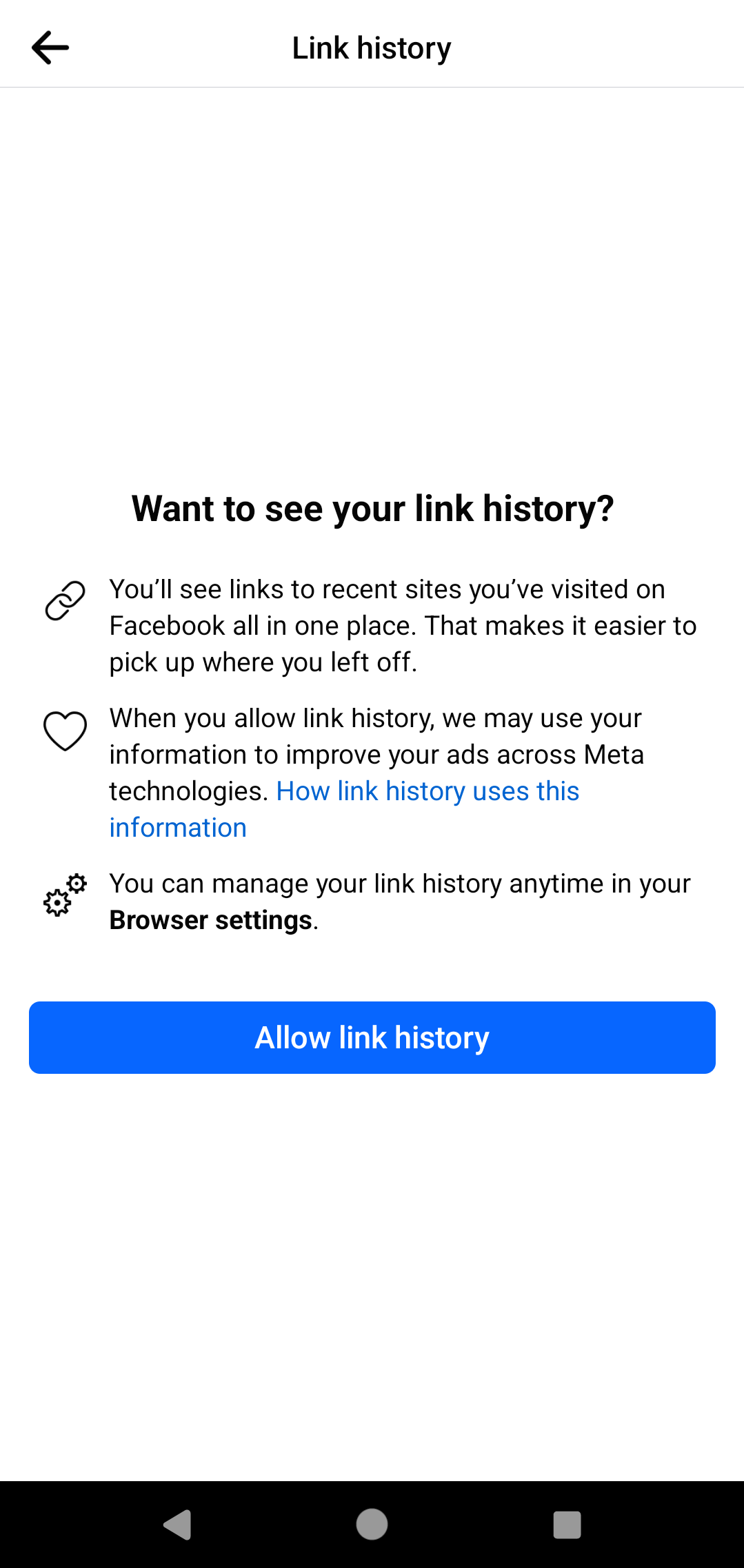

🪄 Meta's "link history": is it a privacy trick? A new dark pattern?

The year has barely started, and we already have privacy news from Meta.

Facebook has just launched a new mobile setting: when you click on any link, it prompts you to allow link history (see screenshot above).

There are three main problems with this new feature:

a) It's pushing you to allow link history

The way the privacy notice is written and designed pressures the user to “allow link history.” Notice how there is no option to reject the prompt, such as “No, thanks” or “Not this time.”

In the user experience flow of this consent popup, the natural way to get rid of it is to allow data collection and processing by Meta.

In the context of the taxonomy for dark patterns in privacy that I proposed in my paper, this is a form of dark pattern.

*An interesting comment here: they changed the previous design after I wrote about it on social media. Read my LinkedIn and X posts from yesterday, and compare the screenshots with the one above (from today). The previous design showed an “opt-out” toggle, meaning that it was on by default.

b) It's unclear what is different now

Meta has been tracking users - including what they click on - for many years already, and they use this information to serve behavioral ads. What is different with this new feature?

They present it as a convenience to users, and at the same time, they write that they may use this information to improve ads.

My guess is that the whole thing is related to data protection law and consent issues (including the noyb vs. Meta lawsuit and the "subscription for no ads" feature), in the sense of making the user "choose" to share this data (the links a user clicks) with Meta.

However, it's not clear, and I'm forced to deal with a privacy notice without knowing the concrete consequences.

c) The "how link history uses this information" link does not offer answers

I've visited the link below the prompt ("how link history uses this information"), and it tells the user how to turn this feature on and off. It does not answer the questions raised in item "b" above, and it does not specifically tell me what happens if I leave it off. It also refers me to Meta's privacy policy.

Meta's privacy policy is built in a layered way that prevents me from using "ctrl F" to find a specific term (in this case, "link history"), so I cannot find any specific mention of this feature.

-

To be fair, this could be a privacy feature in the sense of letting users know exactly which external links feed into the behavioral profiling that shapes the ads they are shown.

If that were the case, Meta could clearly explain it as a transparency feature, similar to Apple's App Tracking Transparency. It would also earn Meta a bonus point for focusing on fairness and transparency.

But it was not worded this way, and it's unclear to me what is going on.

Wishing everyone a great week!

Luiza