🏁 EU AI Liability: A Game Changer

AI Policy, Compliance & Regulation | Edition #148

👋 Hi, Luiza Jarovsky here. Welcome to the 148th edition of this newsletter on the latest developments in AI policy, compliance & regulation, read by 38,900+ subscribers in 155+ countries. I hope you enjoy reading it as much as I enjoy writing it!

💎 In this week's AI Governance Professional Edition, I’ll explore the interplay between the new EU Product Liability Directive (published today) and the EU AI Act, highlighting why some AI companies might consider EU liability issues a dealbreaker. Paid subscribers will receive it on Friday. If you are not a paid subscriber yet, upgrade your subscription to receive two weekly editions (this free newsletter + the AI Governance Professional Edition), access all previous analyses, and stay ahead in the rapidly evolving field of AI governance.

🗓️ Two weeks left to register! This December, join me for a 3-week intensive AI Governance Training program (8 live lessons; 12 hours total), already in its 15th cohort. Join over 1,000 people who have benefited from our training programs. Learn more and register here.

🏁 EU AI Liability: A Game Changer

Today, the new Product Liability Directive was published in the Official Journal of the European Union, and it will enter into force in 20 days. What most people don't know is that it applies to AI systems, and it might have a major impact on the AI ecosystem in the EU.

If you have been following the news, you've likely heard tech executives argue that for countries to support AI development, AI companies cannot be held liable for the outputs of their AI systems—otherwise, they claim, they would go broke.

At the same time, legal liability systems can follow different approaches, including fault-based, strict liability, and hybrid versions of these. The legal nuances of the adopted approach will be extremely relevant and will determine, for example, if the victim will bear the full burden of proof or if they will be able to rely on a presumption of defectiveness.

╰┈➤ Why is EU AI liability a game changer? There will be an important interplay between this new Product Liability Directive, the AI Liability Directive, the EU AI Act, and additional national liability rules. The AI Liability Directive is not ready, so we do not have the full picture yet. However, the changes brought by this Directive, when read together with some of the EU AI Act's rules, might prove strict when compared to other liability systems, and I can foresee companies deciding not to offer their AI products in the EU. I will expand on this discussion in this week's paid newsletter edition on Thursday.

╰┈➤ In any case, it is clear that the new Product Liability Directive published today introduces significant changes to the existing EU liability framework applied to AI systems. Below is a quick summary of six key issues related to EU AI liability that everyone in the AI field should know. I invite you to pay special attention to item 4:

1️⃣ The new directive expressly acknowledges that AI—and the need to compensate victims of AI-related harm—was one of the factors that made it necessary to update the old product liability directive. It also applies to AI. According to Recital 3:

"Directive 85/374/EEC [the old product liability directive] has been an effective and important instrument, but it would need to be revised in light of developments related to new technologies, including artificial intelligence (AI), new circular economy business models and new global supply chains, which have led to inconsistencies and legal uncertainty, in particular as regards the meaning of the term ‘product’. Experience gained from applying that Directive has also shown that injured persons face difficulties obtaining compensation due to restrictions on making compensation claims and due to challenges in gathering evidence to prove liability, especially in light of increasing technical and scientific complexity. That includes compensation claims in respect of damage related to new technologies. The revision of that Directive would therefore encourage the roll-out and uptake of such new technologies, including AI, while ensuring that claimants enjoy the same level of protection irrespective of the technology involved and that all businesses benefit from more legal certainty and a level playing field."

2️⃣ AI providers—defined as such according to the EU AI Act—should be treated as manufacturers (Recital 13).

3️⃣ "Where a substantial modification is made (...) due to the continuous learning of an AI system, the substantially modified product should be considered to be made available on the market or put into service at the time that modification is actually made." (Recital 40)

4️⃣ The "black box" paradox is also addressed and may result in a presumption of defectiveness. Recital 48 establishes that when:

a) the defectiveness of an AI product;

b) the causal link between the damage and the defectiveness; or

c) both

are excessively difficult to prove, national courts may presume the defectiveness of the product, the causal link, or both. National courts should make this assessment on a case-by-case basis.

5️⃣ The new Product Liability Directive is an EU directive, and as such, it is not directly applicable in EU Member States. Each Member State will have to enact national legislation to implement it.

6️⃣ We still need the AI Liability Directive, which has not been approved yet.

╰┈➤ I will expand on this discussion, particularly the interplay between this new Product Liability Directive and the EU AI Act, in Thursday's paid edition of this newsletter. If you're not a paid subscriber yet and would like to receive the full analysis, upgrade your subscription today.

📜 The 1st Draft of GPAI Code of Practice

The EU has recently published the first draft of the General-Purpose AI (GPAI) Code of Practice. It’s official, and it’s ready for review. Here's what everyone in the AI field needs to know:

1️⃣ The European AI Office facilitated the drafting of the code, which was prepared by independent experts appointed as chairs and vice-chairs of four working groups.

2️⃣ The draft was developed based on contributions from providers of general-purpose AI models while also considering international approaches.

3️⃣ This is not the final version of the code, and feedback is welcome. The final version of the code is expected to establish clear objectives, measures, and KPIs.

4️⃣ What are codes of practice, and what should they cover?

➜ Answer: According to Article 56(2) of the EU AI Act:

"The AI Office and the Board shall aim to ensure that the codes of practice cover at least the obligations provided for in Articles 53 and 55, including the following issues:

(a) the means to ensure that the information referred to in Article 53(1), points (a) and (b), is kept up to date in light of market and technological developments;

(b) the adequate level of detail for the summary about the content used for training;

(c) the identification of the type and nature of the systemic risks at Union level, including their sources, where appropriate;

(d) the measures, procedures and modalities for the assessment and management of the systemic risks at Union level, including the documentation thereof, which shall be proportionate to the risks, take into consideration their severity and probability and take into account the specific challenges of tackling those risks in light of the possible ways in which such risks may emerge and materialise along the AI value chain."

5️⃣ What's the relevance of codes of practice in the context of the AI Act?

➜ Answer: Providers of general-purpose AI models may rely on codes of practice to demonstrate compliance with AI Act obligations until harmonized standards are published.

⛔ Effective Mitigations for AI's Systemic Risks

The paper "Effective Mitigations for Systemic Risks from General-Purpose AI" by Risto Uuk, Annemieke Brouwer, Tim Schreier, Noemi Dreksler, Valeria Pulignano, and Rishi Bommasani is a great read for everyone in AI:

╰┈➤ The study surveyed 76 domain experts on their views of the effectiveness of 27 risk mitigation measures in the context of general-purpose AI models.

╰┈➤ These were the measures that saw notable levels of agreement for specific risk areas:

1️⃣ "Third-party pre-deployment model audits:

62-87% agreed that independent safety assessments of models before deployment, with auditors given appropriate access for testing, would effectively reduce systemic risk in each risk area. 98% of experts thought the measure was technically feasible.

2️⃣ Safety incident reporting and security information sharing:

70-91% agreed that disclosure of AI incidents, near-misses, and security threats to relevant stakeholders would effectively reduce systemic risk in each risk area. 98% of experts thought the measure was technically feasible.

3️⃣ Whistleblower protections:

66-75% agreed that policies ensuring safe reporting of concerns without retaliation or restrictive agreements would effectively reduce systemic risk in each risk area. 99% of experts thought the measure was technically feasible.

4️⃣ Pre-deployment risk assessments:

70-86% agreed that comprehensive assessment of potential misuse and dangerous capabilities before deployment would effectively reduce systemic risk in each risk area. 99% of experts thought the measure was technically feasible.

5️⃣ Risk-focused governance structures:

59-79% agreed that implementation of board risk committees, chief risk officers, multi-party authorisation requirements, ethics boards, and internal audit teams would effectively reduce systemic risk in each risk area. 99% of experts thought the measure was technically feasible.

6️⃣ Intolerable risk thresholds:

51-78% agreed that clear red lines for risk or model capabilities set by a third-party that trigger immediate development or deployment halt would effectively reduce systemic risk in each risk area. 97% of experts thought the measure was technically feasible.

7️⃣ Input and output filtering:

51-84% agreed that monitoring for dangerous inputs and outputs would effectively reduce systemic risk in each risk area. 98% of experts thought the measure was technically feasible.

8️⃣ External assessment of testing procedure:

63-83% agreed that third-party evaluation of how companies test for dangerous capabilities would effectively reduce systemic risk in each risk area. 97% of experts thought the measure was technically feasible."

🎓 AI Literacy as a Legal Obligation

Did you know AI literacy is a legal obligation under the EU AI Act, and companies outside the EU will be affected? Here's what you need to know:

╰┈➤ Article 4 of the EU AI Act covers AI literacy obligations for providers and deployers of AI systems:

"Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used."

╰┈➤ Recital 20 brings more information about the topic:

→ "In order to obtain the greatest benefits from AI systems while protecting fundamental rights, health and safety and to enable democratic control, AI literacy should equip providers, deployers and affected persons with the necessary notions to make informed decisions regarding AI systems. Those notions may vary with regard to the relevant context and can include understanding the correct application of technical elements during the AI system’s development phase, the measures to be applied during its use, the suitable ways in which to interpret the AI system’s output, and, in the case of affected persons, the knowledge necessary to understand how decisions taken with the assistance of AI will have an impact on them.

→ In the context of the application this Regulation, AI literacy should provide all relevant actors in the AI value chain with the insights required to ensure the appropriate compliance and its correct enforcement. Furthermore, the wide implementation of AI literacy measures and the introduction of appropriate follow-up actions could contribute to improving working conditions and ultimately sustain the consolidation, and innovation path of trustworthy AI in the Union.

→ The European Artificial Intelligence Board (the ‘Board’) should support the Commission, to promote AI literacy tools, public awareness and understanding of the benefits, risks, safeguards, rights and obligations in relation to the use of AI systems. In cooperation with the relevant stakeholders, the Commission and the Member States should facilitate the drawing up of voluntary codes of conduct to advance AI literacy among persons dealing with the development, operation and use of AI."

╰┈➤ Article 4 will apply in less than 3 months, on February 2, 2025.

╰┈➤ Companies covered by the EU AI Act and classified as providers or deployers of AI systems (hint: not only EU-based companies; check Article 2) should prepare for this legal obligation.

*If you are looking for AI governance training, the 15th cohort of my intensive training starts in two weeks; see below.

🗓️ Two weeks left to register!

If you are dealing with AI-related challenges, you can't miss our acclaimed live online AI Governance Training—now in its 15th cohort. In December, we’re offering a special intensive format: all 8 lessons with me (12 hours of live learning) condensed into just 3 weeks, wrapping up before the holidays.

→ This is an excellent opportunity to jumpstart 2025 and advance your career, especially for those who couldn’t participate earlier due to other commitments.

→ Our unique curriculum, carefully curated over months and constantly updated, focuses on AI governance's legal and ethical topics, helping you elevate your career and stay competitive in this emerging field.

→ Over 1,000 professionals from 50+ countries have advanced their careers through our programs, and alumni consistently praise their experience—see their testimonials.

🗓️ Are you ready? There are two weeks left to register for the December cohort. Learn more and register today:

*You can also sign up for our learning center to receive updates on future training programs along with educational and professional resources.

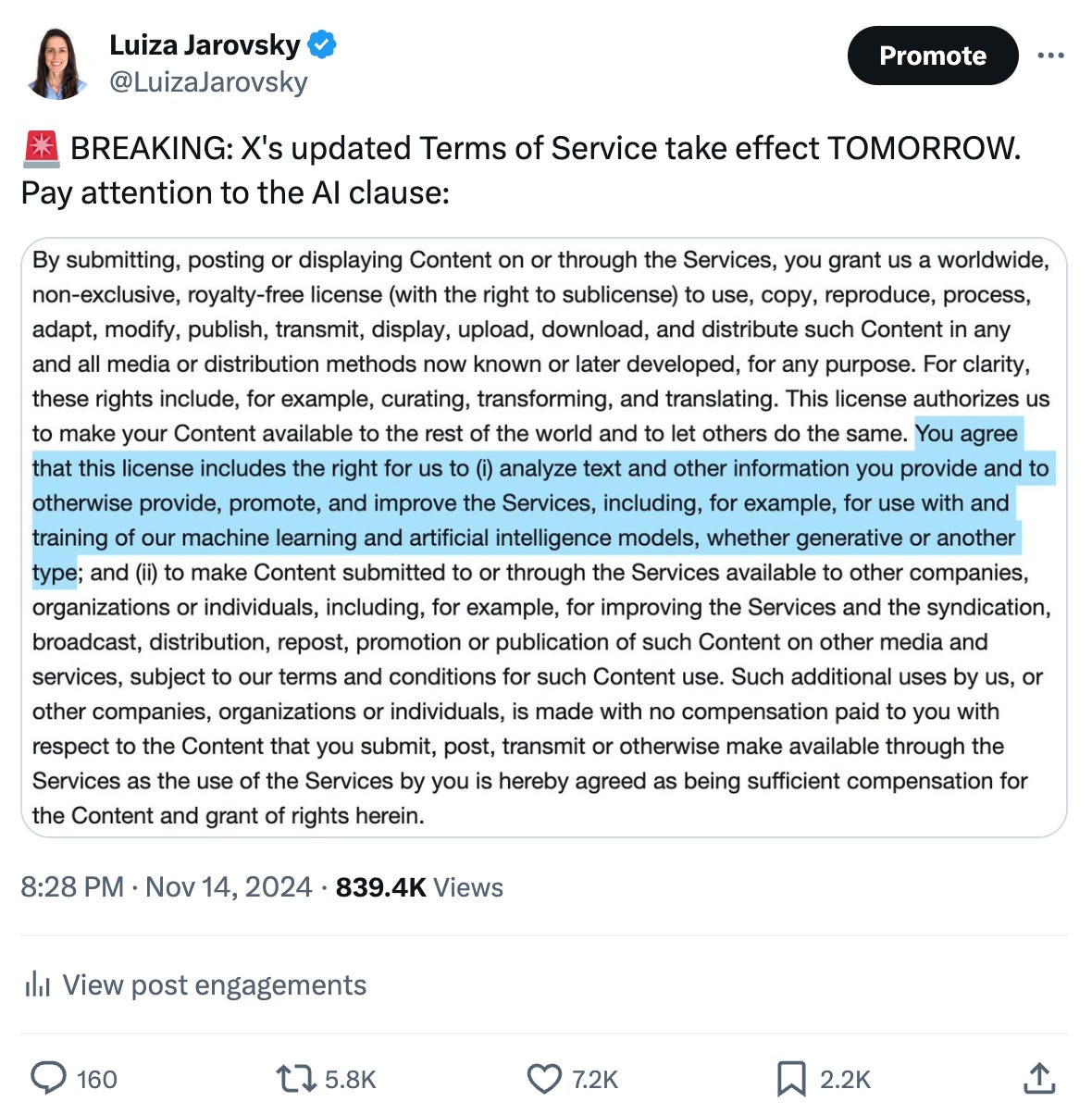

🐦 X's updated Terms of Service

X's updated Terms of Service took effect on November 15th. Pay attention to the AI clause:

What are your thoughts on this clause? Join the discussion on LinkedIn or Twitter/X.

🌶️ Fair Enough AI

The paper "Fair Enough AI," by Tal Zarsky and Jane Bambauer, discusses the lack of concrete fairness standards and the inevitable tradeoffs in fairness decisions. It is a must-read for everyone in the AI field and is full of 🌶 spicy statements:

"Given the cross-cutting goals and societal aspirations that affect how decision-making will be perceived, defining and creating a “fair” algorithm is primarily a policy task rather than a matter of technology or pure logic. This fact has been absorbed in the legal scholarship for some time. The trouble is, recent AI regulatory frameworks have demonstrated an unwillingness to state which types of unfairness will be tolerated in order to avoid other forms of unfairness. Implementing one measure to promote fairness might at time generate or exacerbate fairness on another dimension. 🌶 We suspect that vagueness and abdication of decision-making will be a feature of the AI public policy debates for the foreseeable future. 🌶 Setting priorities not only raises disagreements between regulators, it causes a good deal of heartburn for each individual lawmaker, too, who will have to answer to media inquiries, firms, and voters who come armed with examples of bias, opaqueness, inaccuracy, and privacy intrusions which will follow, no matter what option she chooses. 🌶The public is not prepared for a frank admission that it is acceptable for a large AI company to decide, in advance, that it is ok to implement an algorithm that will be wrong more often for one group than another. 🌶 Nor is it prepared to hear that the same company decided in advance to reduce accuracy for everybody in order to relieve some forms of bias (but not all)"

"🌶 Some charges of unfairness are more valid than others. An accusation that an algorithm is inaccurate, biased, overly opaque, or too gamable will be valid if the faults are unnecessary—that is, if they are known or reasonably discoverable and can be corrected without significantly degrading other forms of fairness. Thus, while we have emphasized that ethical tradeoffs must be made during AI design, 🌶 that is only true for applications and designs that have already made every Pareto-efficient improvement. If an AI application needlessly compromises accuracy, bias, or some other aspect of fairness, it deserves criticism. Any time a company can make improvements for minimal costs along the other dimensions of fairness, they should. The criticisms that worry us are those that are made without any attempt to assess whether the perceived problem is easy to fix (without tradeoffs) or is difficult, requiring compromise between values."

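For readers who want to see the "Pareto-efficient improvement" idea in concrete terms, here is a minimal Python sketch. It is my own illustration, not something taken from the paper: the metric names and scores are hypothetical, and it assumes every metric is scored so that higher is better. A change counts as a "free" fix only if it improves at least one dimension of fairness without making any other dimension worse.

```python
def pareto_dominates(candidate: dict, baseline: dict) -> bool:
    """Return True if `candidate` is at least as good as `baseline` on every
    metric and strictly better on at least one (higher scores are better)."""
    if candidate.keys() != baseline.keys():
        raise ValueError("Both designs must be scored on the same metrics")
    no_worse_anywhere = all(candidate[m] >= baseline[m] for m in baseline)
    better_somewhere = any(candidate[m] > baseline[m] for m in baseline)
    return no_worse_anywhere and better_somewhere


# Hypothetical scores for three versions of the same AI application.
current = {"accuracy": 0.90, "group_parity": 0.80, "transparency": 0.50}
free_fix = {"accuracy": 0.90, "group_parity": 0.85, "transparency": 0.50}
tradeoff = {"accuracy": 0.88, "group_parity": 0.90, "transparency": 0.50}

# Improves parity at no cost elsewhere: criticism for not adopting it is valid.
free_fix_is_improvement = pareto_dominates(free_fix, current)

# Improves parity but lowers accuracy: this is a genuine policy tradeoff.
tradeoff_is_improvement = pareto_dominates(tradeoff, current)
```

In this sketch, `free_fix_is_improvement` is `True` and `tradeoff_is_improvement` is `False`, which mirrors the distinction the authors draw between faults that are unnecessary and faults that can only be fixed by compromising another value.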
🎙️ This Thursday: Live Talk with Gary Marcus

If you are interested in AI, particularly in how we can ensure it works for us, you can't miss my live conversation with Gary Marcus [register here]:

→ Marcus is one of the most prominent voices in AI today. He is a scientist, best-selling author, and serial entrepreneur known for anticipating many of AI's current limitations, sometimes decades in advance.

→ In this live talk, we'll discuss his new book "Taming Silicon Valley: How We Can Ensure That AI Works for Us," focusing on Generative AI's most imminent threats, as well as Marcus' thoughts on what we should insist on, especially from the perspective of AI policy and regulation. We'll also talk about the EU AI Act and U.S. regulatory efforts and the false choice, often promoted by Silicon Valley, between AI regulation and innovation.

→ This will be the 20th edition of my AI Governance Live Talks, and I invite you to attend live, participate in the chat, and learn from one of the most respected voices in AI today. Don't miss it!

👉 To join the live session, register here. I hope to see you on Thursday!

🎬 Find all my previous live conversations with privacy and AI governance experts on my YouTube Channel.

📚 AI Book Club: What Are You Reading?

📖 More than 1,800 people have joined our AI Book Club and receive our bi-weekly book recommendations.

📖 The 14th recommended book was The Quantified Worker - Law and Technology in the Modern Workplace by Ifeoma Ajunwa.

📖 Ready to discover your next favorite read? See our previous reads and join the book club here.

📋 A Legal Framework for eXplainable AI

The paper "A Legal Framework for eXplainable AI," by Aniket Kesari, Daniela Sele, Elliott Ash, and Stefan Bechtold, is an excellent read for everyone in AI; here's why:

╰┈➤ As we observe the fast and ubiquitous way in which AI-powered systems are being adopted, the black box effect—the complexity of explaining AI decisions and outcomes—becomes even more relevant. Explainable AI, or XAI, is the attempt to tackle the black box effect.

╰┈➤ According to a recent publication by the European Data Protection Supervisor (EDPS), explainable AI is:

"the ability of AI systems to provide clear and understandable explanations for their actions and decisions. Its central goal is to make the behaviour of these systems understandable to humans by elucidating the underlying mechanisms of their decision-making processes."

╰┈➤ Among the challenges of explaining an automated decision, the authors highlight three:

1️⃣ "The technical challenge of finding a method that allows for human-understandable explanations of complex algorithms"

➜ "largely driven by software developers’ desire to understand and debug their own products and systems"

2️⃣ "The legal challenge of determining whether the law imposes certain requirements on an explanation"

➜ "sometimes determined by statute or case law, although often the law only states in the abstract that automated decision-making systems need to provide transparency and explainability"

3️⃣ "The question of what kinds of explanations are useful to a human decision subject, such that the subject can better understand and, thereby, accept or challenge an automated decision."

➜ "a behavioral and political one that implicates broader values for when and why we require reason-giving in legal contexts"

╰┈➤ The paper then proposes a much-needed legal taxonomy for XAI, taking into consideration the following dimensions:

➜ Scope: Global vs. Local

➜ Depth: Comprehensive vs. Selective

➜ Alternatives: Contrastive vs. Non-contrastive

➜ Flow: Conditional control vs. Correlation
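
The four dimensions above can be read as a small data structure. The Python sketch below is only an illustration under my own assumptions: the class and function names are hypothetical and do not come from the paper, and the paper may define the dimensions with more nuance than a simple two-way choice.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Scope(Enum):
    GLOBAL = "global"
    LOCAL = "local"


class Depth(Enum):
    COMPREHENSIVE = "comprehensive"
    SELECTIVE = "selective"


class Alternatives(Enum):
    CONTRASTIVE = "contrastive"
    NON_CONTRASTIVE = "non-contrastive"


class Flow(Enum):
    CONDITIONAL_CONTROL = "conditional control"
    CORRELATION = "correlation"


@dataclass(frozen=True)
class ExplanationProfile:
    """One position in the taxonomy: a value for each of the four dimensions."""
    scope: Scope
    depth: Depth
    alternatives: Alternatives
    flow: Flow

    def describe(self) -> str:
        # Join the four chosen values into a readable label.
        parts = (self.scope, self.depth, self.alternatives, self.flow)
        return ", ".join(part.value for part in parts)


def all_profiles() -> list:
    """Enumerate every combination of the four two-valued dimensions."""
    return [
        ExplanationProfile(s, d, a, f)
        for s, d, a, f in product(Scope, Depth, Alternatives, Flow)
    ]


# Example: an explanation of a single decision that names only the key
# factors, compares against an alternative outcome, and reports correlations.
example = ExplanationProfile(
    Scope.LOCAL, Depth.SELECTIVE, Alternatives.CONTRASTIVE, Flow.CORRELATION
)
```

Treating each dimension as a binary choice gives 2 × 2 × 2 × 2 = 16 possible explanation profiles, which can help when mapping a legal requirement to the kind of explanation it actually calls for.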

╰┈➤ In the context of trustworthy and human-centric AI (a topic at the core of AI regulations such as the EU AI Act), the authors also stress the importance of putting affected individuals on center stage:

"This is crucial for several reasons. First, it aligns the development of AI technologies with the principles of user-centric design, emphasizing the need to make AI systems understandable and accessible to those directly affected by their outputs. By prioritizing the perspective of the end-users, XAI can address the real-world impact of AI decisions, fostering a more inclusive and democratic approach to technology development."

🙈 Can AI Ignore Copyright?

If you are interested in AI copyright, you can't miss last week's special AI Governance Professional edition, where I covered the main unsolved issues in the context of AI and copyright infringement, as well as three recent legal decisions that might signal how things will evolve in the coming months and help us understand the future of copyright in the age of AI.

Read the preview here. If you're not a paid subscriber, upgrade your subscription to access all previous and future analyses.

🔥 Job Opportunities in AI Governance

Below are 10 new AI Governance positions posted in the last few days. This is a competitive field: if it's a relevant opportunity, apply today:

🇬🇧 Mastercard: Director, AI Governance - apply

🇺🇸 Lenovo: Director, AI Governance - apply

🇮🇪 Logitech: AI Governance Manager - apply

🇺🇸 Dropbox: Sr. AI Governance Program Manager - apply

🇮🇳 Novo Nordisk: Head of AI governance - apply

🇺🇸 Barclays: AI Governance & Oversight VP - apply

🇸🇰 PwC Slovakia: AI Governance Manager - apply

🇨🇭 Xebia: Data & AI Governance Consultant - apply

🇺🇸 Allegis Group: Lead Privacy Analyst, AI Governance - apply

🇩🇪 Questax: Digital & AI Governance Advisor - apply

🔔 More job openings: subscribe to our AI governance & privacy job boards and receive our weekly email with job opportunities. Good luck!

🙏 Thank you for reading!

If you have comments on this edition, write to me, and I'll get back to you soon.

AI is more than just hype—it must be properly governed. If you found this edition valuable, consider sharing it with friends and colleagues to help spread awareness about AI policy, compliance, and regulation. Thank you!

Have a great day.

All the best, Luiza