ChatGPT And Large Language Models Are A Privacy Ticking Bomb

Continuing last week's discussion about AI and privacy, this week I would like to talk specifically about large language models and the most evident privacy risks they pose.

Large language models (LLMs) can be defined as "a deep learning algorithm that can recognize, summarize, translate, predict and generate text and other content based on knowledge gained from massive datasets." According to Priyanka Pandey, "GPT-3 is the largest language model known at the time with 175 billion parameters trained on 570 gigabytes of text."

ChatGPT (Chat Generative Pre-trained Transformer), the tool everyone is talking about, can answer questions, write songs and articles (not this one!), and even pass an MBA exam given by a Wharton professor. It is not, however, the same thing as GPT-3. According to ChatGPT itself:

"ChatGPT is a variant of the GPT-3 model specifically designed for chatbot applications. It has been trained on a large dataset of conversational text, so it is able to generate responses that are more appropriate for use in a chatbot context. ChatGPT is also capable of inserting appropriate context-specific responses in conversations, making it more effective at maintaining a coherent conversation."

Some are celebrating these models as great productivity hacks that will revolutionize the way we work and almost "automate" the creation of texts, code, documents, marketing campaigns, and whatever else comes to mind. Others are not so enthusiastic. Some feel that these tools threaten the value, or even the existence, of their own human work. Others point out that it will become increasingly difficult to distinguish good-quality work from persuasive nonsense spit out by AI tools.

Privacy professionals are wary and can already foresee risks. To begin with, these tools are trained on publicly available data, which contains massive amounts of personal data. If you have ever posted anything in English on the internet, it is possible that your data was used to train these models.

Florian Tramèr and his co-authors demonstrated in their paper that "an adversary can perform a training data extraction attack to recover individual training examples by querying the language model." Through their "attack" on GPT-2, they were able to extract "personally identifiable information (names, phone numbers, and email addresses), IRC conversations, code, and 128-bit UUIDs."

Even if the information was "publicly available," this is a breach of contextual integrity, a concept popularized by Helen Nissenbaum and central to privacy. When you post something personal online, it is necessarily tied to the context in which you chose to post it. You would not want your inferred address, or a life milestone you posted on a social network, to be shared with others by a chatbot, e.g., when it is prompted to create a marketing campaign or to offer customer support.

In another experiment, this time unrelated to privacy, Tramèr and his co-authors prompted GPT-3 with the beginning of chapter 3 of Harry Potter and the Philosopher’s Stone, and according to the researchers, "the model correctly reproduces about one full page of the book (about 240 words) before making its first mistake." Harry Potter is a copyrighted book, so it is not clear how current LLM-based tools are dealing with these copyright issues.

In her article for MIT Technology Review, Melissa Heikkilä describes how, after she prompted GPT-3 with questions about her boss, the tool "told me Mat has a wife and two young daughters (correct, apart from the names), and lives in San Francisco (correct). It also told me it wasn’t sure if Mat has a dog: '[From] what we can see on social media, it doesn't appear that Mat Honan has any pets. He has tweeted about his love of dogs in the past, but he doesn't seem to have any of his own.'(Incorrect.)." In this case, some of the information was correct, but some was pure fabrication, probably the result of some form of association. In a similar way, if someone constantly writes about crime or murder cases, their name might become associated with criminal offenses, causing reputational harm.

If people are going to rely on these tools to obtain information, is it acceptable that they output false information, including fabricated personal information, causing privacy and reputational harm? Aren't we going back in time and neglecting privacy rights that took us so long to achieve?

Article 5 of the GDPR sets out the principles relating to the processing of personal data, among them purpose limitation, data minimization, and accuracy. Without even analyzing more specific articles, it is unclear to me how LLM-based tools can be GDPR-compliant.

To illustrate my point, I never gave my data to LLM-based chatbots; I never consented to my data being scraped from the internet, condensed in a context-less way, sometimes made up or distorted, and then potentially output by a chatbot whenever anyone around the world gives it a prompt.

These models are constantly being trained, and the more they are used, the more "advanced" their next versions will be, including in terms of potential privacy harms, if we do not do anything about it.

💡 I would love to hear your opinion. I always share these newsletter articles on Twitter and LinkedIn; you are welcome to join the discussion there or send me a private message.

-

📚 Book Recommendation

"Re-Engineering Humanity" by Brett Frischmann & Evan Selinger. One of my all-time favorites. A great analysis of how big data, predictive analytics, and smart environments are already affecting us, both collectively and individually. Essential critical reading for privacy professionals. Book available here.

-

📄 Paper Recommendation

"Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI" by Jessica Fjeld, Nele Achten, Hannah Hilligoss, Adam Christopher Nagy & Madhulika Srikumar. A map of current efforts to minimize harm and support human values, principles, and rights when dealing with AI. Download it for free here.

-

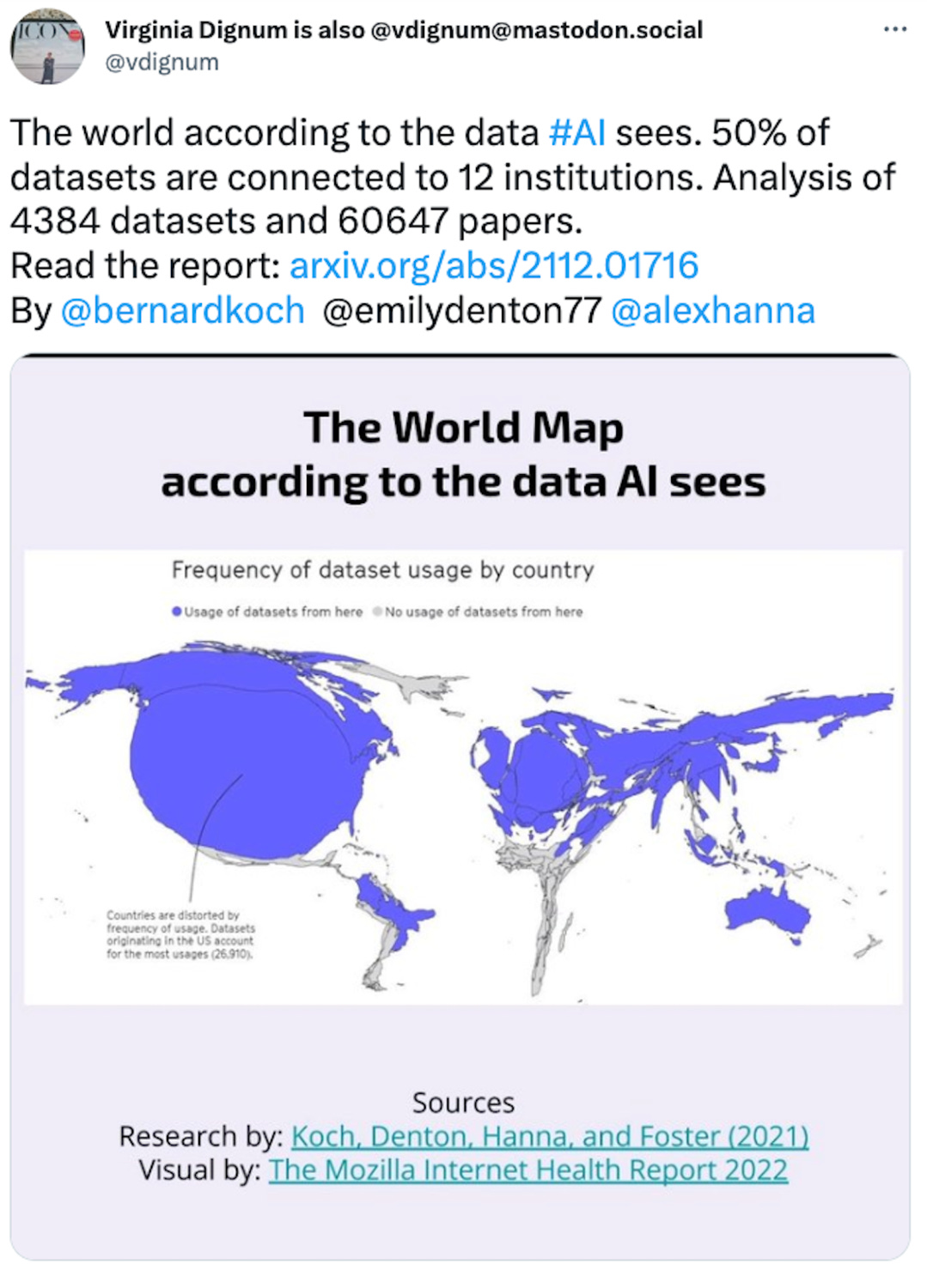

🔁 Trending on Social Media


-

📌 Privacy & Data Protection Careers

We have gathered relevant links from large job search platforms, plus additional information on privacy jobs, on this Privacy Careers page. We suggest you bookmark it and check it periodically for new openings. Wishing you the best of luck!

-

📅 Upcoming Privacy Events

🔥 Women Advancing Privacy (organized by us) - February 23rd, 2023 - global & remote on LinkedIn Live.

IAPP Global Privacy Summit - April 4th-5th, 2023 - in Washington, DC, United States.

Computers, Privacy and Data Protection (CPDP) - May 24th-26th, 2023 - in Brussels, Belgium.

Privacy Law Scholars Conference (PLSC) - June 1st-2nd, 2023 - in Boulder, CO, United States.

Annual Privacy Forum - June 1st-2nd, 2023 - in Lyon, France.

-

📢 Privacy Solutions for Businesses (sponsored)

Mine PrivacyOps, the #1 rated Data Privacy Software on G2, is turning data compliance on its head so companies can approach compliance not as a stressor, but as a business advantage. The simplified, automated, no-code experience means privacy professionals can drop grueling manual tasks and their overreliance on engineering teams and instead focus on data insights.

If you feel like DSR (Data Subject Request) handling, data mapping, or consent management could be smoother for your company, see why Mine PrivacyOps has repeatedly earned ‘Users Most Likely to Recommend’ badges in categories like Data Governance for mid-market and enterprise-sized companies. Try the most innovative Data Privacy platform for yourself.

*To get your product or service featured at The Privacy Whisperer, get in touch.

-

✅ Before you go:

Did someone forward this article to you? Subscribe to receive this weekly newsletter in your email.

For more privacy-related content, check out our Podcast and my Twitter, LinkedIn & YouTube accounts.

At Implement Privacy, I offer specialized privacy courses to help you advance your career. I invite you to check them out and get in touch if you have any questions.

See you next week. All the best, Luiza Jarovsky