👋 Hi, Luiza Jarovsky here. Welcome to the 72nd edition of The Privacy Whisperer, and thank you to 80,000+ followers on various platforms. Read about my work, invite me to speak, tell me what you've been working on, or just say hi here.

This week's edition is sponsored by Didomi:

Benchmark your consent collection performance. Didomi's comprehensive research, enriched by millions of data points, provides an in-depth analysis of the consent landscape in 2023. Understand the significance of consent rates, compare your performance with industry peers, and unveil the most effective consent banner formats. Download the whitepaper and improve your data privacy strategy today.

🤖 Against AI anthropomorphism

The post above - from someone on OpenAI's AI safety team - is a great opportunity to raise awareness about dark patterns in AI.

I proposed that dark patterns in AI are AI-based applications or features that attempt to make people:

believe that a particular sound, text, picture, video, or any sort of media is real/authentic when it was AI-generated (deepfakes);

believe that a human is interacting with them when it's an AI-based system (anthropomorphism).

The post above is an example of anthropomorphism, as the author is attributing human characteristics ("emotional, personal conversation"; also suggesting that the conversation is probably therapy) to an AI-based system (ChatGPT). To learn more about the topic, read my article "Dark Patterns in AI: Privacy Implications."

Anthropomorphism can cause real-world harm, such as when an AI chatbot suggests that people harm themselves or others, when it leads people to behave in ways that are not in their best interests, or when it exploits people in vulnerable situations. In a recent case, a Belgian man died by suicide after an AI chatbot called “Eliza” encouraged him to sacrifice himself to stop climate change.

One interesting example in the context of data protection compliance was the ban in Italy of Replika, an AI-based companion. The Italian Data Protection Authority concluded that the risk was too high for minors and emotionally vulnerable people. I discussed the topic in my article "AI-Based 'Companions' Like Replika Are Harmful to Privacy And Should Be Regulated."

AI-based systems are not human beings, and users should be constantly reminded that they are dealing with a computer equipped with sophisticated maths - not a person.

Companies that develop AI should be required to implement safety measures - at all levels, including communication - to make sure that people are not at risk of harm due to anthropomorphism or deepfakes.

Unfortunately, this is not happening yet.

📖 AI book club

In the 1st edition of our AI Book Club, we will read “Atlas of AI” by Kate Crawford and discuss it on December 14th. See my post about it, register for the AI book club, and invite friends to participate.

🎥 Top 10 privacy movies

Which ones are missing (and which ones would you remove from the list)? Send me your suggestions!

1- The Conversation (1974): "A paranoid, secretive surveillance expert has a crisis of conscience when he suspects that the couple he is spying on will be murdered"

2- Enemy of the State (1998): “A lawyer becomes targeted by a corrupt politician and his N.S.A. goons when he accidentally receives key evidence to a politically motivated crime”

3- The Truman Show (1998): "An insurance salesman discovers his whole life is actually a reality TV show"

4- Minority Report (2002): "In a future where a special police unit is able to arrest murderers before they commit their crimes, an officer from that unit is himself accused of a future murder"

5- The Lives of Others (2006): "In 1984 East Berlin, an agent of the secret police, conducting surveillance on a writer and his lover, finds himself becoming increasingly absorbed by their lives"

6- The Social Network (2010): "As Harvard student Mark Zuckerberg creates the social networking site that would become known as Facebook, he is sued by the twins who claimed he stole their idea and by the co-founder who was later squeezed out of the business"

7- Disconnect (2012): "A drama centered on a group of people searching for human connections in today's wired world"

8- The Circle (2017): "A woman lands a dream job at a powerful tech company called the Circle, only to uncover an agenda that will affect the lives of all of humanity"

9- The Great Hack (2019): "The Cambridge Analytica scandal is examined through the roles of several affected persons"

10- The Social Dilemma (2020): "Explores the dangerous human impact of social networking, with tech experts sounding the alarm on their own creations"

🖥️ Privacy & AI in-depth

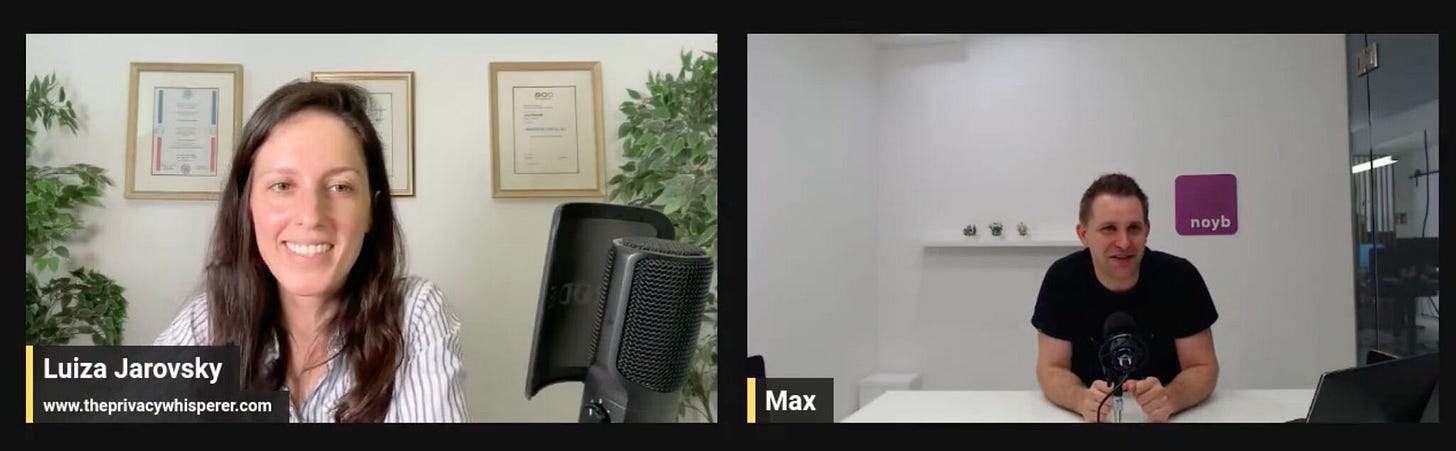

Every month, I host a live conversation with a global expert in privacy or AI. I've spoken with Max Schrems, Dr. Ann Cavoukian, Prof. Daniel Solove, Dr. Alex Hanna, Prof. Emily Bender, and many other industry leaders. Watch the recordings on my YouTube channel or my podcast, and register for the upcoming session on October 25th with Katharine Jarmul. Who would you like to see next?

🤖 Humans Vs. AI

AI can be helpful with certain tasks in certain contexts, but it has limitations, and human work is behind every step. The image below also gives a hint about important skills to focus on in the years to come. Would love to hear your thoughts.

📌 Jobs in Privacy & AI

Are you looking for a job in privacy? Want to start a career in AI? Check out our job boards. Good luck!

🔎 Case Study: GoDaddy

[Every week, I discuss tech companies’ best and worst practices - so that readers can avoid their mistakes and spread best practices further. The case studies are available to Paid Subscribers and some people in the Leaderboard].

It's not news that GoDaddy - a domain registrar and web hosting company - uses UX dark patterns. Today I would like to comment on one of these dark patterns, which is so unfair that it blows my mind that it is still shown to people trying to buy a domain.