What about Privacy & Fairness? An Open Letter to AI Leaders

I recently read Bill Gates' latest blog post, "The Age of AI Has Begun," and today's open letter from the Future of Life Institute, "Pause Giant AI Experiments," signed by Elon Musk, Steve Wozniak (Apple's co-founder), and hundreds of other tech leaders, researchers, and professors. I also read the mission statement of OpenAI (the company behind ChatGPT), "Planning for AGI and Beyond," written last month by its CEO, Sam Altman. I want to raise some essential issues that I found missing from these documents, specifically those related to the protection of privacy, autonomy, and human dignity in the context of AI development and expansion.

Without a broader discussion that includes ethics, fairness, transparency, and privacy issues and involves a variety of voices, communities, and points of view, we might be building technology that will end up generating negative externalities that are more difficult to fix than the problems it was trying to tackle in the first place. This could resemble, to some extent, the social media problems we still do not know how to fix, but with AI-amplified potential for damage.

In the three documents, I did not see any meaningful discussions on how to tackle the following:

bias: biased and discriminatory information being output by AI tools, perpetuating historical and systemic forms of prejudice, exclusion, and discrimination;

homogeneity of thought and ideas: with the growth of AI, people will obtain and spread information from these AI tools and will be disincentivized from producing new forms of knowledge or looking for alternative sources;

lack of democratic participation of users: control of AI will follow the control of tech, which today is in the hands of a small and unrepresentative elite. Without mechanisms for active participation and intervention, AI will be an additional force amplifying inequalities;

falsification of reality: tools such as deepfakes and chatbots can be applied in the context of realistic falsifications of pictures, videos, and conversations, fostering manipulation, disinformation, polarization, democratic destabilization, hate, intimate privacy violations, stigma, violence, etc.;

personification: tools such as chatbots and deepfakes can be used to manipulate and harm people by pretending to be human;

AI hallucinations: fabricated outputs with the potential to cause disinformation and reputational harm;

personally identifiable information in training datasets: its use disregards the contextual integrity of the settings in which it was originally made available online, as well as data protection principles;

neglect of data subjects' rights, such as transparency, access, rectification, erasure, and so on;

neglect of data protection principles, such as lawfulness, fairness, transparency, purpose limitation, data minimization, accuracy, storage limitation, and accountability;

intellectual property infringement and lack of compensation to artists, writers, journalists, and creators whose work is being scraped and used to train AI systems;

etc.

In my view, these are some of the issues that should be top of mind for anyone developing or investing in AI. My area of expertise is privacy, so the points above are only part of the bigger picture, seen from my privacy-focused and personal perspective.

There are brilliant experts in fields such as AI ethics, sociology, anthropology, psychology, law, and philosophy who should be consulted and be an integral part of the AI discussion. It will be difficult to realize any optimistic vision of AI if we do not tackle these and many other issues raised from non-engineering points of view and by communities with different histories, experiences, and concerns around the world.

Tech design has been heavily influenced by computer scientists and engineers, while other disciplines have had a diminished structural impact. The decision-making power has also been restricted to a small, non-representative, non-elected elite. If we want to build machines that serve and support humans, human societies, and human values, this must change: people from the humanities and social sciences must have a more central role, as must people from different communities around the world, bringing different voices, opinions, and ideas.

Additionally, tech design and tech development have not been democratic in any true sense. It is usually the same tech elite that controls existing technologies and already controls AI development. In social media, this lack of participation has contributed to inequality, discrimination, disinformation, polarization, violence, and radicalization - to name a few issues. If AI is to be a positive social force, democratic participation should happen by design - and urgently.

Bill Gates ends his blog post by stating the three principles that should be guiding the AI conversation:

We should try to balance fears about the downsides of AI with its ability to improve people’s lives

With reliable funding and the right policies, governments and philanthropy can ensure that AIs are used to reduce inequity

We’re only at the beginning of what AI can accomplish. Whatever limitations it has today will be gone before we know it

I would argue that these are optimistic statements, assumptions, and perhaps goals, but in my view, they do not offer a value-based approach to help guide the AI conversation. We could begin with:

Transparency, fairness, and autonomy: AI should have humans in mind and support human development and human autonomy. Humans should know when AI is involved; AI design should be transparent and fair; policies, risks, and warnings should be clearly communicated, etc.

Accountability, responsibility, privacy, and safety: organizations that offer AI tools should be held accountable for the social and individual impact their technology causes; privacy by design, security, and effective policies to protect people and societies should be in place from the very beginning; measures to evaluate risks and harms should be ongoing, etc.

Democratic participation and interdisciplinarity: AI should always be an interdisciplinary project involving experts from various fields of knowledge, including humanities and social sciences - which have been traditionally sidelined in the technology design process. People impacted by AI tools from different communities and parts of the world should participate in their design process, help evaluate risks and propose improvements before, during, and after launch, be able to intervene and claim violations or unfairness, etc.

This is my point of view, and various other opinions and perspectives - outside the AI/tech bubble - should be heard.

I look forward to seeing multiple voices, communities, disciplines, and points of view taken into consideration so that we can collectively build an AI future that will be supportive of human beings, human values, and human societies. It is clear that the age of AI has begun. But now, the age of AI that supports privacy, fairness, and human dignity has to begin.

--

🎤 Upcoming events

In the next edition of 'Women Advancing Privacy', I will talk with Prof. Nita Farahany about her new book "The Battle for Your Brain: Defending the Right to Think Freely in the Age of Neurotechnology," as well as issues related to the protection of cognitive liberty and privacy in the context of current AI and neurotechnology challenges.

Prof. Farahany is a leader and pioneer in the field of ethics of neuroscience. This will be a fascinating conversation that you cannot miss. I invite you to join our LinkedIn Live session and bring questions. To watch our previous events, check out my YouTube channel.

--

🔁 Trending on social media

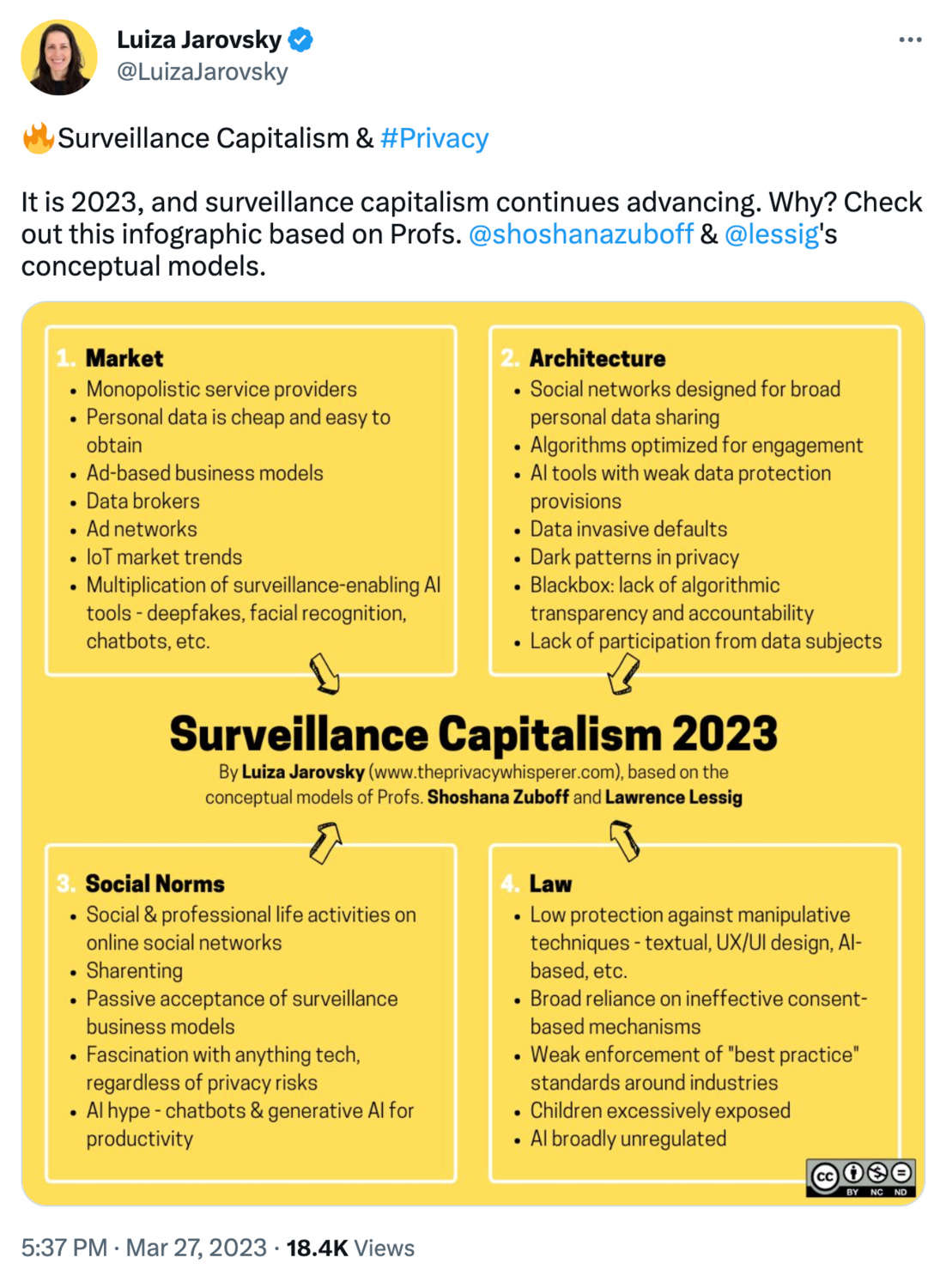

Surveillance Capitalism and Privacy - interact with this Tweet here.

--

📌 Privacy & data protection job search

We have gathered various links from job search platforms and privacy-related organizations on our Privacy Careers page. We are constantly adding new links, so we suggest you bookmark it and check it periodically for new openings.

--

✅ Before you go:

If you enjoyed this newsletter, consider sharing and inviting people to subscribe to The Privacy Whisperer.

For more privacy-related content, check out The Privacy Whisperer Podcast and my Twitter, LinkedIn & YouTube accounts.

At Implement Privacy, I offer privacy courses that can help you broaden your privacy-related knowledge and advance your career. Check them out.

See you next week. All the best, Luiza Jarovsky