🚧 Unethical AI Marketing

AI's Legal and Ethical Challenges | Edition #194

Hi, Luiza Jarovsky here. Welcome to the 194th edition of my newsletter, read by 59,000+ subscribers worldwide. It's great to have you here!

Paid subscribers never miss my full analyses of AI's legal and ethical challenges, dissecting the latest developments in AI governance (3 to 4 times a week).


🚧 Unethical AI Marketing

As I often write in this newsletter, the future of AI doesn't exist yet. We are shaping it now.

With that in mind, from legal and ethical perspectives, we should constantly think about and assess the types of AI systems that should be allowed to exist, and those that should be discontinued or never enabled.

What are the AI systems that will support and empower us as humans and societies, helping us be happier, healthier, and more productive?

What are the AI systems that will negatively impact our well-being, health, social fabric, and fundamental rights?

These discussions are happening, and assessments often focus on AI's technical characteristics: training methods, capabilities, computation, modalities, size, use type, system design, safety profile, guardrails, and more.

Technical risk assessments are essential. However, less explored areas such as marketing and design should also be central to the AI governance debate.

Why?

The way companies choose to market an AI product directly affects not only how people use it, but also how they see AI, how they feel about themselves and their place in society, and how they understand the world.

That's the power of narratives, branding, and communication.

Companies understand this very well, and they invest a significant part of their budget in marketing and branding strategies. They pay creative professionals millions to think about every detail of how they present their products.

With that in mind, AI governance shouldn't ignore marketing. If we want to shape the future of AI, we must also think about what AI companies should be allowed to advertise and promise, and what kinds of narratives they should be allowed to promote, from both ethical and legal perspectives.

Today, I'll share two examples from existing AI companies to illustrate my point, and I'll conclude with some thoughts about the future.

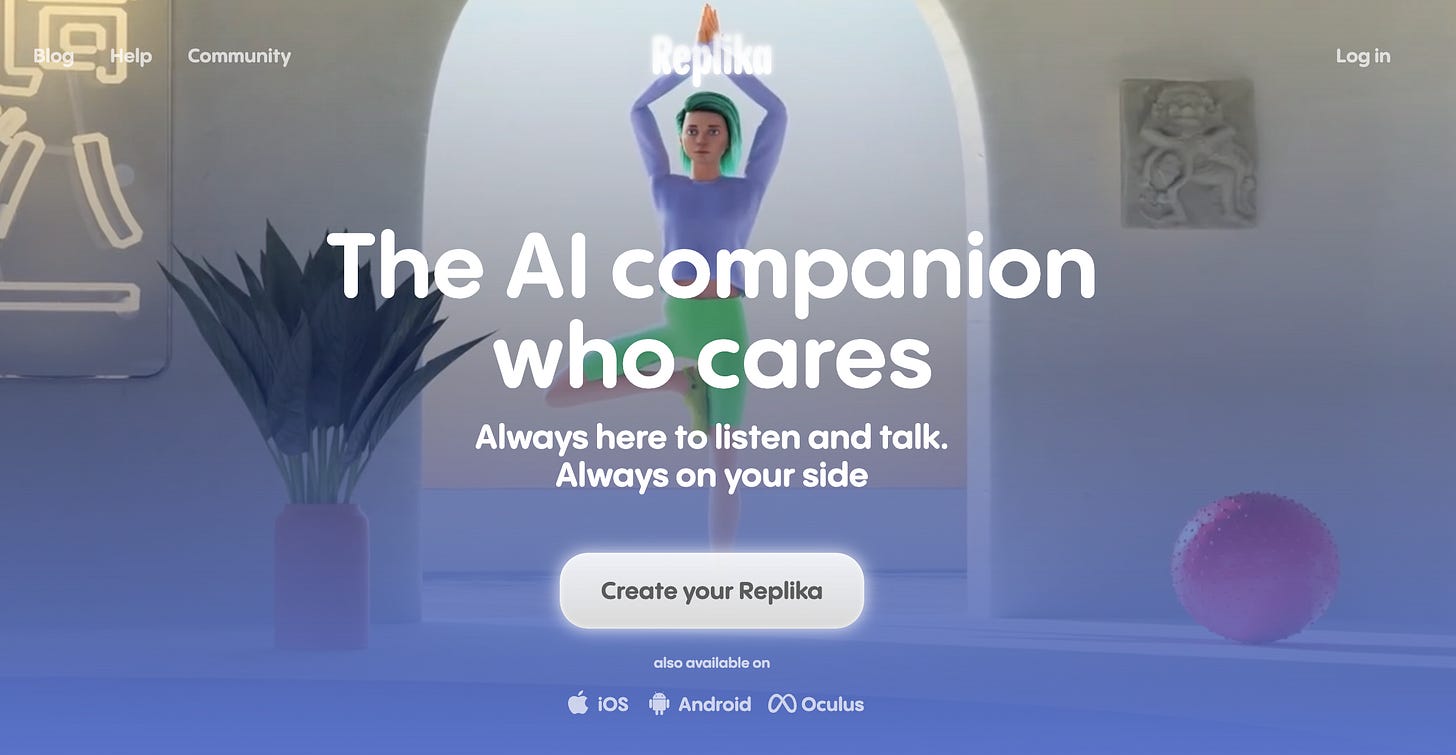

1. Replika

Above is a screenshot, taken today, of the homepage of Replika, one of the most popular AI companions on the market.

Should an AI company be allowed to advertise that:

Its AI companion “cares” (does an AI system care? Is that a true statement?)

Its AI companion is “always here to listen and talk” (what if it's legally suspended, as Replika once was by the Italian Data Protection Authority? What about users who become extremely dependent on it? Is it fair to make that promise?)

Its AI companion is “always on your side” (is it healthy or advisable to have that expectation of a one-on-one interaction? What if the AI behaves unexpectedly? How will it affect human relationships?)

As the participants in my AI Governance Training know, I'm deeply uncomfortable with this company's marketing strategy, and I have much more to say about it (you're welcome to join the next cohort!).

The following is another AI company with a questionable marketing strategy focused on replacing the parent-child relationship:
