🌷Top 10 privacy, tech & AI books of 2023

Happy holidays!

👋 Hi, Luiza Jarovsky here. Welcome to the 83rd edition of the newsletter! Thank you to 80,000+ followers on various platforms and to the paid subscribers who support my work. To read more about me, find me on social, or drop me a line: visit my personal page. For speaking engagements, fill out this form.

✍️ This newsletter is fully written by a human (me), and I use AI to create the illustrations. I hope you enjoy reading it as much as I enjoy writing it!

A special thanks to Didomi, this edition's sponsor:

Take your privacy governance to the next level with Didomi's Advanced Compliance Monitoring, a comprehensive solution that streamlines your cookie and tracker management, saving you substantial time and resources typically spent on manual monitoring. This compliance monitoring tool efficiently oversees your vendor list, automatically identifying new active vendors, adding them to your Consent Management Platform, and republishing your consent banner accordingly. Learn more by visiting Didomi's page.

📢 Support this newsletter: become a monthly sponsor and reach thousands of privacy & AI decision-makers throughout the year. Get in touch.

🌷Top 10 privacy, tech & AI books of 2023

Continuing this newsletter's tradition, today I want to share my list of the top 10 books in privacy, tech, and AI published in 2023. (The list is not in order of preference.) If you want to get smarter, understand the current zeitgeist in privacy, tech & AI, and broaden your horizons: read them all.

*If you are an enthusiastic reader, sign up for our AI book club: more than 350 people have registered, and we meet online every month to discuss an AI-related book. In January, we'll discuss “The Coming Wave” by Mustafa Suleyman. Join us!

“Your Face Belongs to Us: A Secretive Startup's Quest to End Privacy as We Know It” by Kashmir Hill

“Unmasking AI: My Mission to Protect What Is Human in a World of Machines” by Joy Buolamwini

“The Worlds I See: Curiosity, Exploration, and Discovery at the Dawn of AI” by Fei-Fei Li

“The Chaos Machine: The Inside Story of How Social Media Rewired Our Minds and Our World” by Max Fisher

“The Battle for Your Brain: Defending the Right to Think Freely in the Age of Neurotechnology” by Nita Farahany

“More than a Glitch: Confronting Race, Gender, and Ability Bias in Tech” by Meredith Broussard

“Fancy Bear Goes Phishing: The Dark History of the Information Age, in Five Extraordinary Hacks” by Scott Shapiro

“Practical Data Privacy: Enhancing Privacy and Security in Data” by Katharine Jarmul

“Extremely Online: The Untold Story of Fame, Influence, and Power on the Internet” by Taylor Lorenz

“The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma” by Mustafa Suleyman with Michael Bhaskar

What's your top privacy, tech & AI book of 2023?

Happy reading!

🐻 Generative AI vs. privacy

Generative AI developers are trying to obscure privacy concerns, and we should fight back. Read this:

Were we notified that our Facebook and Instagram photos were being used to train an AI system? No.

Should we have been notified? Yes.

Why? Because perhaps we wouldn't have posted the same photos, or we would have chosen another service.

People should have a choice. One of the central definitions of privacy, Alan Westin's, says that privacy is:

"the claim of individuals, groups, or institutions to determine for themselves when, how, and to what extent information about them is communicated to others."

Westin wrote this in his book “Privacy and Freedom” in 1967, when home computers didn't even exist yet. It was revolutionary.

It was also prescient: modern privacy laws and the "notice and consent" (US) and "informed consent" (EU) models closely follow the premise that Westin proposed.

GDPR principles and data subject rights also follow this central idea.

Then, suddenly, generative AI and applications based on large language models (LLMs) became ubiquitous, and their developers decided that all the content posted on the internet was fair game.

There is no contextual integrity anymore (see Helen Nissenbaum's work): whatever is posted in one context (for example, Facebook) can be scraped and used in another context (LLM training).

No warnings or notices are required, except perhaps a line buried in a privacy policy?

Where is our choice? Agency? Transparency?

The intersection of AI and privacy issues is the topic of two of the Privacy, Tech & AI Bootcamp's modules (next cohort in January); you can register here.

Being human also means having choices, and we should fight back.

💛 Enjoying the newsletter? Share it with friends and help us spread the word. Let's reimagine technology together.

📎 Job opportunities

Are you looking for a job in privacy? Transitioning to AI governance? There are hundreds of open positions available worldwide. Check out our global privacy job board and AI job board. Good luck!

🎓 Privacy managers: check out our training programs

620+ professionals from leading companies have attended our interactive training programs. Each of them is 90 minutes long (delivered in one or two sessions), led by me, and includes additional reading material, 1.5 CPE credits pre-approved by the IAPP, and a certificate. To book a training program: contact us.

📚 AI Book Club

350+ people have signed up for our AI Book Club, and last week we had our first meeting (discussing "Atlas of AI" by Kate Crawford).

Thank you to everyone who joined, listened, and participated, especially the book commentators Gregory Manwelyan, Oana Iordachescu, Adeteju Enunwa, Marlon Domingus, and Melanie Tan! The feedback was overwhelmingly positive, and I'm so glad we can have this unique and productive space to discuss AI, privacy, ethics, fairness, and the future of technology.

Our next meeting will be on January 18, and we'll talk about "The Coming Wave" by Mustafa Suleyman & Michael Bhaskar. To join the book club, register here.

🚫 Say NO to sharenting: protect children's privacy

With the holiday season bringing a flood of children's pictures to social media, this is a good moment for a reminder to avoid sharenting. Why? Read this:

If you have kids (or take care of kids), it is a bad idea to document their lives on social media. This behavior is called sharenting, and it can have negative consequences for the child.

Most adults don't realize they are sharing their child's pictures online to get the dopamine hit that comes with likes, comments & shares. Being seen by the parent's online connections (or by strangers) brings no benefit to the child.

There is also the problem of consent: children are often too young to understand what is going on, and even when they can understand and tell their parents how they feel about it, they are frequently not consulted (the parent simply takes a picture and posts it to get likes).

It is possible that a few years from now, the child will be very unhappy to discover that so many private moments were shared online by the parent.

This is already happening, and some are suing their parents, as the first generation of children born in the social media age is now turning 18 and discovering that their private lives were used to get publicity and social validation for their parents.

I do not think that most parents have bad intentions.

We, the parents, are from a different generation, and what we find acceptable and cute, they might see as wrong and inexcusable.

Whatever is uploaded online, even if shared only “with friends,” has the potential to circulate forever. It takes only a screenshot or a re-upload.

There are also serious privacy and security issues involved in sharing anything about a child or teenager online, including identity fraud, exposing the child to predators, and cyberbullying.

Depending on the child's age, mental health issues and a loss of trust may also emerge toward a parent or caretaker who does not respect the child's boundaries and personal wishes.

I wrote an article about the topic in my newsletter and would like to invite you to check it out: "Your Child's Privacy is Worth More Than Likes" (link below).

Alternatives to sharenting:

if you want to connect with relatives and close friends, why not send the picture or video privately using a secure channel?

if you want to talk about parenthood online, why not do so without exposing the child?

Best solutions:

why not enjoy being with the child without posting anything? They will love it;

the world will remain the same if you do not post any child's pictures online. If you really need to post something, post a selfie.

If you think this topic is relevant to your network, consider sharing: here are my LinkedIn and my X posts on the topic.

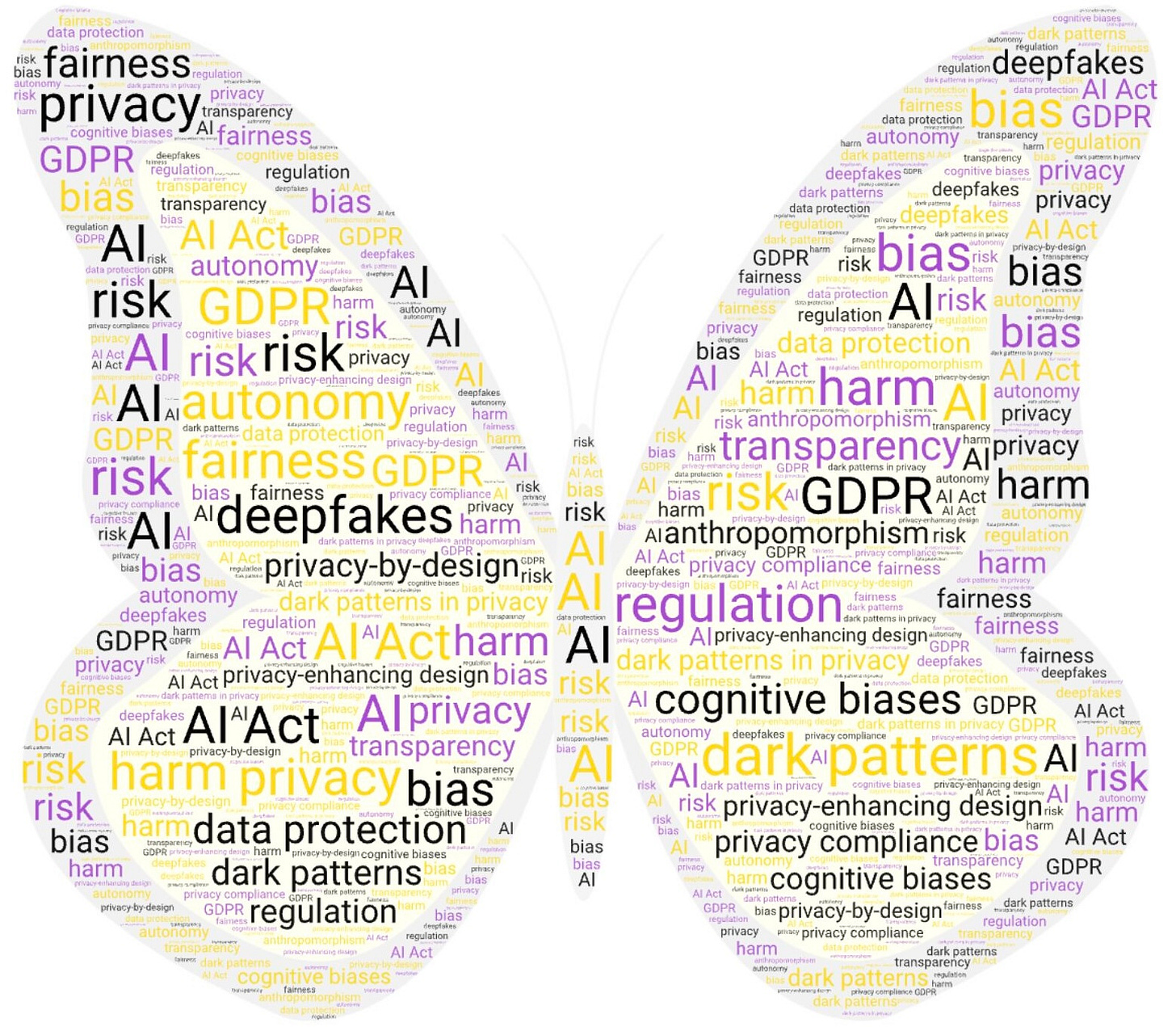

🦋 4-week Privacy, Tech & AI Bootcamp

Are you a privacy professional? You can't miss our 4-week Privacy, Tech & AI Bootcamp. Read this:

the Bootcamp includes four live sessions with me (one hour per week), additional reading material (around one hour per week), quizzes, 8 CPE credits pre-approved by the IAPP, and a course certificate;

among the topics I will cover are: dark patterns in privacy, cognitive biases, privacy-enhancing design, privacy advocacy, AI risks and harms, privacy issues in AI, dark patterns in AI, AI regulation, and more. You can check out the full program at the link below;

learning with live sessions and with peers from around the world is usually rewarding for most participants; it also helps them stay motivated to pay attention, read the extra material, and continue learning afterward;

with the proposed AI Act being finalized, AI is one of the most important topics for 2024, including for privacy professionals, who will have to tackle AI issues and integrate the intersection of AI and data protection into their compliance routines. In the Bootcamp, I extensively cover privacy issues in AI, dark patterns in AI, reports, regulatory efforts, lawsuits, and cases;

dark patterns are also a hot topic both in the context of privacy and beyond, with the DSA and the AI Act mentioning online manipulation. I cover the topic thoroughly, including theory, practice, and examples of how to identify and reverse them;

the first cohort starts on January 31 (live sessions on Wednesdays at 1pm ET/6pm UK); those who miss a session can watch the recording later;

most participants manage to get reimbursed by their organizations; if you are self-employed, a student, or if your organization does not offer reimbursement for training and development, write to me;

this is our second 4-week training program aimed at privacy upskilling (the first one was in April, focused exclusively on dark patterns, and sold out).

*The butterfly: I've always liked butterflies because I see them as free and graceful. Learning is a form of freedom, so this is the symbol I chose for the Bootcamp. Inside the butterfly, you can see some of the key topics I will cover during these four weeks.

I hope to see you there! Read more about the program and save your spot here.

🎤 Privacy & AI live talks

On January 8, I will discuss with three leading privacy experts, Odia Kagan (Fox Rothschild), Nia Cross Castelly (Checks / Google), and Gal Ringel (MineOS), what to expect and how to get ready for 2024's privacy challenges. We'll talk about:

the hottest privacy topics for 2024 and why they will be important/impactful;

the most challenging privacy issues for businesses, and why;

how businesses can prepare in advance for these challenges and the most important practical measures organizations and professionals should be thinking about right now;

tips for privacy professionals who want to navigate 2024 successfully.

This is a free event, and if you are a privacy professional, you cannot miss it! Register using this link to be notified when it starts, participate live, comment in the chat, and receive the recording to re-watch.

*Every month, I host a live conversation with a global expert. I've spoken with Max Schrems, Dr. Ann Cavoukian, Prof. Daniel Solove, and various others. Access the recordings on my YouTube channel or podcast.

🚨 New article on legal aspects of AI-based automated decision-making

The article "Between Humans and Machines: Judicial Interpretation of the Automated Decision-Making Practices in the EU" was written by Dr. Sümeyye Elif Biber and published online on December 12, 2023. It discusses legal aspects of AI-based automated decision-making (ADM), especially the judiciary's role in interpreting and delimiting the human role in these decisions.

A reminder that Art. 22 of the GDPR says that:

"The data subject shall have the right not to be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her."

It also establishes that the main rule (above) shall not apply if the decision:

"is necessary for entering into, or performance of, a contract between the data subject and a data controller;" or "is authorised by Union or Member State law to which the controller is subject and which also lays down suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests;" or "is based on the data subject’s explicit consent."

With that context in mind, here are two interesting quotes from the article:

"(...) the judging framework of AI-driven ADM systems is built on the basis of actions and behaviours not attributable to a single individual. This situation means that the outcome of an ADM system examined above will never be a personal decision but a social one that encompasses not only a single social community but diverse communities. Therefore, it is necessary to protect not only individual interests but also collective interests. This situation proves that such systems have the potential to produce significant consequences for individuals, minorities, and society in general." (page 14)

-

"From a methodological point of view, the judges' inquiry regarding automation and meaningful human participation has presented an interactional legal ground where the relevant normative actors interact with one another. The courts have considered the normative aspect of automation, the relevant provisions of the ECHR, the GDPR and the relevant domestic legislation to clarify the roles of automation and humans in decision-making processes. In this sense, their reasoning has not been limited to scope of national legislation, but also included supranational and international legal provisions." (page 36)

This is one more aspect of the intersection between AI and data protection, especially in the context of Article 22 of the GDPR. I've been covering the topic weekly in this newsletter; to dive deeper, register for the Privacy, Tech & AI Bootcamp.

Wishing you happy holidays and a great reading season!

All the best,

Luiza