The State of the AI Race

A series of new lawsuits filed against AI companies in November 2025 gives us a glimpse into the current state of the AI race and the emerging tensions that might shape the future of AI | Edition #253

Hi everyone, Luiza Jarovsky, PhD, here. Welcome to our 253rd edition, trusted by more than 86,800 subscribers worldwide.

As the old internet dies, polluted by low-quality AI-generated content, you can always find raw, pioneering, human-made thought leadership here. Thank you for helping me make this a leading publication in the field!

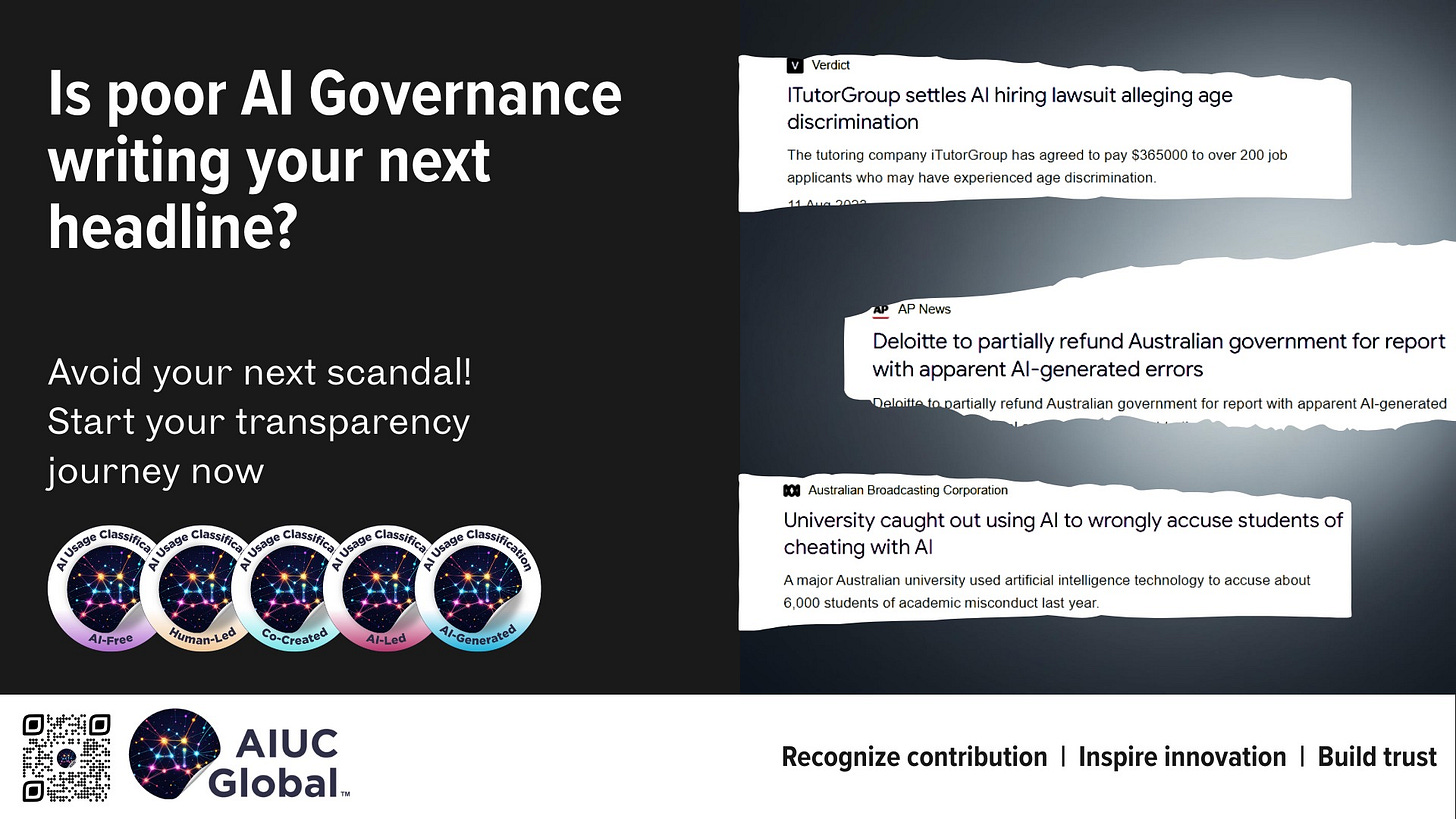

Before we start, a special thanks to AIUC Global, this edition’s sponsor:

Start using more nuanced language and empower your team to openly discuss risks, avoiding negative headlines and encouraging innovation opportunities. Build accountability from the start! Adopt the new AI Usage Classifications today. Claim your 20% discount with the coupon code LUIZA20.

AI governance is on fire this month, but not in the way many of us would like. There are so many new legal and ethical challenges that it is sometimes difficult to keep up with and write about all of them in depth here.

Since the beginning of the generative AI wave, lawsuits have been a thermometer and a source of information to help us understand the main social, ethical, cultural, and legal tensions behind AI-powered technological disruption, and this time is no different.

In the AI copyright realm, important legal cases have recently reached a conclusion, such as the ones involving Meta, Anthropic, Stability AI, OpenAI, and Udio (with dozens of others still to be decided). In other areas, however, things seem to have become much more heated in the past few weeks.

In November alone, there were seven new lawsuits against OpenAI alleging wrongful death, assisted suicide, involuntary manslaughter, and other product liability, consumer protection, and negligence claims.

The lawsuits reflect the fact that the lack of proper AI chatbot regulation is incompatible with the risks chatbots pose, especially when children and vulnerable groups are involved; when user-chatbot interactions are long, intimate, and intense; and when companies want to make their chatbots as sycophantic and enticing as possible to retain users and “win” the AI race.

In the past few days, there were three new lawsuits against AI companies, novel in their nature and allegations, that highlight some of the emerging tensions that might shape the future of AI.

Here are the main arguments of these three new lawsuits and why everyone in the field of AI should be aware of them:

The first is a privacy lawsuit filed against Google by a U.S.-based user, Thomas Thele. The main allegation is that around October 10 of this year:

“Google secretly turned on Gemini for all its users’ Gmail, Chat, and Meet accounts, enabling AI to track its users’ private communications contained in those platforms without the users’ knowledge or consent.”

Coincidentally, a few days ago, I wrote about Gmail’s “smart features” settings and how to disable them (I discovered the lawsuit after my post).

The post went viral on LinkedIn and on X. Importantly, although Google says that the feature is “OFF” by default in some areas (including the EU and the UK), many commenters said that they were based in these areas and that the feature was ON even though they had never activated it.

Here is why this lawsuit matters more than it may seem:
