🎭 The OpenAI drama

Plus: AGI, AI regulation, and AI governance

👋 Hi, Luiza Jarovsky here. Welcome to the 80th edition of this newsletter! Thank you to 80,000+ followers on various platforms and to the paid subscribers who support my work. Read about my work, invite me to speak, or just say hi here.

✍️ This newsletter is fully written by a human (me), and illustrations are AI-generated.

A special thanks to the Center for Financial Inclusion, this edition’s sponsor:

When designing new financial products, how can teams ensure they are taking into account the user’s privacy needs and concerns? How can privacy be reframed as an essential tenet during ideation and design, rather than seen as a compliance exercise? The Center for Financial Inclusion’s new Privacy as Product Playbook helps designers of digital financial services bring privacy needs front and center. Read it here.

📢 To become a newsletter sponsor, get in touch.

🎭 The OpenAI drama

If you have checked any social network or news channel in recent days, you probably already know that Sam Altman, OpenAI's CEO, was suddenly fired. But days later, he was reinstated. Meanwhile, OpenAI's employees were posting heart emojis on X (Twitter), and rumors of a secret AGI project were circulating. The drama continues. What is going on?

I'll summarize it in quick bullets below:

November 17

Sam Altman is fired. On OpenAI's website, you can read: “Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.”

Mira Murati, the company's CTO, becomes interim CEO

OpenAI's co-founder Greg Brockman quits

November 19

OpenAI's investors push to reinstate Sam Altman as the company's CEO

November 20

Emmett Shear, Twitch's co-founder, becomes OpenAI's interim CEO

Microsoft hires Sam Altman and Greg Brockman to join an advanced AI research team

Hundreds of OpenAI employees threaten to quit unless the board resigns and reinstates Altman and Brockman

November 23

Sam Altman is back as the CEO of OpenAI

Even after it all looked “solved,” there is, of course, still the question of why he was fired in the first place. Reuters reported what may be a piece of the puzzle:

“Ahead of OpenAI CEO Sam Altman’s four days in exile, several staff researchers wrote a letter to the board of directors warning of a powerful artificial intelligence discovery that they said could threaten humanity”

The letter reportedly concerned “Q*” (pronounced Q-Star), a mysterious project that sources have cited as one of the reasons for Sam Altman's firing, as it was reportedly a breakthrough that could potentially lead to artificial general intelligence (AGI). More on that in the last commentary of this newsletter.

-

From my point of view, what matters to all of us, regardless of gossip, rumors, firing, and reinstatement, is that:

the most influential AI company in the world is interested in moving fast and breaking things (as most companies are);

governance, safety concerns, and guardrails are not part of the core strategy but necessary hassles to stay in business;

the company's internal governance systems seem more fragile than they make it appear (not ideal in the context of AI development);

the critical discussions that happen every day around the world, including the ones we engage in every week in this newsletter and beyond it, help shape the debate, the narrative, the people, the tech environment, and the future of AI.

Critical thinking, advocacy, and action are more important than ever. As the slogan of this newsletter says: let's keep reimagining technology in light of privacy, transparency, and fairness.

👾 Grok enters the room

As the OpenAI drama unfolded, Elon Musk opportunely announced that Grok, his AI chatbot, will be integrated into X (Twitter) next week. What you need to know:

Grok will be an in-app feature for X's Premium+ subscribers;

Grok is the first AI chatbot developed by Musk's AI company xAI;

according to xAI's website: "Grok is an AI modeled after the Hitchhiker’s Guide to the Galaxy, so intended to answer almost anything and, far harder, even suggest what questions to ask! Grok is designed to answer questions with a bit of wit and has a rebellious streak, so please don’t use it if you hate humor!";

Grok seems to have been developed with weaker guardrails than most AI chatbots. For example, it explains how to produce illegal drugs "for educational purposes" (you can see a screenshot on Elon Musk's X account);

xAI's website also states: "a unique and fundamental advantage of Grok is that it has real-time knowledge of the world via the X platform. It will also answer spicy questions that are rejected by most other AI systems." It is unclear whether "spicy questions" also means being lenient with hate speech and other forms of harmful content;

with the integration into X, we'll soon have more examples to check;

launching it during the OpenAI drama is an interesting strategy and shows that another rivalry between tech giants (OpenAI+Microsoft vs. X+xAI+other Musk companies) is only starting.

Below is a mockup of what its interface will look like, according to this screenshot from Nima Owji's X account:

The integration with X (Twitter) seems worrying to me for two main reasons:

social networks already have an inherent fact-checking problem and, as I've discussed in recent weeks, disinformation and misinformation fly freely and are usually amplified there;

if Grok gets most of its “updated” information from X, AI hallucinations will be compounded by social media disinformation, pushing internet content even further from the truth.

We will have wild years ahead.

🫶 Enjoying the newsletter?

Refer it to your friends. It only takes 15 seconds (writing it takes me 15 hours). When your friends subscribe, you get free access to the paid membership.

📎 Job opportunities

Are you looking for a job in privacy? Transitioning to AI? Check out hundreds of opportunities on our global privacy job board and AI job board. Good luck!

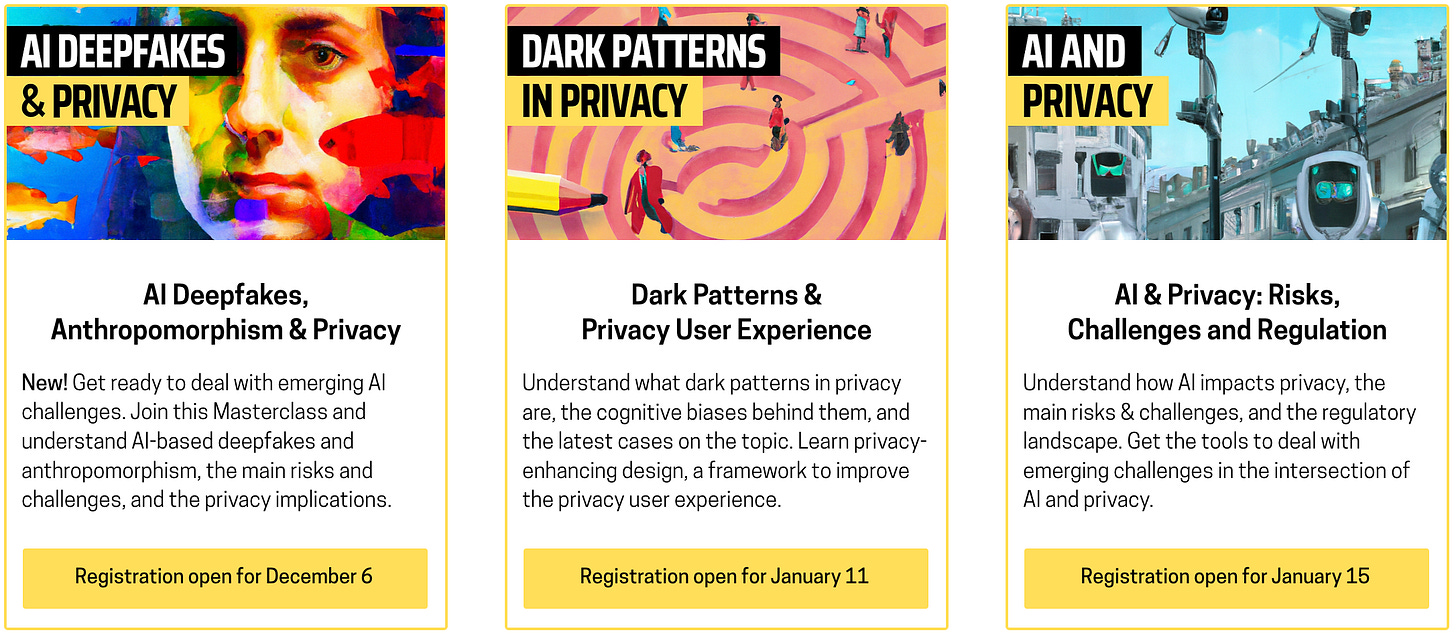

🎓 Training programs

More than 620 professionals have attended our training programs. Each program is 90 minutes long and led by me, and participants receive additional reading material, 1.5 pre-approved IAPP credits, and a certificate. The programs help you stay up to date and upskill: read more and save your spot.

📚 AI Book Club

250+ people have already registered for our AI Book Club.

In the 1st meeting on December 14, we'll discuss "Atlas of AI" by Kate Crawford;

In the 2nd meeting on January 18, we'll discuss "The Coming Wave: Technology, Power, and the Twenty-first Century's Greatest Dilemma" by Mustafa Suleyman & Michael Bhaskar.

There will be book commentators, and the goal is to have a critical discussion on AI-related challenges, narratives, and perspectives.

Have you read these books? Would you like to read and discuss them? Join the book club!

🖥️ Privacy & AI in-depth

Every month, I host a live conversation with a global expert. I've spoken with Max Schrems, Dr. Ann Cavoukian, Prof. Daniel Solove, and various others. Access the recordings on my YouTube channel or podcast.

👾 AGI, AI regulation, and AI governance

Part of the OpenAI drama, as I described above, was related to the mysterious project Q* and the conflicting interests around it. Today I would like to discuss the topic, focusing on AI governance and privacy by design.
