The AI hype is dangerous for privacy

Plus: privacy & AI intersections

👋 Hi, Luiza Jarovsky here. Read about my work, invite me to speak, tell me what you've been working on, or just say hi here.

🔥 AI, ChatGPT, and legal liability

Given the risk of legal liability (for example, the lawyer who used fake ChatGPT-generated legal cases), ChatGPT should be required to explicitly tell users to fact-check information before using it. In my opinion, the image above (ChatGPT's main user interface), especially the expression "May occasionally generate incorrect information," does not properly inform users that ChatGPT's output is information digested from the internet, with no specific author and no one responsible for the content.

This is a new product with new types of risks to users. People are not used to this "authorless and possibly invented" information, especially given: a) the massive popularity of the technology; b) the fact that it is being integrated into Microsoft's well-established products; and c) the fact that Sam Altman, OpenAI's CEO, publicly mentions that he uses ChatGPT as a replacement for Wikipedia, that is, as a research tool. There is also automation bias, "an over-reliance on automated aids and decision support systems," so people will tend to believe that the information provided by ChatGPT is true.

For those who say that search engines do not have these warnings: the author of the page behind each search result is responsible for the content it provides. In the context of ChatGPT, this responsibility does not exist (at least not yet), and the risk to the user is different. Given the new risks and still unprepared users, consumer law/data protection law should require ChatGPT to act in a much more proactive and protective way, being upfront about risks and what users can do to protect themselves.

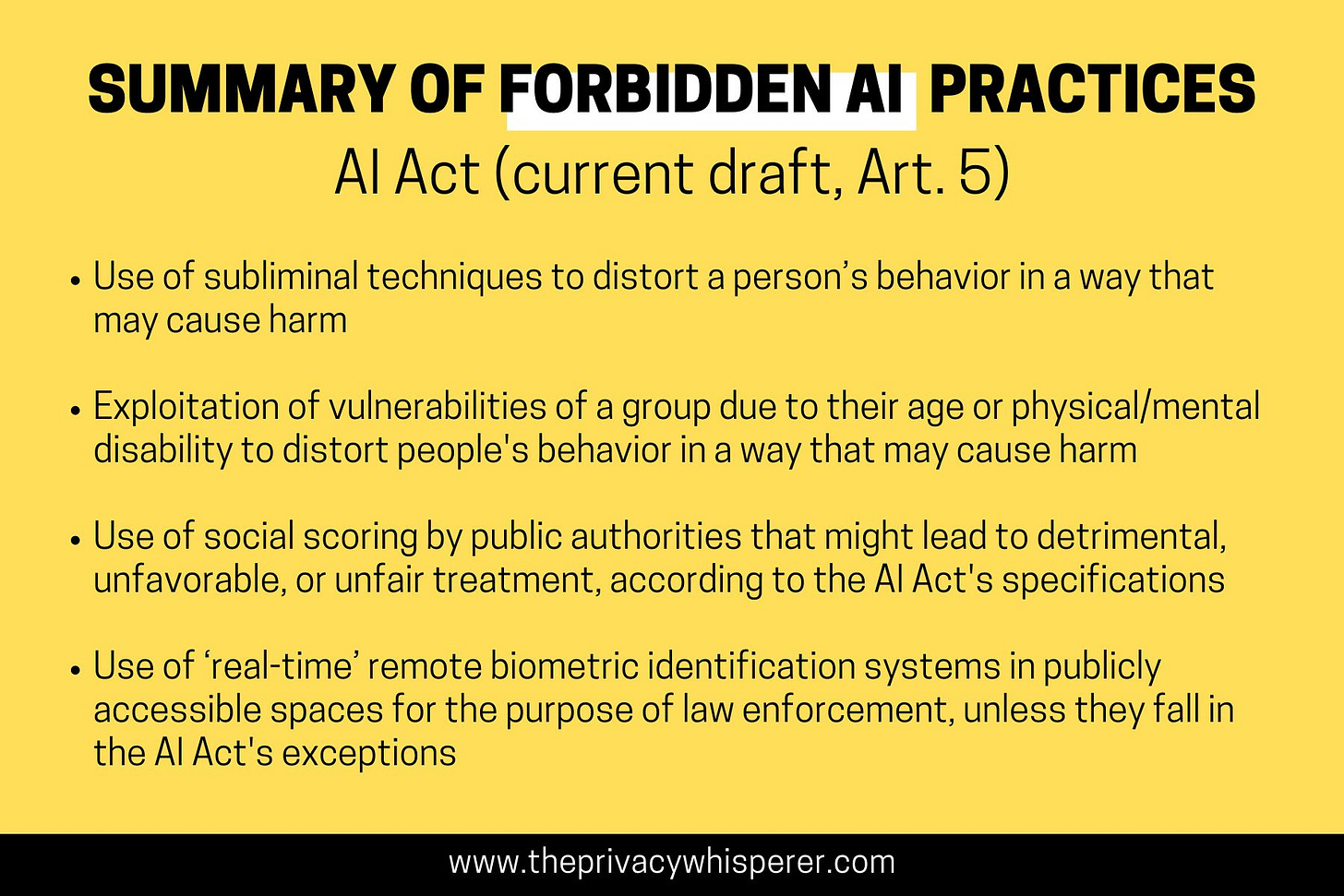

🔥 The AI Act and tomorrow's vote

The European Union's AI Act takes a risk-based approach with four levels of risk: unacceptable risk (forbidden AI practices), high risk, limited risk, and minimal risk. The image above lists the banned practices (in the current draft of the regulation); you can read more about them in art. 5 of the AI Act. An EU Parliament voting session on the AI Act is expected tomorrow (June 14), so this might not be the final version of the legislation.

Regarding tomorrow's voting session, Amnesty International's Mher Hakobyan said: “There is a risk the European Parliament may upend considerable human rights protections reached during the committee vote on May 11, opening the door for the use of technologies which are in direct conflict with international human rights law.”

As I have mentioned a few times in this newsletter, in my view, powerful AI players (such as Google and OpenAI) are probably trying to influence this upcoming vote so that technologies such as foundation models are regulated more lightly. For example, Google Bard is currently not available in any EU country or in Canada, which might be a “sign” of Google's current position on the AI Act. Also, according to Politico: “Google will have to postpone starting its artificial intelligence chatbot Bard in the European Union after its main data regulator in the bloc raised privacy concerns.” Similarly, Sam Altman, OpenAI's CEO, recently said in relation to the AI Act: “we will try to comply, but if we can’t comply, we will cease operating [in the EU].”

It looks like tomorrow's voting session at the EU Parliament could shake up the “AI regulation” debate, and I am waiting to hear the outcomes.

🔥 Privacy & AI intersections

In the last few months, I have been conducting live conversations with global privacy experts about the intersections of privacy and AI. I spoke with Dr. Ann Cavoukian about Privacy by Design in the age of AI; with Prof. Nita Farahany about brain privacy and cognitive liberty in the context of AI-based neurotechnology advances; with Dr. Gabriela Zanfir-Fortuna about AI regulation in the EU and US; and last week with Prof. Daniel Solove about privacy harms in the context of AI. These conversations were truly fascinating, and I learned a lot from each of them. They are all publicly available on my YouTube channel and podcast, and I highly recommend you watch or listen to them if you want valuable, up-to-date perspectives on emerging challenges around privacy & AI. Tomorrow, I will announce my July guest on LinkedIn. Stay tuned.

🔥 The AI hype is dangerous for privacy

The AI hype cycle has taken over the world. After the crypto, NFT, and metaverse hype cycles, ChatGPT inaugurated the latest cycle last year, and it has some unique characteristics and specific risks. First, different from
