👠 Scarlett Johansson vs deepfakes

Plus: screenless AI phones and data protection issues

🙌 Hi, Luiza Jarovsky here. Welcome to the 78th edition of this newsletter, and thank you to 83,000+ followers on various platforms. Visit my personal page to read about my work, invite me to speak, become a newsletter sponsor, or just say hi.

🎓 Launching today: our new Masterclass AI Deepfakes, Anthropomorphism, and Privacy Implications. Save your spot!

✍️ This newsletter is fully written by a human (me), and illustrations are AI-generated.

A special thanks to MineOS, this week's newsletter sponsor:

Without an AI code of conduct, managing how your employees use AI tools can be a data privacy nightmare. Because the AI boom is just starting, we’re still in uncharted territory for how a code of conduct should work and what it should look like. MineOS brings you key strategies to ensure ethical and efficient AI deployment at your business while maximizing its power, so your company can balance AI innovations and data governance principles. See what an AI Code of Conduct should look like in MineOS’s recent article.

👠 Scarlett Johansson vs deepfakes

Scarlett Johansson is the latest public figure impacted by deepfakes.

What happened: an app called Lisa AI: 90s Yearbook & Avatar posted a 22-second ad on X featuring images of the actress and an AI-generated voice similar to hers to promote the app (the ad has since been deleted).

What her lawyers said: her representatives told Variety that Johansson “is not a spokesperson for the app,” and her lawyer stated, “we do not take these things lightly. Per our usual course of action in these circumstances, we will deal with it with all legal remedies that we will have.” According to Variety, she has taken legal action.

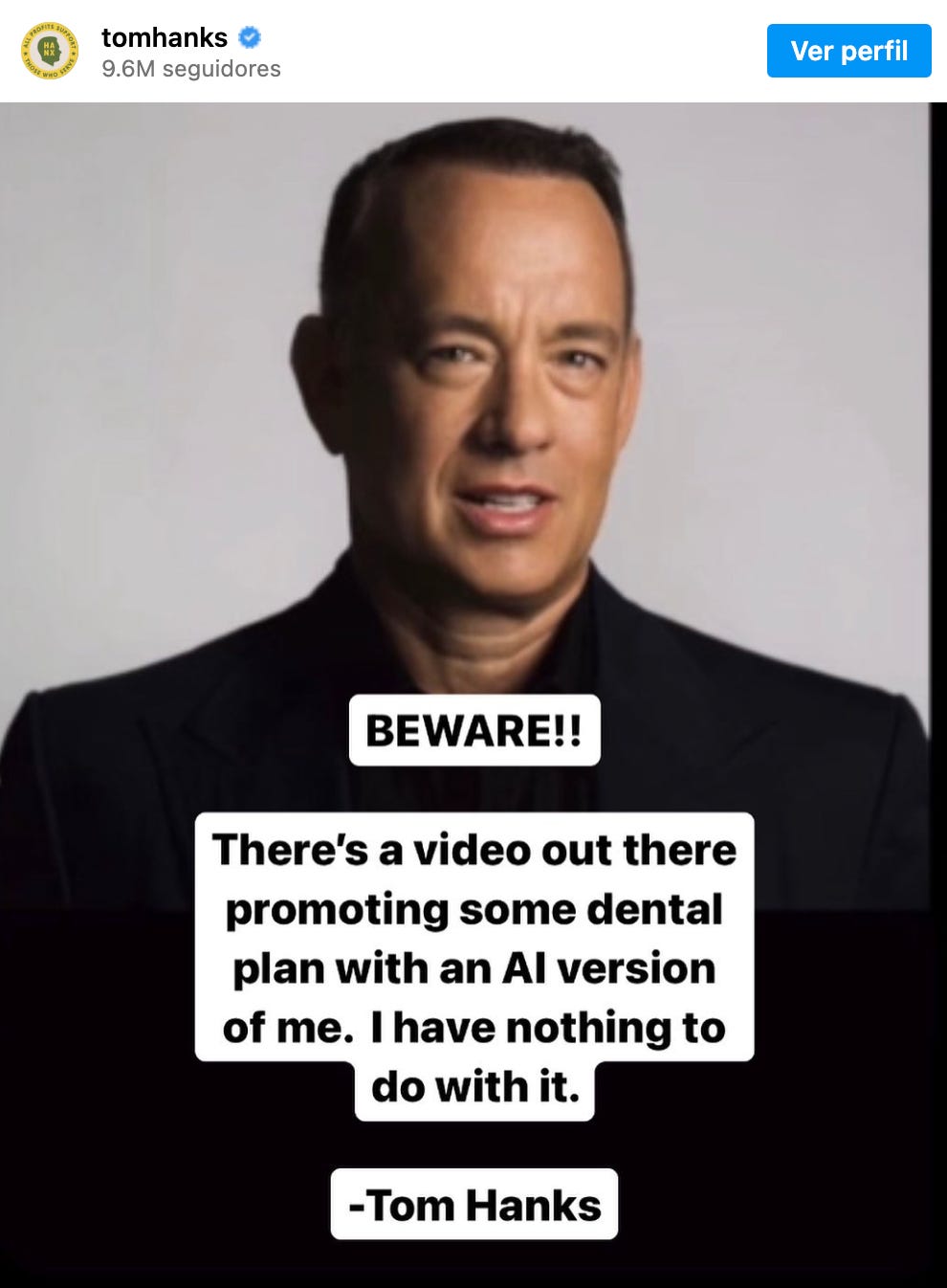

Other celebrities have been targeted: recently, it happened with Tom Hanks, as an AI version of the actor was used to promote a dental plan. He then posted the image below on Instagram:

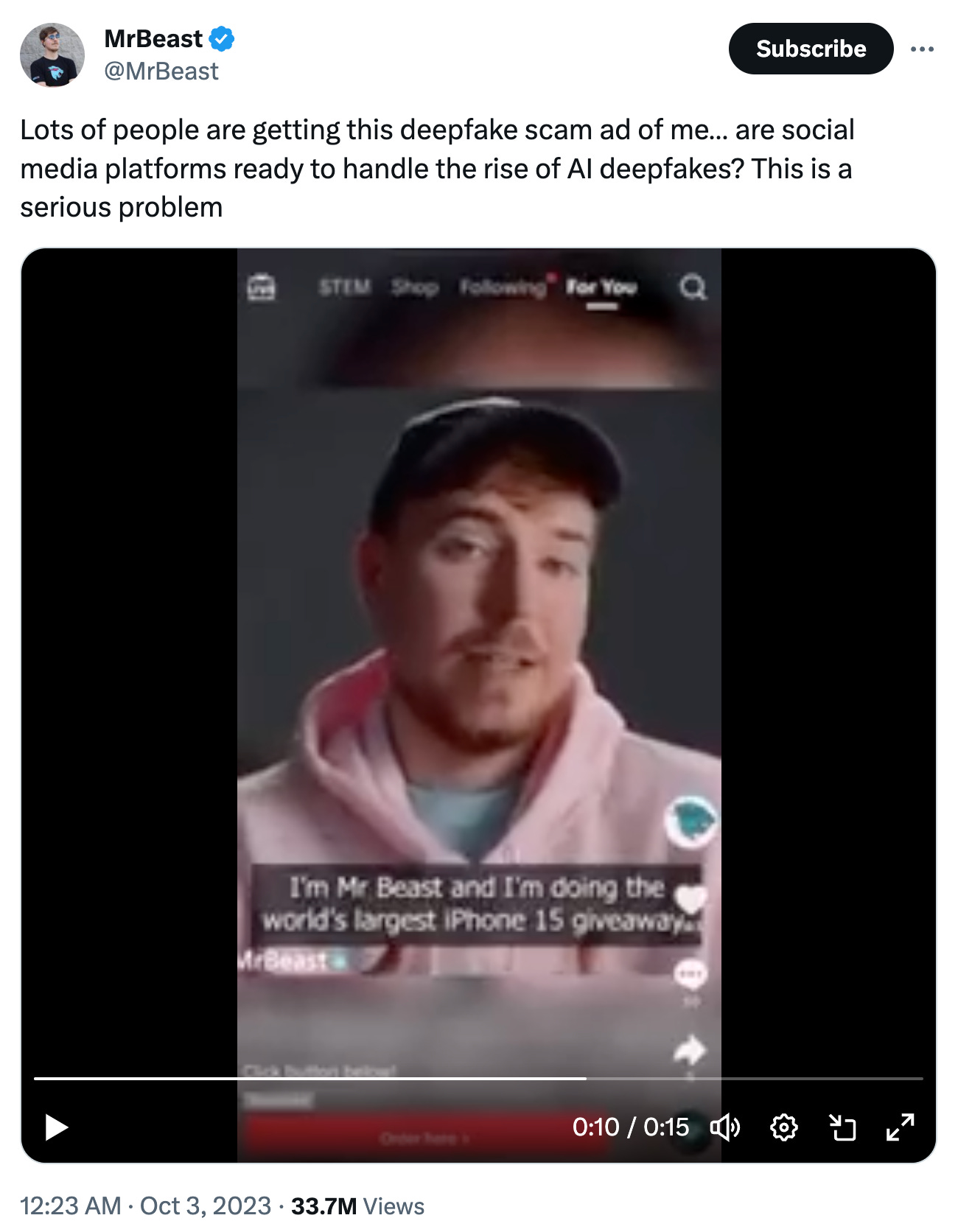

It also happened to MrBeast: an AI deepfake using his image announced a fake iPhone giveaway.

The problem with deepfakes: this is such an important topic, and I've been constantly writing about deepfakes in this newsletter. When we lose the ability to distinguish fake from real, various forms of harm can emerge. From financial and psychological scams to disinformation, non-consensual sharing of intimate imagery, election disruption, real-world violence, and diplomatic conflicts, there are so many potential negative consequences that emerge from deepfakes.

It's not a new phenomenon: deepfakes are not new per se, but recent developments in AI technology have made AI content look more real and harder to detect. Moreover, the current AI wave (led by generative AI) has made deepfakes cheaper to produce and widely available, so the problem has gotten worse. We are seeing the results on social media: the flood of AI-based disinformation is influencing public opinion on ongoing conflicts, causing real-world hate, violence, and harm.

The 'Age of Fake' has arrived: people should be much more careful when consuming online content. Until proven otherwise, you should assume online images and videos are potentially fake.

Dive deeper: if you want to understand the broader privacy implications of AI deepfakes, register for my 90-minute Masterclass: AI Deepfakes, Anthropomorphism, and Privacy Implications. Most participants get reimbursed by their companies or organize group sessions: get in touch.

❌ AI chatbots cannot negotiate contracts

Unpopular opinion: AI chatbots cannot negotiate legal contracts and cannot replace lawyers, contrary to what recent news reports claim.

Negotiating contracts is also about:

- understanding and developing a business strategy;

- building relationships between the people involved;

- having a more comprehensive view of risks, liability, and limitations of your business and the other side's business;

- shaping short, medium, and long-term business strategies;

- designing better processes and communication between internal stakeholders;

- building and strengthening the legal strategy;

- and more.

An AI chatbot can parrot human language to comment on and modify individual contractual clauses and exchange emails to "negotiate" those modifications.

However, a lawyer would have to double-check each modification for errors and discrepancies, verify coherence with the rest of the legal work, and be able to incorporate each step of the legal reasoning into the broader legal and business strategy.

Also, a lawyer would have to constantly monitor and shape the internal parameters and the "default" behavior of the AI system in order to curb negligence, errors, biases, and bugs.

It would probably be more time-efficient and functional if the lawyer - who will have to defend that position in other iterations - negotiated the contract personally.

So, for now, AI chatbots cannot negotiate contracts and cannot replace lawyers.

📌 Job opportunities

Are you looking for a job in privacy? Starting a data protection career? Check out hundreds of opportunities on our global privacy careers page. Quick tips:

- Make sure to check remote work in other locations (not only the place where you live) as there might be suitable opportunities;

- When you are on a job search website, make sure to check multiple keywords that are directly connected to the job you are looking for (e.g., privacy lawyer, data privacy analyst);

- Our list has many links which are updated daily. Make sure to bookmark our job board and check it out at least once a week;

- Many job search pages have the option to activate alerts. Make sure to turn them on for all the jobs that might interest you;

- When you find a suitable job, make sure to check if any of your LinkedIn connections work there and could provide an internal recommendation or referral - this is usually very helpful;

- As always, when sending your CV, make sure it's well written and short, uses clear language, and communicates your skills in a straightforward way (there are many websites with tips on how to do that);

- Don't give up. The opportunity you are looking for might be one click away. Use the job-hunting phase to invest in personal and professional growth.

Wishing you good luck!

🎓 LAUNCHING TODAY: NEW MASTERCLASS

Today we are officially launching our new Masterclass: AI Deepfakes, Anthropomorphism, and Privacy Implications. Join us on December 6 for this unique 90-minute live Masterclass that will help you get ahead of emerging AI challenges. You will access additional material, a quiz, and a certificate. Places are limited: save your spot. Most participants get reimbursed by their companies or organize group training sessions: get in touch and check all our Masterclasses.

🎥 Identifying Dark Patterns in Privacy

I was invited to give a lightning talk about 'Identifying Dark Patterns' at the 45th Global Privacy Assembly in Bermuda, where data protection & privacy commissioners from around the world met. Watch the 5-minute recording, and if you want to dive deeper into the topic, register for my upcoming Masterclass on Dark Patterns and Privacy User Experience on January 11 or book a group training session.

📖 Join our AI Book Club

We are now reading “Atlas of AI” by Kate Crawford, and the next AI book club meeting will be on December 14, with six book commentators. To participate, register here.

🖥️ Privacy & AI in-depth

On November 28, I will talk with Prof. Ryan Calo about Humans, Robots, and Vulnerability in the Age of AI. We'll discuss his recent paper with Daniella DiPaola, Socio-Digital Vulnerability, and other topics in the context of Prof. Calo's scholarship. To join the session, register here. Every month, I host a live conversation with a global expert - I've spoken with Max Schrems, Dr. Ann Cavoukian, Prof. Daniel Solove, and various others. Access the recordings on my YouTube channel or podcast.

📱 Screenless AI phones and data protection issues

The company Humane has just launched a screenless wearable device called AI Pin, which aims to replace your smartphone. Among various critical issues we can raise about it, data protection consent has caught my attention:
