👋 Hi, Luiza Jarovsky here. Welcome to the 89th edition of this newsletter on Privacy, Tech & AI, and thanks to 18,100+ email subscribers and 62,000+ followers on LinkedIn, X, and YouTube, who have joined me on this journey.

A special thanks to Didomi, this edition's sponsor. Check out their webinar:

Get a better understanding of Google Consent Mode v2 with Didomi’s upcoming webinar on February 15th at 11am ET (5pm CET), hosted by Betsy Annen and Sabrina Bouguessa from Google, Florian Obligis from M13H, and Jeff Wheeler from Didomi. The speakers will provide practical information and an action plan to help you navigate these changes seamlessly. Ensure your company is ready and equipped to effectively implement Google's Consent Mode v2. Secure your spot

✨ Saying "no, thanks" to AI can be empowering

Every day that passes, I see more and more AI-based features, and I have been thinking about how futile some of them are, how they can negatively impact our autonomy and personal growth, and the importance of saying no to them.

AI on LinkedIn

Yesterday, for example, I went on LinkedIn to edit my profile headline, and it offered to write it for me with AI:

I was a bit surprised by this functionality and thought about why anyone would use AI to write a 2-line personal headline. Shouldn't the headline have the person's style and touch? Also, isn't it important, from a personal and a professional perspective, to think about how we want to introduce ourselves to peers and potential employers? And here, I mean that the thinking itself and the self-reflection that goes with it are important, not only the end result.

In this LinkedIn example, the AI version will be optimized for one of LinkedIn's criteria of a “good headline” based on data from other users. We do not know if it will be optimized to get more profile views, more job offers or more friend requests.

Due to automation bias, my guess is that most people will be happy with the AI-suggested headline and will consider it good. However, because of the opaqueness of AI models, we will not know whether our headline now looks like those of most LinkedIn spammers (who get many profile views from people trying to check their credibility or block them). Also, the headline could sound AI-made, especially to people who know us personally (do we want that?). Lastly, with time, all headlines will look the same: AI-optimized, without the diversity of (sometimes unexpected) personal touches.

Still talking about LinkedIn, I've noticed AI-written comments on my posts. My guess is that people do it because a) they can quickly comment on multiple posts without having to engage with the content (supposedly showing the LinkedIn algorithm that they are active and deserve an algorithmic boost); or b) they want to appear knowledgeable to the author of the post or to their own network. To me, personally, these AI-written comments appear unflattering, lazy, and unprofessional.

Shouldn't we be honest about the topics we care about and know, and the topics we have no understanding of? Isn't the purpose of commenting on LinkedIn - a professional social network - to maintain honest social relationships? Shouldn't we be ourselves when we are interacting socially? Isn't writing our own comments part of what it means to be ourselves? I think that many people are mindlessly jumping on the AI hype train and forgetting to think about how it will impact them personally and how it will appear to others.

AI on Google Maps

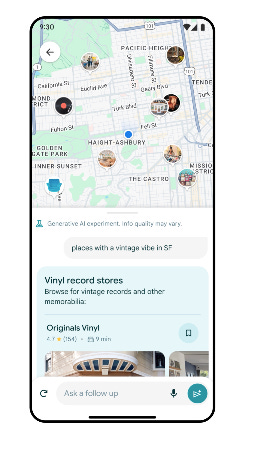

Another example of AI ubiquity is Google's recent announcement that it is integrating generative AI into Google Maps. People will be able to type things such as “places with a vintage vibe in SF.” Here's a screenshot from the announcement:

At first glance, it looks cool. Because of the AI hype, we are now conditioned on an almost unconscious level to see any AI-based feature as cutting-edge.

But if you think about it for a while, why would we let an opaque AI model - whose training we know nothing about - filter results and decide which options fit our preferences and which ones should be shown or not? Search engines do organize and prioritize information, but there is some open notion of “rules to follow” (you can optimize a website using SEO techniques) and positive competition, as popular websites and those that people spend more time browsing will be shown first. This is lost in most AI-based functionalities.

There is also the privacy aspect. Why let companies know so much about what you are looking for (beyond what they already know), to the point of knowing even your current “vibe”? Shouldn't we keep at least a bit of ourselves private?

Asking for recommendations from an AI chatbot is like asking the whole internet as a passive and voiceless collective. Shouldn't there be some healthy space for social interaction? My guess is that your real-life friends and family know more about your personal preferences and what you mean by “vintage vibe” than an AI chatbot. In a hyper-digitalized world, where most people won't meet their neighbors in the public square like in the past, this quick online message asking for a recommendation might be what was missing to rekindle an old friendship. But perhaps technology is destiny, and we should choose an AI companion instead.

Autonomy and choice

AI is here to stay, and it's everywhere. In some instances, it's imposed on us, and we do not have a choice.

This happens, for example, in the context of policing or border control, or when we are applying for insurance, housing, a job, or any type of government benefit. In those cases, when AI is deployed, the debate on whether it's fair or even necessary takes place at the regulatory and policy level. As individuals, we rarely have a choice.

Also, in commercial contexts, we are already shaped by many types of AI-based algorithms - and we do not have full control over them either. One of these categories is social media algorithms, which are often AI-based. They have changed the type of content we post online, as well as the content we consume. They have changed how we write, read, and think, how it is socially acceptable to communicate with others online, what type of interactions we have online, and what social relationships mean, online and offline. We have partial control in these cases, as we can choose to avoid these platforms.

But there is at least one category of AI that still leaves us plenty of autonomy to say “no, thanks” whenever we want to keep the experience exclusively human: optional AI functionalities added to enhance a product, frequently marketed as efficiency-enhancers.

I'm not advocating here for a ban on AI or suggesting that the most authentic way will always be AI-free. But being human has a lot to do with having choices and having some space to understand and express who we are.

My final take on this is that from many perspectives, including personal growth, interpersonal connection, self-awareness, and critical thinking, AI tools can potentially set you back, regardless of what their developers promise. Saying “no, thanks” to AI can be empowering.

🔥 Essential privacy, tech & AI resources (free):

1. Join our AI Book Club (650+ members)

2. Check out and subscribe to our privacy and AI job boards

3. Sign up for this week's live session on online manipulation (700+ attendees)

4. Watch or listen to my discussions with global experts on privacy & AI

5. Read my daily posts about privacy, tech & AI on LinkedIn and on X

🦋 Aim for excellence: join our 4-week Bootcamp

Learn with peers and advance your career: join our 4-week Privacy, Tech & AI Bootcamp and obtain tools to deal with the main regulatory and technological challenges. This comprehensive training includes 4 live sessions, self-study material, quizzes, 8 CPE credits (pre-approved by the IAPP), and a certificate.

We designed this Bootcamp especially for those who want to go beyond the basics and focus on critical thinking, growth, and leadership. Check out the full program, choose a cohort, and register here (limited seats).

🔥 AI Briefing

Here's our curation of the most important AI trends and news this week: