📢 New EU Regulation Impacting AI

AI Governance Must-Reads | Edition #150

👋 Hi, Luiza Jarovsky here. Welcome to the 150th (!) edition of this newsletter on the latest developments in AI policy, compliance & regulation, read by 39,900+ subscribers in 155+ countries. I hope you enjoy reading it as much as I enjoy writing it.

💎 In this week's AI Governance Professional Edition, I’ll explore the challenges of human oversight of AI systems, why some of the EU AI Act's provisions might be ineffective, and what policymakers should keep in mind. Paid subscribers will receive it on Thursday. If you are not a paid subscriber yet, upgrade your subscription to receive two weekly editions (this free newsletter + the AI Governance Professional Edition), access all previous analyses, and stay ahead in the rapidly evolving field of AI governance.

⏰ 7 days left to register: in December, join me for a 3-week intensive AI Governance Training (8 live lessons; 12 hours total), already in its 15th cohort. Join 1,000+ people who have benefited from our programs.

🏷️ Use the coupon code BLFRI2024 and get a special 20% Black Friday discount—valid only until next Monday:

📢 New EU Regulation Impacting AI

The EU shows no signs of slowing down! On November 20, the Cyber Resilience Act was published in the Official Journal of the EU. It will enter into force 20 days after its publication.
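To make the timeline concrete, here is a minimal Python sketch of the date arithmetic. It assumes the standard EU formula that a Regulation enters into force "on the twentieth day following that of its publication," i.e., the publication date plus 20 calendar days. The variable names are illustrative only.

```python
from datetime import date, timedelta

# Publication of the Cyber Resilience Act in the Official Journal of the EU.
published = date(2024, 11, 20)

# Assumption: standard EU wording, "on the twentieth day following that of
# its publication", which means publication date + 20 calendar days.
entry_into_force = published + timedelta(days=20)

print(entry_into_force.isoformat())  # 2024-12-10
```

Running it prints 2024-12-10, i.e., December 10, 2024. Keep in mind that entry into force is distinct from the date on which the obligations start to apply, which comes later.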

Few people have talked about it, but this Regulation focuses on ensuring the cybersecurity of products with digital elements. It also applies to AI, and, alongside the EU AI Act, it might be another regulation to shake up the legal AI ecosystem in the EU. Here's what everyone in AI should know:

╰┈➤ According to the EU Commission, the Cyber Resilience Act (CRA):

"aims to safeguard consumers and businesses buying or using products or software with a digital component. The Act would see inadequate security features become a thing of the past with the introduction of mandatory cybersecurity requirements for manufacturers and retailers of such products, with this protection extending throughout the product lifecycle."

╰┈➤ Its goal is to guarantee:

→ "harmonised rules when bringing to market products or software with a digital component;

→ a framework of cybersecurity requirements governing the planning, design, development and maintenance of such products, with obligations to be met at every stage of the value chain;

→ an obligation to provide duty of care for the entire lifecycle of such products."

╰┈➤ Article 12 covers the intersection with the EU AI Act and establishes:

→ "Without prejudice to the requirements relating to accuracy and robustness set out in Article 15 of Regulation (EU) 2024/1689 [the EU AI Act], products with digital elements which fall within the scope of this Regulation and which are classified as high-risk AI systems pursuant to Article 6 of that Regulation shall be deemed to comply with the cybersecurity requirements set out in Article 15 of that Regulation where:

➤ those products fulfil the essential cybersecurity requirements set out in Part I of Annex I;

➤ the processes put in place by the manufacturer comply with the essential cybersecurity requirements set out in Part II of Annex I; and

➤ the achievement of the level of cybersecurity protection required under Article 15 of Regulation (EU) 2024/1689 is demonstrated in the EU declaration of conformity issued under this Regulation. (...)"
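Because the conditions above are joined by "and," they are cumulative. As a toy illustration only (not legal advice and not an official checklist), the presumption can be written as a boolean check. The class, field, and function names are hypothetical and simply paraphrase the quoted text of Article 12(1).

```python
from dataclasses import dataclass


@dataclass
class Article12Check:
    # Hypothetical fields paraphrasing Article 12(1) CRA (illustration only).
    in_cra_scope: bool                    # product with digital elements within the CRA's scope
    high_risk_under_ai_act_art6: bool     # classified as high-risk AI under Art. 6 AI Act
    product_meets_annex_i_part_i: bool    # essential requirements, Part I of Annex I
    processes_meet_annex_i_part_ii: bool  # manufacturer's processes, Part II of Annex I
    declaration_shows_art15_level: bool   # CRA declaration of conformity demonstrates Art. 15 AI Act level


def presumed_compliant_with_art15_cyber(c: Article12Check) -> bool:
    """All conditions are cumulative: a single False defeats the presumption.

    The presumption covers only the cybersecurity requirements of Art. 15 AI Act;
    accuracy and robustness are left untouched ("without prejudice").
    """
    return all((
        c.in_cra_scope,
        c.high_risk_under_ai_act_art6,
        c.product_meets_annex_i_part_i,
        c.processes_meet_annex_i_part_ii,
        c.declaration_shows_art15_level,
    ))


# Example: everything is in place except the declaration of conformity.
example = Article12Check(True, True, True, True, declaration_shows_art15_level=False)
print(presumed_compliant_with_art15_cyber(example))  # False
```

The point of the sketch is structural: meeting the technical requirements is not enough on its own; the declaration of conformity must also demonstrate the Article 15 level of protection.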

╰┈➤ Another important intersection with the EU AI Act, one that many will miss, is Article 12's second paragraph. It establishes that high-risk AI systems that follow the conformity assessment procedure based on internal control (see Article 43, paragraph 2 of the EU AI Act) will be subject to the conformity assessment procedures established in the Cyber Resilience Act as far as its essential cybersecurity requirements are concerned.

╰┈➤ Like the GDPR and the EU AI Act, it's an EU Regulation and is therefore directly applicable in all EU Member States. Most of its provisions will apply from December 11, 2027; a few articles will apply earlier.

⚙️ Tackling AI-powered Deepfakes

The U.S. National Institute of Standards and Technology (NIST) published the report "Reducing Risks Posed by Synthetic Content," a must-read on measures against AI-powered deepfakes. Here's what you need to know:

╰┈➤ The approaches discussed in the report fall into three main areas:

→ Recording and revealing the provenance of content, including its source and history of changes made to the content;

→ Providing tools to label and identify AI-generated content; and

→ Mitigating the production and dissemination of AI-generated child sexual abuse material (CSAM) and non-consensual intimate imagery (NCII) of real individuals.

╰┈➤ Regarding risks and harms of synthetic content, it states:

"Risks and harms of synthetic content can be influenced by a range of factors. These include the target audience for the content; the context in which content is used or misused; the sophistication of the actor creating and/or disseminating the content; and any social, economic, and health-related (including mental health) costs incurred in association with the creation and/or dissemination of the content. Harms also vary in scope: some are concentrated on particular individuals—such as when CSAM or NCII depict real individuals— while other harms are diffuse across society, such as disinformation that affects a wide array of individuals who consume it. (...)"

"Synthetic content can also carry risks for cybersecurity and fraud. In particular, synthetic images, voices, or video may be used to fool biometric authentication systems or to mislead human recipients into facilitating fraudulent transactions (e.g., via voice cloning)."

╰┈➤ Regarding the most effective techniques:

"Which techniques are most effective will also vary depending on who is using the techniques for what purpose they are using them, and how widely others are using them. Techniques for provenance data tracking, which record the origins and history of digital content, and for detecting such tracked data may be helpful to establish whether content is synthetic or authentic for broad audiences. This does not directly translate to trustworthiness, as authentic content can still be harmful or misleading, but these techniques may reduce some risks by providing greater transparency. Other synthetic content detection techniques, meanwhile, may be more suitable for use by analysts (e.g., in social media platforms or specialized civil society organizations) to determine whether specific content is AI-generated and what responses may be appropriate. (...)”

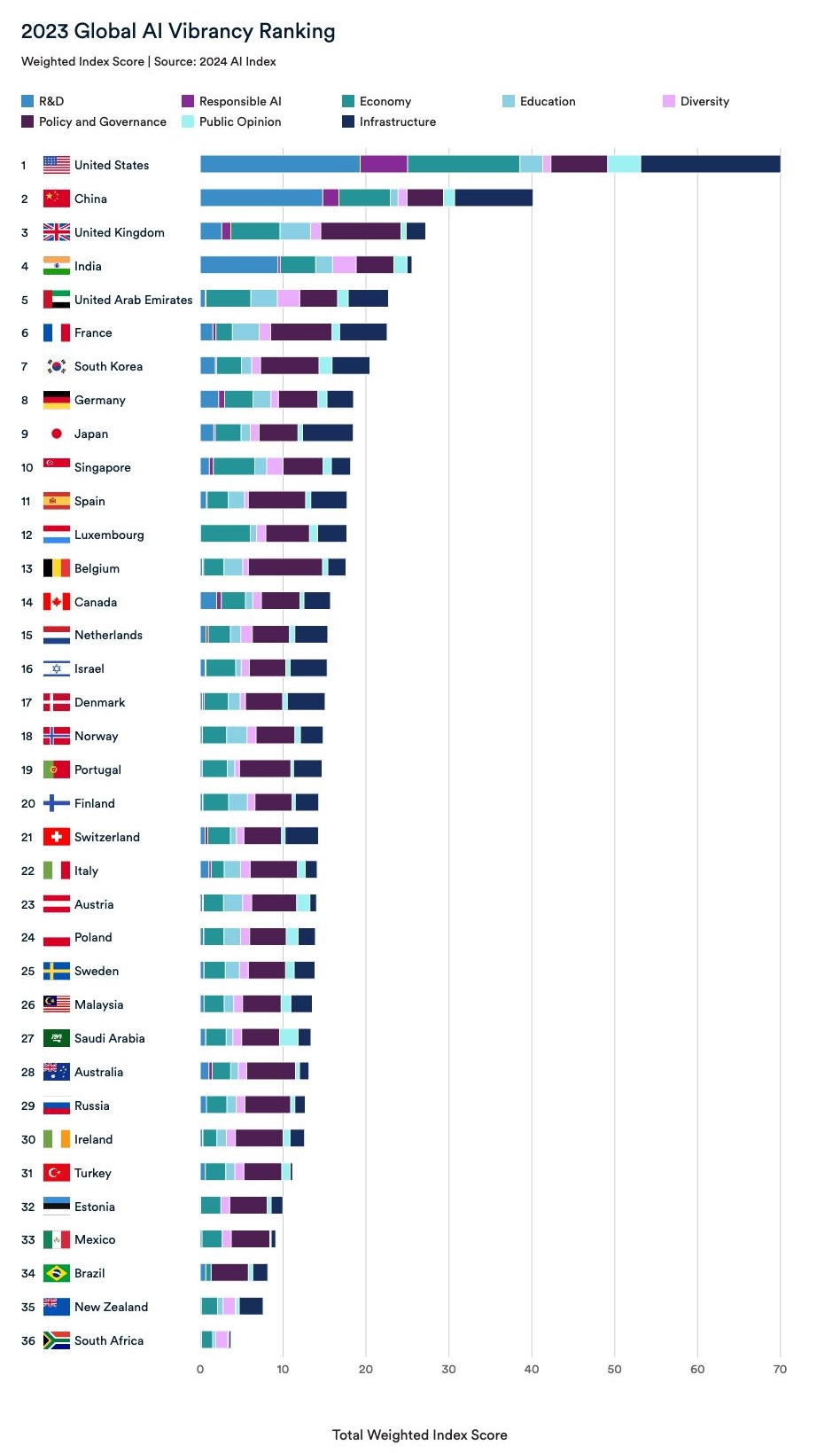
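To illustrate what "recording the origins and history of digital content" can look like in practice, here is a minimal Python sketch of a hash-chained provenance log. It is a toy example built on our own assumptions, not the method NIST prescribes and not an implementation of a standard such as C2PA; the function names, fields, and actors are hypothetical.

```python
import hashlib
import json


def _hash_entry(body: dict) -> str:
    # Deterministic JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def record_step(history: list, content: bytes, action: str, actor: str) -> list:
    """Append a provenance entry that links the content hash to the previous entry."""
    body = {
        "action": action,
        "actor": actor,
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "prev_entry_hash": history[-1]["entry_hash"] if history else None,
    }
    history.append({**body, "entry_hash": _hash_entry(body)})
    return history


def verify(history: list) -> bool:
    """Check that every entry is intact and correctly chained to the one before it."""
    prev = None
    for entry in history:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_entry_hash"] != prev or _hash_entry(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True


history = []
record_step(history, b"original photo bytes", "captured", "camera-app")
record_step(history, b"edited photo bytes", "ai_edit", "image-generator")
print(verify(history))  # True

history[0]["actor"] = "someone-else"  # tamper with the recorded history
print(verify(history))  # False
```

As the report notes, a valid chain only shows where content came from and how it changed; it says nothing about whether the content is truthful. In this toy version, the log is also stored separately from the file, so stripping it would erase the history.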

🥇 Which Countries Are Leading in AI?

The Stanford Institute for Human-Centered Artificial Intelligence (HAI) has released its Global AI Vibrancy Tool and paper, offering the type of data you've been waiting for! Which countries are leading in AI? Find out below (spoiler: the 🇪🇺 EU might not be as far behind as some suggest).

"The Global AI Vibrancy Tool is an interactive visualization that facilitates cross-country comparisons of AI vibrancy across 36 countries, using 42 indicators organized into 8 pillars. It provides a transparent evaluation of each country’s AI standing based on user preferences, identifies key national indicators to guide policy decisions, and highlights centers of AI excellence in both advanced and emerging economies. As one of the most comprehensive indices of AI vibrancy globally, the tool offers valuable insights for understanding and fostering AI development."

⚖️ ANI Sues OpenAI

The Indian news agency Asian News International (ANI) has filed a copyright infringement lawsuit against OpenAI in New Delhi. As copyright disputes involving AI continue to grow, this case offers insights into OpenAI's legal strategy and what might lie ahead.

╰┈➤ The arguments:

→ The first hearing in this case took place at the Delhi High Court on November 19, and according to ANI, "the court is required to decide the legitimacy of the use of publicly available proprietary content by AI platforms."

→ According to OpenAI's lawyers, ANI's website has been internally blocked since September, meaning its content was no longer used to train AI.

→ ANI counters that its content is "permanently stored in the memory of ChatGPT" and cannot be deleted. This raises an interesting parallel with the EU debate on the GDPR and the right to be forgotten: once an AI model has been trained on specific data, it is arguably impossible to remove that data without deleting or retraining the entire model. From a copyright perspective, past infringement likely could not be remedied, as it's embedded in the model.

╰┈➤ The backstage:

→ As AI companies close licensing deals with selected news media outlets, we are seeing more and more outlets not covered by these deals sue AI companies over copyright infringement.

→ As many news media outlets face financial difficulties, licensing deals with AI companies promise fresh revenue and a revival of their business models. For example, Reddit closed a $60 million licensing deal with Google, and in May, News Corp and OpenAI announced a multi-year licensing agreement. There are many more examples, and the companies left out of these deals will be incentivized to sue in an attempt to get a piece of the pie.

╰┈➤ OpenAI's position:

→ According to Reuters, a spokesperson for OpenAI declared: "We build our AI models using publicly available data, in a manner protected by fair use and related principles, and supported by long-standing and widely accepted legal precedents."

→ As copyright lawsuits against AI companies like OpenAI continue to mount, it appears they will persist with their existing practices, repeatedly asserting that their scraping methods qualify as fair use.

→ The next hearing in this case will happen in January 2025.

🔥 Unpopular Opinion

AI companies making these kinds of claims should be banned. AI anthropomorphism should be heavily regulated.

Join the discussion on LinkedIn, Bluesky, or Twitter/X.

⏰ 7 Days Left to Register

If you are dealing with AI-related challenges, you can't miss our acclaimed live online AI Governance Training—now in its 15th cohort.

In December, we’re offering a special intensive format for participants in the Americas & Europe: all 8 lessons (12 hours of live learning with me) condensed into just 3 weeks, finishing before the holidays. A similar cohort runs in late January for Asia-Pacific & Europe.

→ Our unique curriculum, carefully curated over months and constantly updated, focuses on the legal and ethical aspects of AI governance, helping you elevate your career and stay competitive in this emerging field.

→ Over 1,000 professionals from 50+ countries have advanced their careers through our programs, and alumni consistently praise their experience—see their testimonials.

⏰ There are 7 days left to join the December cohort. Are you ready?

🏷️ Use the coupon code BLFRI2024 and get a special 20% Black Friday discount, valid until next Monday:

*You can also sign up for our learning center to receive updates on future training programs along with educational and professional resources.

🏛️ AI Risks, Benefits, and Policy Imperatives

The AI governance report you needed is here! The OECD published "Assessing potential future AI risks, benefits and policy imperatives," and it's a must-read for everyone in AI. Here's what it covers:

╰┈➤ AI’s potential future benefits

→ Accelerated scientific progress

→ Better economic growth, productivity gains and living standards

→ Reduced inequality and poverty

→ Better approaches to urgent and complex issues, including mitigating climate change and advancing other SDGs

→ Better decision-making, sense-making and forecasting

→ Improved information production and distribution

→ Better healthcare and education services

→ Improved job quality

→ Empowered citizens, civil society and social partners

→ Improved institutional transparency and governance, instigating monitoring and evaluation

╰┈➤ Potential future AI risks

→ Facilitation of increasingly sophisticated malicious cyber activity

→ Manipulation, disinformation, fraud and resulting harms to democracy and social cohesion

→ Races to develop and deploy AI systems cause harms due to a lack of sufficient investment in AI safety and trustworthiness

→ Unexpected harms result from inadequate methods to align AI system objectives with human stakeholders’ preferences and values

→ Power is concentrated in a small number of companies or countries

→ Minor to serious AI incidents and disasters occur in critical systems

→ Invasive surveillance and privacy infringement

→ Governance mechanisms and institutions unable to keep up with rapid AI evolutions

→ AI systems lacking sufficient explainability and interpretability erode accountability

→ Exacerbated inequality or poverty within or between countries

╰┈➤ Priority policy actions

→ Establish clearer rules, including on liability, for AI harms

→ Consider approaches to restrict or prevent certain “red line” AI uses

→ Require or promote the disclosure of key information about some types of AI systems

→ Ensure risk management procedures are followed throughout the lifecycle of AI systems that may pose a high risk

→ Mitigate competitive race dynamics in AI development and deployment that could limit fair competition and result in harms

→ Invest in research on AI safety and trustworthiness approaches, including AI alignment, capability evaluations, interpretability, explainability and transparency

→ Facilitate educational, retraining and reskilling opportunities to help address labour market disruptions and the growing need for AI skills

→ Empower stakeholders and society to help build trust and reinforce democracy

→ Mitigate excessive power concentration

→ Targeted actions to advance specific future AI benefits

🎙️ Taming Silicon Valley and Governing AI

Last week, I had a live conversation with Gary Marcus. If you're interested in AI—especially in ensuring it works for us—you should watch the recording. Here's what we discussed:

→ Gary is one of the most prominent voices in AI today. He is a scientist, best-selling author, and serial entrepreneur known for anticipating many of AI's current limitations, sometimes decades in advance.

→ In this live talk, we discussed his new book "Taming Silicon Valley: How We Can Ensure That AI Works for Us," focusing on generative AI's most imminent threats and Gary's views on essential priorities, particularly in AI policy and regulation. We also discussed the EU AI Act, U.S. regulatory efforts, and the false choice often promoted by Silicon Valley between AI regulation and innovation.

→ Watch and share the recording using this link.

🎬 Find all my previous live conversations with privacy and AI governance experts on my YouTube Channel.

📚 AI Book Club: What Are You Reading?

📖 More than 1,800 people have joined our AI Book Club and receive our bi-weekly book recommendations.

📖 The 14th recommended book was The Quantified Worker: Law and Technology in the Modern Workplace by Ifeoma Ajunwa.

📖 Ready to discover your next favorite read? See our previous reads and join the book club here.

🧸 Children's Privacy in the Age of AI

Here's an uncomfortable reminder about children's privacy that every parent should read, especially in light of emerging AI-powered threats:

If you have kids (or take care of kids), it is a bad idea to document their lives on social media. This behavior is called sharenting, and it can have negative consequences for the child.

Most adults don't realize that they share their child's pictures online to get the dopamine hit that comes with likes, comments, and shares. The child gains nothing from being seen by the parent's online connections (or by strangers).

There is also the problem of consent. Some children are too young to understand what is going on. Older children can understand and share their thoughts; however, they are often not consulted, and many parents feel it's their right to post whatever they want about their children online.

A few years from now, the child may be very unhappy to discover that the parent shared so many private moments on social media.

Depending on the age of the child, mental health and trust issues might emerge from having to deal with a parent or caretaker who cannot respect their boundaries and personal wishes.

We, the parents, are from a different generation, and what we find acceptable and “cute,” they might see as wrong and inexcusable. Whatever is uploaded online, even if only “for friends,” has the potential to circulate forever. All it takes is a screenshot or a re-upload.

There are also serious privacy and security issues involved in sharing anything about a child or teenager online, including identity fraud, exposing the child to predators, and cyberbullying.

Specifically regarding AI, children's pictures and videos available online are often scraped by tech companies to train AI systems. These systems might then output or leak them, potentially causing harm now or in the future.

Malicious actors can also use the child's pictures and videos to create explicit AI-powered deepfakes, potentially causing psychological and physical harm and long-term trauma.

╰┈➤ Alternatives to sharenting:

→ If you want to connect with relatives and friends, why not send the picture or video privately?

→ If you want to talk about parenthood online, why not do so without exposing the child?

╰┈➤ Best solutions:

→ Why not enjoy being with the child without posting anything? They will love it.

→ The world will remain the same if you do not post your children's pictures online. If you really need to post something, post a selfie.

🚢 Will Liability Wreck AI Companies?

If you are interested in AI, you can't miss last week's special AI Governance Professional Edition, where I explored the interplay between the new EU Product Liability Directive and the EU AI Act, highlighting why some AI companies might consider EU liability issues a dealbreaker.

Read the preview here. If you're not a paid subscriber, upgrade your subscription to access all previous and future analyses in full.

🔥 Job Opportunities in AI Governance

Below are 10 new AI Governance positions posted in the last few days. This is a competitive field: if it's a relevant opportunity, apply today:

🇨🇦 Dropbox: Senior AI Governance Program Manager - apply

🇬🇧 Deliveroo: AI Governance Lead - apply

🇺🇸 Nike: Sr. Principal, AI Governance (Remote Option) - apply

🇩🇪 Redcare Pharmacy: AI Governance Lead - apply

🇺🇸 Harnham: Senior Product Manager, AI Governance - apply

🇺🇸 American Modern Insurance: AI Governance Lead - apply

🇪🇸 Allianz Technology: Local Data Privacy & AI Ethics - apply

🇫🇮 Nordea: Responsible AI Lead - apply

🇮🇳 Glean: Product Manager, AI Governance - apply

🇸🇪 Essity: Compliance Officer, Data Protection & AI Ethics - apply

🔔 More job openings: subscribe to our AI governance & privacy job boards and receive weekly job opportunities. Good luck!

🙏 Thank you for reading!

If you have comments on this edition, write to me, and I'll get back to you soon.

AI is more than just hype—it must be properly governed. If you found this edition valuable, consider sharing it with friends and colleagues to help spread awareness about AI policy, compliance, and regulation. Thank you!

Have a great day.

All the best, Luiza