Meta’s AI Wants Your Camera Roll

Plus: What is happening in AI | Edition #243

👋 Hi everyone, Luiza Jarovsky here. Welcome to the 243rd edition of my newsletter, trusted by more than 82,100 subscribers worldwide. It's great to have you here!

👉 A special thanks to AgentCloak, this edition’s sponsor:

As companies begin to roll out AI agents into production, compliance teams need to ensure they meet EU AI Act data minimization requirements and cross-border Sovereign AI limits. AgentCloak uses proven digital twin technology to bidirectionally cloak sensitive data. Launch agents with confidence with agentcloak.ai

*To support us and reach over 82,100 subscribers, become a sponsor.

🔥 Before we start, do not miss the most important AI developments from the past few days, curated and commented on by me:

EU AI Act compliance: Contrary to rumors, implementation of the EU AI Act is advancing, and the EU has launched a Single Information Platform to help companies comply. It includes an AI Act explorer, a ‘Compliance Checker,’ and a database of national resources.

AI factories in the EU: The EU has launched six new AI factories in the Czech Republic, Lithuania, the Netherlands, Romania, Spain, and Poland, bringing the total to 19 (!). The bloc now aims to surpass the U.S. and China in the global AI race. Will it manage to do so?

California did it again: California’s two most important AI laws are here. Most people are not aware of it, but these laws could shape the global AI regulation landscape more than the EU AI Act.

“I expect some really bad stuff to happen”: In a recent podcast interview, Sam Altman shared his pessimistic views about the future of AI, and I bet that OpenAI’s legal department is not happy.

Erotica comes to ChatGPT: Sam Altman says that OpenAI is not the ‘elected moral police of the world’ and that ChatGPT will allow erotica for adults.

Boris Johnson’s bad example: The UK’s former prime minister made clear that he does not understand how AI works or the risks it poses.

Embodied AI is coming: With the rise of agentic AI, the paper “Embodied AI: Emerging Risks and Opportunities for Policy Action,” by Jared Perlo et al., is a great read for everyone interested in AI policy and regulation.

Cartography of Generative AI: Don’t miss this very cool project, designed by a collective of creatives and researchers, mapping the human origins and extractive nature of generative AI. It is based on “Atlas of AI” by Kate Crawford, our first AI Book Club pick.

Schools should not be “AI-first”: A school that adopts AI as a goal (not as a tool) will create a learning environment where children are not encouraged to face a variety of intellectual and creative challenges simply because they can be quickly solved by AI.

Scraping vs. Privacy: Daniel Solove and Woodrow Hartzog published the final version of their excellent paper “The Great Scrape: The Clash Between Scraping and Privacy,” which offers important insights on the intersection of privacy and AI.

AI vs. excellence: Many think that using AI (or adopting an “AI-first” attitude) will help them grow or become more successful. But the “illusion of expertise” created by AI will often create distractions and push people further away from true excellence.

AI safety update: The latest update to the International AI Safety Report led by Yoshua Bengio focuses on advances in general-purpose AI systems’ capabilities and the implications for critical risks.

Meta’s AI Wants Your Camera Roll

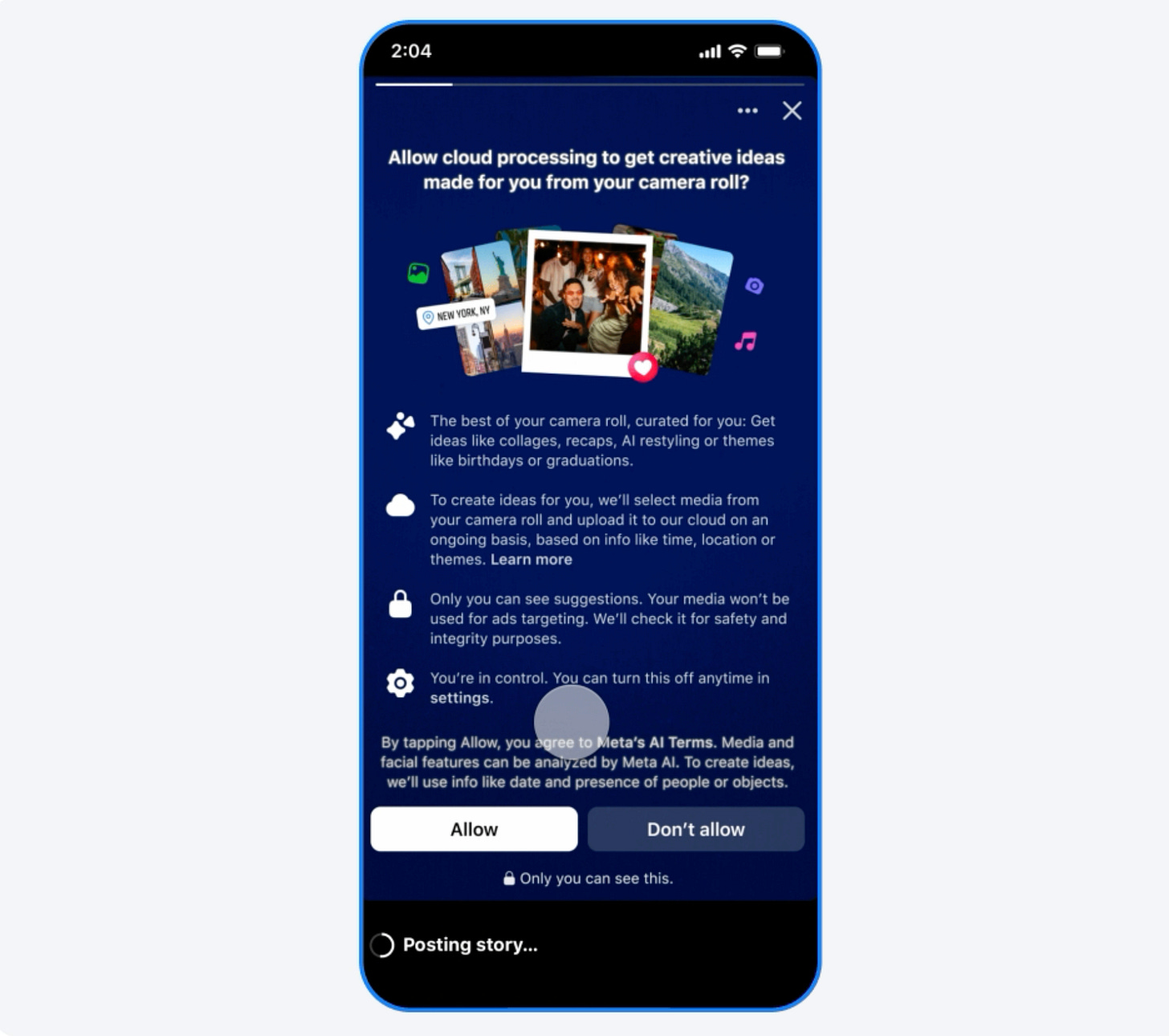

Facebook recently launched a new feature that allows people to give it access to their camera roll so they can receive suggestions of photos and videos, including collages and edits, to post on social media.

The feature is opt-in, and it requires ongoing access to the camera roll so Meta’s AI can automatically make suggestions.

According to the official announcement:

“To get creative ideas for you, we’ll select media from your camera roll and upload it to our cloud on an ongoing basis, based on info like time, location, or themes.”

My first question was: will Meta use photos and videos from Facebook users’ camera rolls to train its AI?

In the announcement, Meta mentions that if the user chooses either to edit the photo or video with Meta’s AI tool or to share it, the photo or video will be used by Meta to train its AI.

Additionally, a screenshot from the consent pop-up screen shown in the announcement (below) says: “Media and facial features can be analyzed by Meta AI.”

After reading the announcement, it was still unclear to me exactly how they were going to process camera roll media, including for AI training, so I checked Meta’s Privacy Policy.

Then things got much worse.

There, “camera roll content” is mentioned 13 times (!) in the context of data that can be collected from users and processed by Meta. It says that Meta can use camera roll content to:

Personalize Meta’s products

Improve Meta’s products

Promote safety, integrity, and security across Meta’s products

Communicate with users

Transfer, store, or process information across borders, including with partners, third parties, and service providers

Provide measurement, analytics, and business services

Share information across the Meta companies

Conduct business intelligence and analytics

Identify the user as a Meta product user and personalize ads (*the consent pop-up specifically mentions that camera roll content will not be used for ad targeting, so it is unclear how exactly it will affect advertising)

Research and innovate for social good

Anonymize information

Share information with others, including law enforcement, and respond to legal requests

Process information when required by law

Regarding the AI aspect specifically, since Meta’s AI will have to constantly analyze media from the camera roll to select which items to suggest, I found both the announcement and the Privacy Policy to be ambiguous.

It is not clear to me whether Meta could argue that it may use camera roll content to “improve Meta’s AI” (see the second item above, as Meta AI is a product) but not to “train it,” whatever the practical difference between the two may be.

But here is what most people are not noticing:
