👾 Is AI making us less human?

Plus: this week's AI Briefing, free resources, and more

👋 Hi, Luiza Jarovsky here. Welcome to the 91st edition of this newsletter on privacy, tech & AI, and thanks to 18,438 email subscribers and 63,630 social media followers who are with me on this journey.

🌐 Excited for more content? Follow me on LinkedIn, X, YouTube, Instagram, Threads & TikTok (and come say hi).

A special thanks to Ketch, this edition's sponsor. Check out their report:

After a tumultuous 2023, what’s in store for privacy leaders in 2024? Dive in with Ketch's 2024 Data Privacy Trend Report. From digital advertising complexity, to dark patterns, to AI, get prepared for the year ahead with predictions & actionable advice from industry experts. Download your copy.

To sponsor this newsletter, get in touch.

👾 Is AI making us less human?

OpenAI broke the internet again

Last Thursday, OpenAI announced Sora, its text-to-video AI tool, and broke the internet for the second time in less than two years (the first time was in November 2022, when it launched ChatGPT).

The AI tool is not yet available to the public, but Sam Altman (OpenAI's CEO) and other team members have been sharing examples on X. If you have not seen these AI-generated videos yet, they are mind-blowing.

Glitches

There are still glitches that give these videos away as AI-generated. For example, I posted one of them and asked people to point out the signs that it was AI-made; read what they said on X and LinkedIn (and try to spot more issues yourself).

This is an entertaining but also serious exercise, and a skill people will need to start mastering immediately: distinguishing fake from real.

Scams

It might seem easy when you see this robotic dog or animals fantastically riding bicycles. However, two weeks ago, a multinational company suffered a US$25.6 million loss after scammers staged a video meeting in which a deepfake of the company's CFO convinced an employee to make 15 transfers to five different bank accounts in Hong Kong. Depending on the skill of the malicious actor, anyone can become a victim.

Being human

Beyond deception, synthetic AI videos affect us in a deeper way: they change how we trust others and the world, and how we form our perception of truth.

Two weeks ago, I wrote and spoke about how saying no to AI can be empowering and how the effort behind day-to-day activities can be important for our personal growth. Today, I want to talk about trust and truth.

Trust

Trust is a very important element of our social interactions. It matters even on social networks, where we often interact with strangers or people we barely know. We tend to assume that what we see online is real. For decades, if someone made a claim and posted video evidence to support it, we accepted the video as trustworthy proof. This premise has fallen apart (although most people have not realized it yet).

From now on, everything can potentially be fake, even if it seems extremely realistic or if it was sent by a friend. When it is so cheap and easy to falsify digital content, no digital content can be trusted anymore.

Are we ready to stop trusting? Is that even feasible, for individuals or for societies? This is a historic experiment, as it has never happened before, and I do not have answers. My intuitive guess (based on my Ph.D. research on dark patterns) is that the ability and willingness to trust are “built into” our cognitive system, and that we will never stop falling prey to manipulative techniques, especially as they become more sophisticated.

Truth

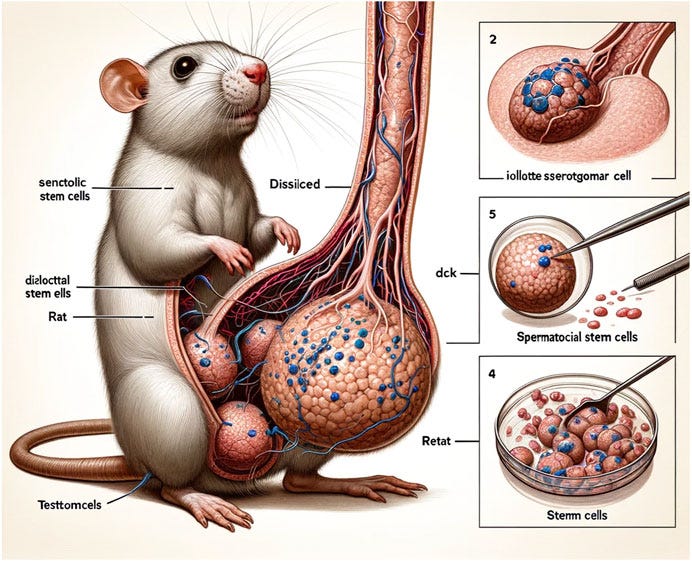

Regarding truth, humans need to build some sense of “what is true.” For example, when you read a scientific article, you expect that it will be based on facts and that the methodology used will be focused on getting closer to what is scientifically acceptable. However, given how cheaply and easily AI-generated content can be produced, more and more, what we see is not the truth, and AI manipulation is even overcoming traditional barriers such as peer review. See the image below, for example:

It's part of a peer-reviewed article published online in the journal Frontiers in Cell and Developmental Biology. The image is AI-generated, fake, and wrong. According to Ars Technica: “many researchers expressed surprise and dismay that such a blatantly bad AI-generated image could pass through the peer-review system and whatever internal processing is in place at the journal.”

In an educational context, when teenagers are researching climate change or the Roman Empire, for example, there must be knowledge repositories where the information is factual and the methodology is scientific. If every piece of digital content is potentially fake, how will they navigate it and acquire any knowledge? More broadly, how is anyone supposed to learn about the world if everything can be fake? Unfortunately, I don't have answers.

*

Lastly, and perhaps to add a bit of humor to all this: five days have passed since Sora's launch, and there are already videos pretending to be AI-generated. I am happy to see that, so far, human irreverence remains untouched by AI.

🔥 Essential (and free) privacy, tech & AI resources

✔️ Join our AI Book Club (750+ members). We are currently reading “Unmasking AI” by Dr. Joy Buolamwini, and the meeting will be in March

✔️ Check out and subscribe to our popular privacy and AI job boards, activate the alerts, and land your dream job sooner

✔️ Register for our live session “AI Governance: Hot Topics, Key Concepts, and Best Practices” with Alexandra Vesalga, Kris Johnston, Katharina Koerner, Ravit Dotan, and me on March 7th (395+ people confirmed)

✔️ Watch or listen to my podcast, featuring 1-hour conversations with global experts on privacy, tech & AI

✔️ Check out my privacy, tech & AI content on LinkedIn, X, YouTube, Instagram, Threads & TikTok

🎓 4-week Privacy, Tech & AI Bootcamp

If you enjoy this newsletter, you will love our Bootcamp. It's a great opportunity if you want to dive deeper into the topics I usually cover here, learn with peers, and advance your career. It includes 4 live sessions with me, additional learning material (1-2 hours a week), quizzes, office hours, a certificate, and 8 CPE credits pre-approved by the IAPP. We have special coupons for students and people who cannot afford the fee, and newsletter subscribers get 10% off. Read the full program, check out the testimonials, and register for one of the March cohorts here. Questions? Write to me.

💛 Do you read this newsletter every week? What do you most like about it? Let me know! I'll get back to you soon :-)

🔥 AI Briefing (for paid subscribers)

If you want to better understand AI-related issues and trends, especially from a data protection perspective, here's my commentary on the most important AI topics this week:
