🏛️ How EU lawmakers define AI

All you need to know about AI Policy & Regulation | Luiza's Newsletter #98

👋 Hi, Luiza Jarovsky here. Welcome to the 98th edition of this newsletter, read by 21,550+ subscribers in 125+ countries. I hope you enjoy reading it as much as I enjoy writing it.

A special thanks to Usercentrics, this week's sponsor. Check out their webinar:

Are you still using Google Ads or Google Analytics with EU/EEA audiences, but without Google Consent Mode? You’re at risk of losing data and access to audience building, remarketing, conversion modeling, and more. Join tech experts from Usercentrics on April 11 for a free webinar. In just 30 minutes, learn how to comply with Google's EU user consent policy and grow revenue with Consent Mode. Register now

🏛️ How EU lawmakers define AI

➡️ The EU AI Act was approved by the European Parliament on March 13. It contains definitions everyone should be familiar with, as they are legally relevant and will likely spread to other AI laws around the world.

➡️ There is still time until the full text of the AI Act becomes enforceable (2 years after it officially becomes law), so this is a great time to learn and implement changes.

➡️ I've selected a few definitions below (from the AI Act's Article 3), which reflect how EU lawmakers approach the technical aspects behind AI development. Check them out:

➵ AI System: "a machine-based system designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments"

➵ Post-market monitoring system: "all activities carried out by providers of AI systems to collect and review experience gained from the use of AI systems they place on the market or put into service for the purpose of identifying any need to immediately apply any necessary corrective or preventive actions"

➵ Validation data: "data used for providing an evaluation of the trained AI system and for tuning its non-learnable parameters and its learning process, among other things, in order to prevent underfitting or overfitting; whereas the validation dataset is a separate dataset or part of the training dataset, either as a fixed or variable split"

➵ Serious incident: "any incident or malfunctioning of an AI system that directly or indirectly leads to any of the following:

- the death of a person or serious damage to a person’s health;

- a serious and irreversible disruption of the management and operation of critical infrastructure;

- breach of obligations under Union law intended to protect fundamental rights;

- serious damage to property or the environment"

➵ General Purpose AI model: "an AI model, including when trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable to competently perform a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications. This does not cover AI models that are used before release on the market for research, development and prototyping activities"

➵ General Purpose AI system: "an AI system which is based on a general purpose AI model, that has the capability to serve a variety of purposes, both for direct use as well as for integration in other AI systems"
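To make the "validation data" definition above more concrete, here is a minimal Python sketch of a fixed split, where the validation dataset is carved out of the training dataset. The function name `split_train_validation`, the 20% fraction, and the seed are my own illustrative assumptions and do not come from the AI Act.

```python
import random


def split_train_validation(records, validation_fraction=0.2, seed=0):
    """Return (training, validation) lists from a fixed, reproducible split.

    Hypothetical helper for illustration only; the 20% default is an assumption.
    """
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(records)               # copy so the caller's data is untouched
    random.Random(seed).shuffle(shuffled)  # same seed -> same ("fixed") split
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    # The first n_validation records are held out; the rest are used for training.
    return shuffled[n_validation:], shuffled[:n_validation]


records = list(range(10))  # stand-in for real data records
training, validation = split_train_validation(records)
print(len(training), len(validation))
```

Changing the seed on each run would give what the definition calls a "variable split". In both cases, the held-out records are used to evaluate and tune the system rather than to train it.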

➡️ To learn more about the topic, check out my 4-week EU AI Act Bootcamp (launching today) and this newsletter's EU AI Act Lab.

📜 Draft of federal US privacy law unveiled

The draft of a potentially historic federal US privacy law was unveiled: the American Privacy Rights Act of 2024 (APRA). What you need to know:

➡️ According to the official release, the APRA:

➵ Establishes foundational uniform national data privacy rights for Americans

➵ Gives Americans the ability to enforce their data privacy rights

➵ Protects Americans’ civil rights

➵ Holds companies accountable and establishes strong data security obligations

➵ Focuses on the business of data, not Main Street business

➡️ You can read the section-by-section summary here.

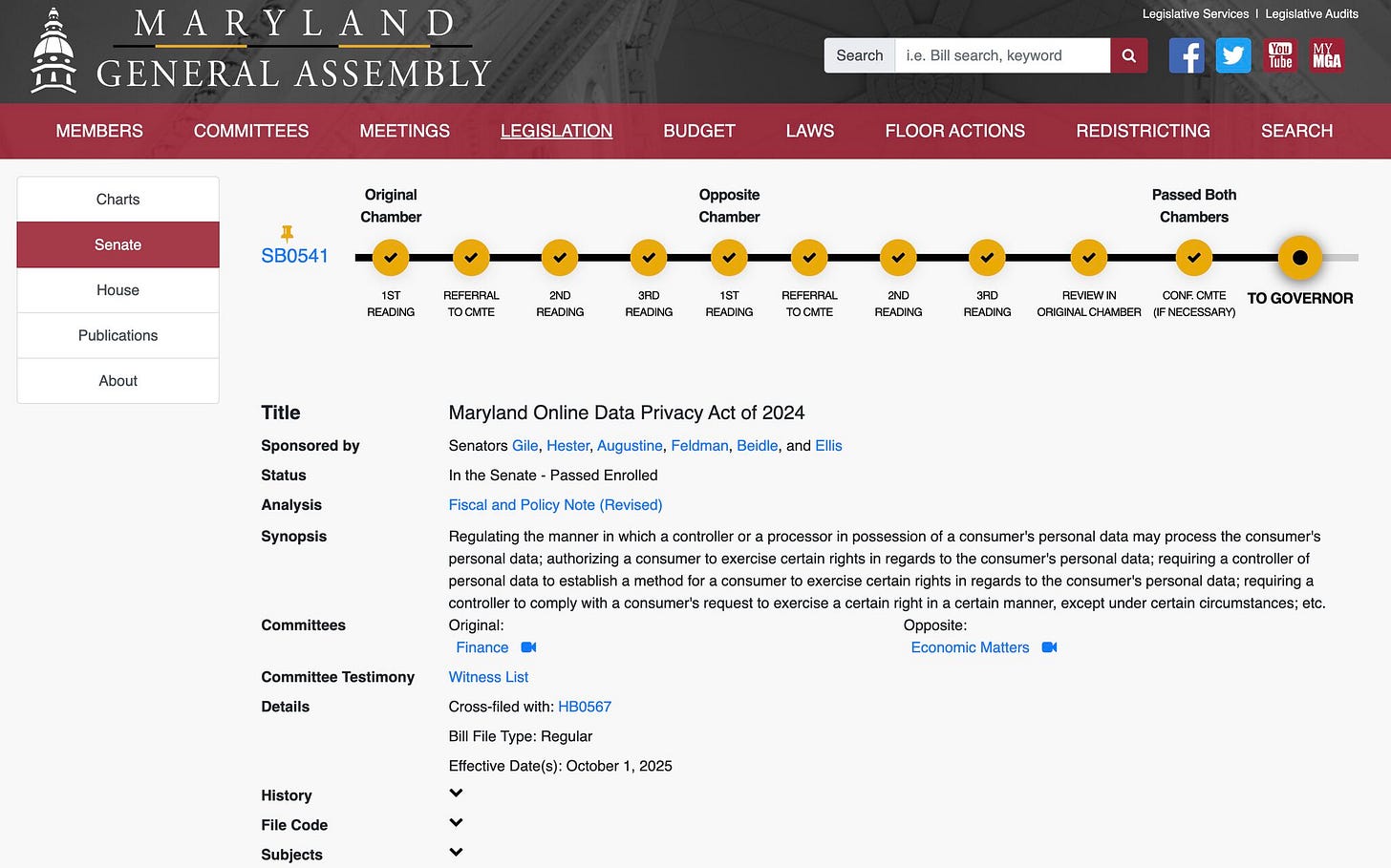

⚖️ Maryland Online Data Privacy Act of 2024

➡️ Maryland enacts the Maryland Online Data Privacy Act of 2024, one of the strongest privacy laws in the US so far. The bill goes into effect on October 1, 2025.

🎼 Artists against the AI-based devaluation of music

➡️ A group of 200+ artists, including Billie Eilish, Katy Perry, Nicki Minaj, Jon Bon Jovi, Pearl Jam, Sheryl Crow, Luis Fonsi, Elvis Costello, Greta Van Fleet, Imagine Dragons, the Jonas Brothers, Kacey Musgraves, Mac DeMarco, Miranda Lambert, Mumford & Sons, Noah Kahan, Norah Jones, and Zayn Malik, signed the letter "Stop Devaluing Music," calling on tech companies and music services to stop using AI to devalue the rights of human artists.

➡️ I quote their letter: "This assault on human creativity must be stopped. We must protect against the predatory use of AI to steal professional artists' voices and likenesses, violate creators' rights, and destroy the music ecosystem."

➡️ Hopefully, the movement demanding lawfulness, ethics, fairness, and compensation in the context of generative AI will spread to other creative fields.

🚨 Excellent AI paper alert

"Theory Is All You Need: AI, Human Cognition, and Decision Making" by Teppo Felin & Matthias Holweg is an excellent paper discussing the intersection of AI and human cognition. Interesting quotes:

"The differences between human and machine learning—when it comes to language (as well as other domains)—are stark. While LLMs are introduced to and trained with trillions of words of text, human language “training” happens at a much slower rate. To illustrate, a human infant or child hears—from parents, teachers, siblings, friends and their surroundings—an average of roughly 20,000 words a day (e.g., Gilkerson et al., 2017; Hart and Risley, 2003). So, in its first five years a child might be exposed to—or “trained” with—some 36.5 million words. By comparison, LLMs are trained with trillions of tokens within a short time interval of weeks or months. The inputs differ radically in terms of quantity (sheer amount), but also in terms of their quality." (pages 12-13)
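The arithmetic behind the 36.5 million figure in the quote above can be checked with a short Python sketch. The 365-day year is my assumption about how the authors reached that number. The one-trillion-token value is only an order-of-magnitude placeholder for "trillions of tokens", not a figure from the paper, and words and tokens are not the same unit, so the ratio is only a rough indication.

```python
WORDS_PER_DAY = 20_000   # average cited in the quote
DAYS_PER_YEAR = 365      # assumption: no leap years
YEARS = 5

child_words = WORDS_PER_DAY * DAYS_PER_YEAR * YEARS
print(f"{child_words:,}")  # 36,500,000

# Placeholder lower bound for "trillions of tokens" (assumption, not from the paper).
LLM_TOKENS = 1_000_000_000_000
ratio = LLM_TOKENS / child_words
print(round(ratio))  # 27397
```

Even with this conservative placeholder, the LLM's training input is tens of thousands of times larger than what a child hears in five years.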

-

"But can an LLM—or any prediction-oriented cognitive AI—truly generate some form of new knowledge? We do not believe they can. One way to think about this is that an LLM could be said to have “Wiki-level knowledge” on varied topics in the sense that these forms of AI can summarize, represent, and mirror the words (and associated ideas) it has encountered in myriad different and new ways. On any given topic (if sufficiently represented in the training data), an LLM can generate indefinite numbers of coherent, fluent, and well-written Wikipedia articles. But just as a subject-matter expert is unlikely to learn anything new about their specialty from a Wikipedia article within their domain, so an LLM is highly unlikely to somehow bootstrap knowledge beyond the combinatorial possibilities of the data and word associations it has encountered in the past." (page 16)

-

"AI is anchored on data-driven prediction. We argue that AI’s data and prediction-orientation is an incomplete view of human cognition. While we grant that there are some parallels between AI and human cognition—as a (broad) form of information processing—we focus on key differences. We specifically emphasize the forward-looking nature of human cognition and how theory-based causal logic enables humans to intervene in the world, to engage in directed experimentation, and to problem solve." (page 37)

Read the paper here.

🚨 Meta changes its AI labeling policy

Meta announced it has changed its approach to AI-generated media on Facebook, Instagram, and Threads. What you need to know:

➡️ Meta will label a wider range of video, audio, and image content as "Made with AI" in two cases:

➵ when it detects "industry standard AI image indicators";

➵ when users disclose it's AI-generated content.

➡️ If it determines that digitally altered images, videos, or audio can deceive the public on a matter of importance, it might add a more prominent label.

➡️ It plans to implement these changes in May.

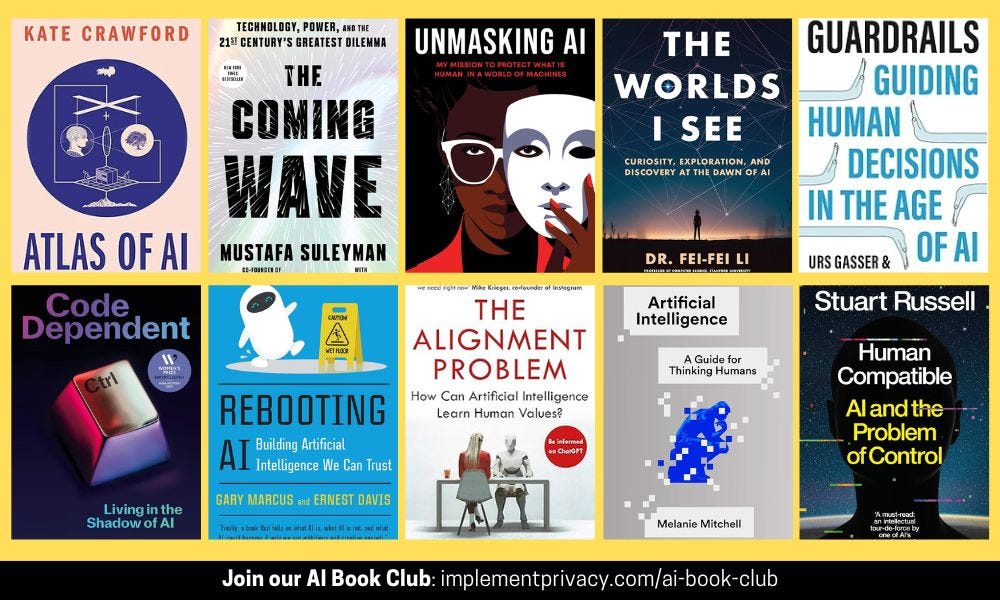

📚 Join our AI Book Club (910+ members)

➡️ If you are overwhelmed by the amount of AI-related information, here is a list of some of the most interesting books on AI published in the last few years, which might help you make better sense of the topic:

➵ Atlas of AI, by Kate Crawford

➵ The Coming Wave, by Mustafa Suleyman & Michael Bhaskar

➵ Unmasking AI, by Dr. Joy Buolamwini

➵ The Worlds I See, by Fei-Fei Li

➵ Guardrails, by Urs Gasser & Viktor Mayer-Schoenberger

➵ Code Dependent, by Madhumita Murgia

➵ Rebooting AI, by Gary Marcus & Ernest Davis

➵ The Alignment Problem, by Brian Christian

➵ Artificial Intelligence: A Guide for Thinking Humans, by Melanie Mitchell

➵ Human Compatible, by Stuart Russell

➡️ You're also welcome to join our free AI Book Club (910+ members), which will help you keep a reading schedule. The next meeting will be on May 16 at 1pm ET, and we'll discuss “The Worlds I See” by Fei-Fei Li.

🔎 Job board: Privacy & AI Governance

If you are looking for job opportunities in privacy & AI governance, check out the links below (I have no connection to these organizations; please apply directly):

➡️ European AI Policy Lead Program Manager at OpenAI - Belgium (remote): "As the AI Policy Lead, you’ll focus on the AI Act implementation and adjacent legislative and standardization efforts focused on regulating AI models. In addition, you’ll be a Program Manager for the Global Affairs team in the region to streamline work within our team and with our partners in legal, product and sales." Apply here

➡️ Senior Privacy Counsel at Wolt - Finland: "We’re looking for someone who has the ability to tackle complex privacy issues across different countries and business functions. A person who is able to maneuver multiple cases and stakeholders simultaneously, understand core business needs and support our people with the Wolt way of doing things will excel in this role." Apply here

➡️ Director, Risk Lead for Generative AI at Capital One - US: "As a Director Risk Leader supporting the AI Foundations Program, you will partner with colleagues across AI product, design, and tech to deliver results that have a direct impact on customer experience and implement risk solutions to ensure Capital One’s continued stability and success." Apply here

➡️ Senior Privacy Project Manager at Booking(.)com - Netherlands: "Booking(.)com is currently looking for a world-class Snr Privacy Project Manager to join the Snr Privacy Programme Manager’s team, within Legal and Public Affairs to manage the delivery of the privacy aspects of a large data migration project, and support Privacy Programme Office operations." Apply here

➡️ Subscribe to our privacy and AI governance job boards to receive our weekly email alerts with the latest job opportunities.

🎓 Advance your career, get a certificate

If you enjoy this newsletter, you can't miss my Bootcamps:

➡️ LAST CALL: The 5th edition of the 4-week Bootcamp on Emerging Challenges in Privacy Tech & AI starts in 2 days. There are 2 spots left: read the program and register here.

➡️ NEW: Today, we are launching the 4-week Bootcamp on the EU AI Act. 15 people have pre-registered for it. Read the program and register here (limited seats).

➡️ If you are not ready to join the Bootcamps, you can sign up for the waitlist and stay informed of ongoing and future programs.

🙏 Thank you for reading!

If you have comments on this week's edition, I'll be happy to hear them! Reply to this email, and I'll get back to you soon.

Have a great day!

Luiza