General Purpose AI Will Never Be Safe

Authorities should require AI companies to 1) limit the purposes of their AI systems; 2) respect sector-specific rules; 3) be held accountable for the harm their AI systems cause | Edition #267

👋 Hi everyone, Luiza Jarovsky, PhD, here. Welcome to the 267th edition of my newsletter, trusted by more than 89,200 subscribers worldwide.

As the old internet dies, polluted by low-quality AI-generated content, you can always find raw, pioneering, human-made thought leadership here. Thank you for helping me make this a leading publication in the field!

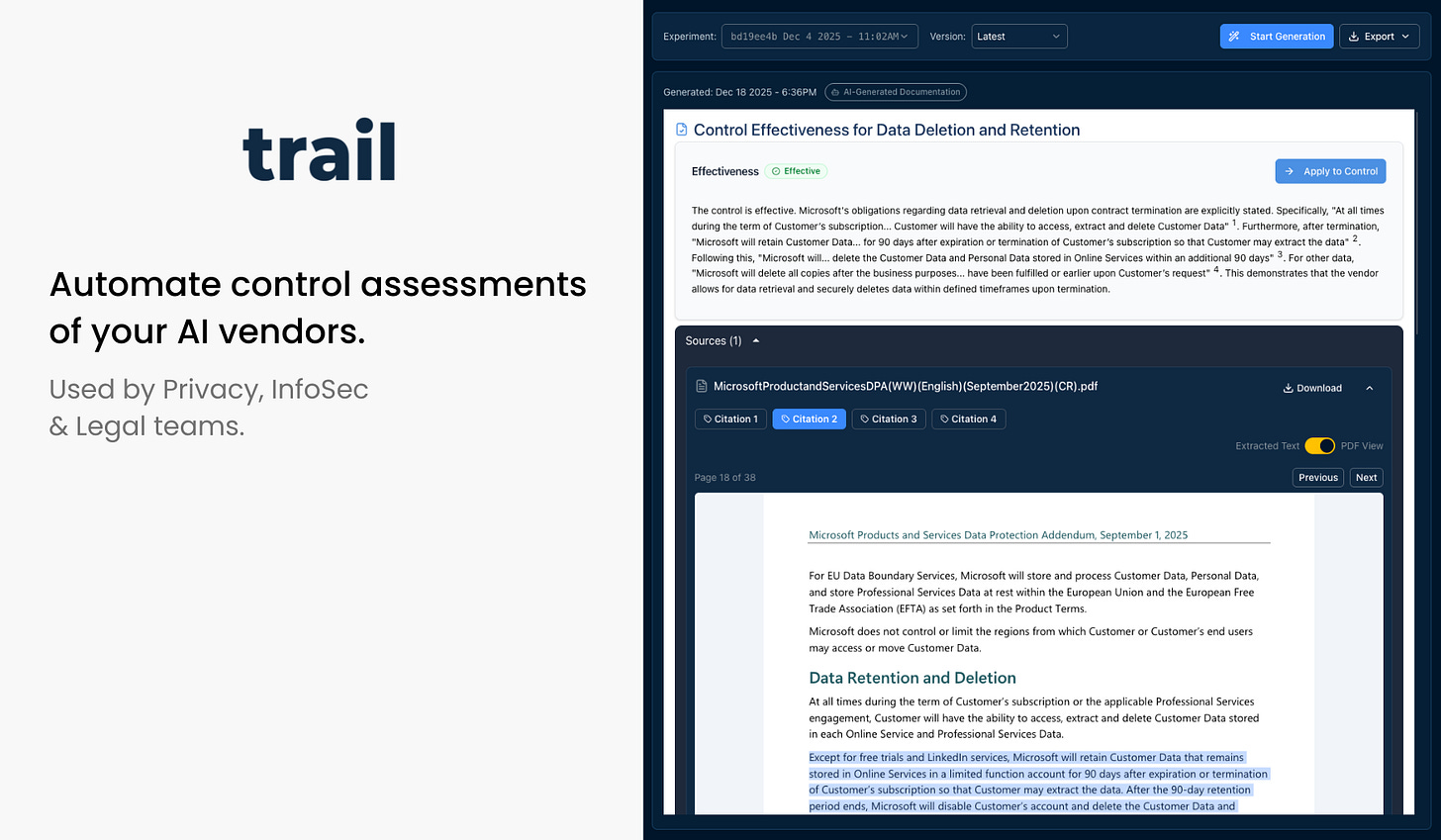



I have written several times in this newsletter that financial advice, psychotherapy, and storytelling for kids should never be offered as a single product or service.

This is intuitive for most people, and in addition to the social, cultural, and ethical reasons why it is true, there is also an important legal basis:

Financial advice, psychotherapy, and storytelling products or services are used by different groups of people in different ways, present different risk profiles, and are governed by different sets of legal rules.

However, despite the existing consensus on the impracticality and potential unlawfulness of coupling financial advice, psychotherapy, storytelling, and other areas into a single product, general-purpose AI systems, starting with ChatGPT in November 2022, broke this rule.

Today, you can use general-purpose AI chatbots like ChatGPT, Gemini, Claude, and their competitors to obtain financial advice, psychotherapy, storytelling, and services in numerous other domains.

These various domains have little to nothing in common and would never be offered as a single physical product or service in an offline context.

Most regulatory authorities have not realized it yet (and hopefully this edition will raise awareness of the topic), but this is a major obstacle to effective AI regulation and AI chatbot safety, and it must change.

When the same general-purpose AI chatbot is being used by:

a man looking for intimate companionship,

a teenage girl secretly asking about drugs and sex,

a person with severe mental health issues looking for psychological support,

there will never be a single set of rules that provides adequate service, protects the people involved, and addresses the context-specific risks.

When rules are inadequate for a product or service’s risk profile, people remain unprotected, and cases of harm happen more often (as we have observed over the past three years in AI, especially in the context of mental health harm and suicide).

Also, insufficient rules help AI companies avoid accountability for the harms their systems cause. They will argue that it is too difficult or "technically unfeasible" to prevent harm (another argument we have heard multiple times in the past three years).

-

The only way to properly regulate AI chatbots - and I know many in AI will get angry with what I am saying, as every time I propose stricter AI rules, I am personally attacked - is to require AI companies to provide purpose-specific AI chatbots.

It is a big statement, but authorities and policymakers must realize that general-purpose AI chatbots will never be properly regulated, for the reasons I described above.

Authorities should require AI companies to define the target audience and use cases for their AI systems (including chatbots). The risk profile should be clear, and so should the applicable standards, technical rules, filters, and built-in guardrails.

An AI company that wants to offer an AI system providing medical advice services, for example, should be required to obtain certification or hold a legally recognized credential demonstrating compliance with all applicable laws governing this sector, including product safety, data protection, consumer protection, and other laws.

Note that I am not opposed to general-purpose AI models (such as LLMs). As I discuss in my AI Governance Training, models differ from systems, and different rules apply to providers of models and providers of AI systems (if you have questions about the differences between these two terms, I recommend reading the EU AI Act’s definitions in Article 3, items 63 and 66).

An LLM can power a purpose-specific AI chatbot, and LLMs have important use cases and should continue to be developed. Also, many places in the world have rules for AI models, and these rules must continue to evolve to address the emerging challenges.

I am talking here about AI systems, the user-facing applications, the ones that will be subject to product-specific rules.

-

If you think that what I wrote above is too radical, or that it does not reflect the real world, consider yesterday’s public argument between Elon Musk and Sam Altman, which highlights the regulatory challenge I described.

Leaving their personal fight aside, Sam Altman is assuming, without using the regulatory framing, that general-purpose AI systems like ChatGPT are too difficult to regulate.

They serve too many groups of people with diverse interests and vulnerabilities, across too many use cases, posing too broad a range of risks.

As the title of today's newsletter says, general-purpose AI systems will never be safe, and regulatory authorities and policymakers must understand the risks and take the necessary action before it is too late.

The public must also make clear that they will not accept having their loved ones exposed to unsafe AI systems and that they demand accountability from AI companies.


Yes, this is a big problem in the regulatory space. It is also a technical problem. You cannot mitigate all prompt injection threats (to which every single LLM system is exposed) without understanding the space you are operating in. This of course goes hand in hand with AI governance, but it is another reason why general-purpose AI is not safe.

https://genai.owasp.org/llmrisk/llm01-prompt-injection/

Half of these mitigations can only be applied if it is NOT a general service.
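To illustrate the point, here is a minimal sketch of one such mitigation: strictly validating the model's output against an expected format. It assumes a hypothetical appointment-booking assistant whose replies must be a JSON object with an `action` and a `date`; the action names, field names, and the `validate_reply` function are all invented for this example and do not come from the OWASP page or any particular product. A check like this is only possible because the purpose is narrow enough to define what a legitimate reply looks like.

```python
import datetime
import json

# Hypothetical: the only actions this purpose-specific assistant may request.
ALLOWED_ACTIONS = {"book", "cancel", "reschedule"}


def validate_reply(raw: str) -> dict:
    """Accept a model reply only if it matches the narrow expected format.

    Raises ValueError for anything outside the declared purpose.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError("reply is not valid JSON") from exc

    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    if set(data) != {"action", "date"}:
        raise ValueError("unexpected or missing fields in reply")
    if data["action"] not in ALLOWED_ACTIONS:
        raise ValueError("action outside the declared purpose")
    if not isinstance(data["date"], str):
        raise ValueError("date must be a string")

    # fromisoformat raises ValueError itself when the date is malformed.
    datetime.date.fromisoformat(data["date"])
    return data
```

A general-purpose chatbot has no equivalent of `ALLOWED_ACTIONS`: when any reply on any topic is potentially legitimate, there is nothing to validate against, so an injected instruction is much harder to tell apart from a normal request.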

This is a really important subject. I have recently been reading about using multiple LoRA adapters (post-training fine-tuning and preference alignment) on the same base LLM. This could be a way to offer different model customisations to different segments of users. Alternatively, there could be different model endpoints for different user segments. The challenge would be to accurately differentiate users, e.g., teenagers, vulnerable individuals, etc.

I understand LoRA adapters can be switched at inference time with very low latency.
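As a rough sketch of the routing idea, assuming the user's segment has already been determined by some upstream step (which, as noted, is the hard part): the segment labels, adapter names, and the `select_adapter` function below are hypothetical, and the code only picks an adapter name. Actually loading or switching the adapter on the model is left to whatever serving stack is in use.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from user segment to the name of a LoRA adapter
# trained for that segment. All names are invented for illustration.
ADAPTER_BY_SEGMENT = {
    "adult_general": "adapter-general",
    "teen": "adapter-teen-safe",
    "vulnerable": "adapter-crisis-aware",
}

# Assumption: when the segment is unknown, fall back to a restrictive
# adapter rather than to the most permissive one.
FALLBACK_ADAPTER = "adapter-teen-safe"


@dataclass(frozen=True)
class UserProfile:
    user_id: str
    segment: Optional[str]  # None means the segment could not be determined


def select_adapter(profile: UserProfile) -> str:
    """Return the name of the adapter to activate for this user's request."""
    if profile.segment is None:
        return FALLBACK_ADAPTER
    return ADAPTER_BY_SEGMENT.get(profile.segment, FALLBACK_ADAPTER)


if __name__ == "__main__":
    print(select_adapter(UserProfile("u1", "teen")))
    print(select_adapter(UserProfile("u2", None)))
```

The design choice worth noting is the fallback: because segment detection will sometimes fail, an unrecognised or missing segment is routed to the restrictive adapter instead of the general one. The same selection logic would apply if each segment had its own model endpoint rather than its own adapter.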