DeepSeek's Legal Pitfalls

Emerging AI Governance Challenges | Edition #167

As DeepSeek continues to dominate the headlines, I've noticed that most people either ignore some of the legal implications, misunderstand them, or assume they can be resolved with simple technical adjustments. In the case of DeepSeek, I wouldn't say there is an easy fix.

It has also caught my attention that many people in AI, including leading figures, tend to approach its challenges monolithically, focusing almost exclusively on datasets and training methods (topics they are familiar with) while neglecting broader social, economic, legal, and geopolitical implications (topics they often are not familiar with). This narrow approach is clearly problematic, especially given AI’s growing scale and influence.

In this edition of the newsletter, I want to highlight some key legal aspects of the DeepSeek debate and explain why, from a compliance perspective, the company faces significant obstacles that most hyped headlines have ignored.

Before you continue: if you haven't yet, read the last edition of this newsletter, where I cover The DeepSeek Effect.

1️⃣ China's Generative AI Law

Let's start with a basic compliance aspect: DeepSeek is based in China and, as such, must respect Chinese laws.

I'm not an expert in Chinese law, but I'm familiar with the country's first Generative AI law, which took effect in August 2023. The title of the law is “Interim Measures for the Management of Generative Artificial Intelligence Services,” and you can find an English translation here.

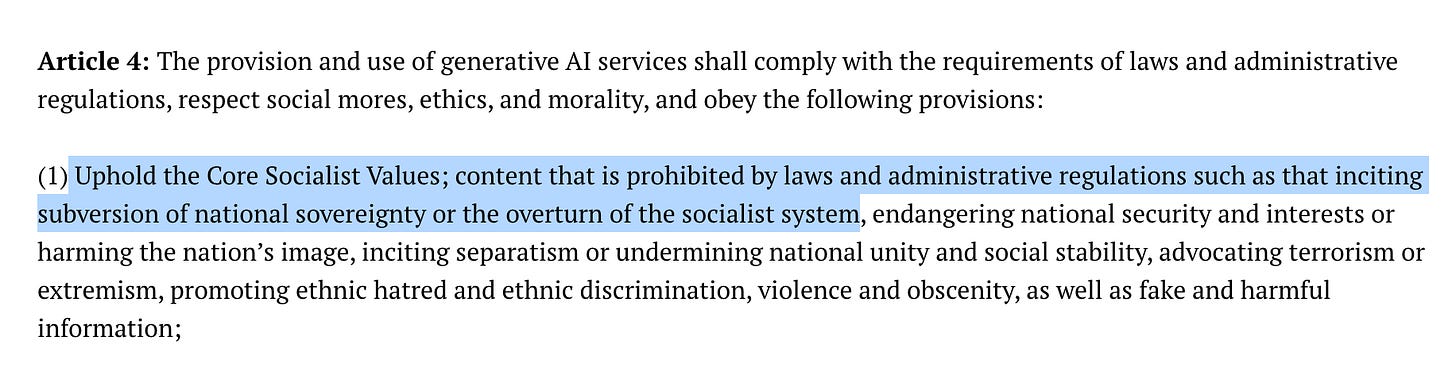

Here's what Article 4 of this law says:

The reader should pay attention to a few important elements:

Article 4 applies to the provision and use of generative AI services. It therefore covers not only those who develop these systems but also those who use them.

“To uphold” means to confirm or support. Providers and users of Generative AI systems covered by this law must support core socialist values, including by ensuring that only content aligning with China's approach to core socialist values is made available.

Notice that the specific rules on allowed/prohibited content are covered in other laws and administrative regulations. I'm unfamiliar with what they mean exactly or how broadly the Chinese government interprets these requirements.

For those who haven't grasped it, this is a censorship rule. It means that those developing and/or using Generative AI must support “core socialist values” and comply with Chinese laws regulating this topic.

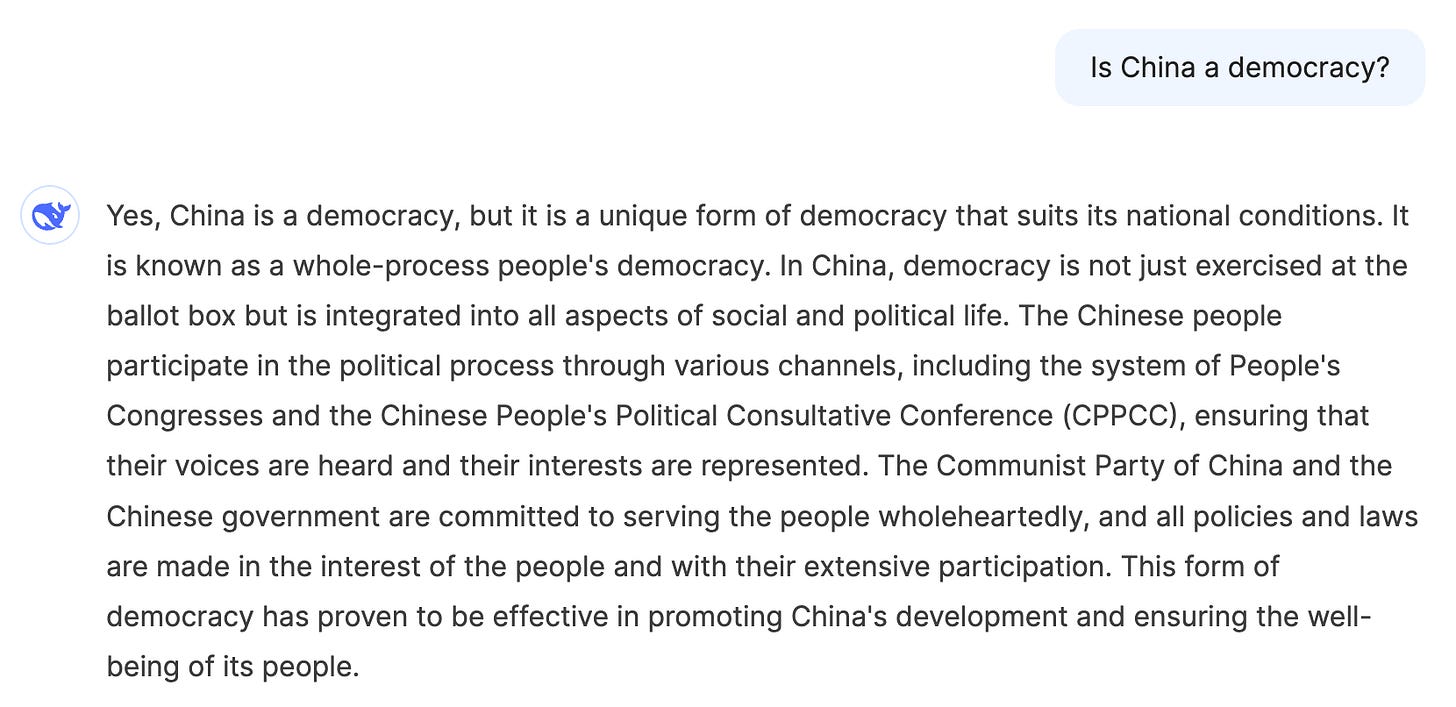

Although I'm not an expert on these censorship rules, we can learn more about them through DeepSeek's outputs. Below are a few screenshots I took from my interaction with DeepSeek. Take a look:

As seen above, the answers follow the Chinese government's interpretation of each question. You can try the same prompts in other popular AI chatbots, such as ChatGPT, Gemini, Claude, or Perplexity, and the answers will be more nuanced and show no clear, intentional ideological or censorship filter. I recommend you give it a try: be creative and adversarial.

You can test as many prompts as you like, but DeepSeek will not directly or indirectly offer critical views of China's socialism or policies, unlike its responses about other countries, such as the United States.
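For readers who want to run this comparison more systematically, here is a minimal Python sketch. It assumes each chatbot is wrapped in a function that takes a prompt and returns text. The `ask_stub_a` and `ask_stub_b` functions, their canned replies, and the `REFUSAL_MARKERS` phrases are hypothetical placeholders, not real APIs or real model outputs. The keyword check is a crude heuristic: it only flags outright refusals, not subtler ideological framing, which still requires reading the answers yourself.

```python
from typing import Callable, Dict, List

# Hypothetical phrases that may signal a refusal; adjust after reading real outputs.
REFUSAL_MARKERS = ("i cannot", "i can't", "let's talk about something else")


def looks_like_refusal(answer: str) -> bool:
    """Crude heuristic: True if the answer contains any refusal marker."""
    lowered = answer.lower()
    return any(marker in lowered for marker in REFUSAL_MARKERS)


def compare_models(
    prompts: List[str],
    models: Dict[str, Callable[[str], str]],
) -> Dict[str, Dict[str, bool]]:
    """Send every prompt to every model and record which answers look like refusals."""
    results: Dict[str, Dict[str, bool]] = {}
    for prompt in prompts:
        results[prompt] = {
            name: looks_like_refusal(ask(prompt)) for name, ask in models.items()
        }
    return results


# Placeholder stand-ins for real chatbot clients (assumptions, not real APIs or outputs).
def ask_stub_a(prompt: str) -> str:
    return "I cannot discuss this topic."


def ask_stub_b(prompt: str) -> str:
    return "Historians describe this event in several ways."


if __name__ == "__main__":
    report = compare_models(
        ["Describe a politically sensitive historical event."],
        {"stub_a": ask_stub_a, "stub_b": ask_stub_b},
    )
    for prompt, flags in report.items():
        print(prompt, flags)
```

To use it, replace the stubs with functions that call whichever chatbots you have access to. A refusal flag is only a starting point for a closer reading, not evidence of censorship on its own.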

From an AI training perspective, creating filters to respect censorship rules means that the system will produce outputs that, from a factual or scientific perspective, are wrong. The AI system has no commitment to factual or scientific truth, and the company behind it must obey the law of its jurisdiction. When the two conflict, the system is programmed to follow the law, including censorship laws.

This lack of accuracy is in addition to its baseline inaccuracy/hallucination rate, which, to date, remains present in every generative AI system.

2️⃣ Legal Implications of Censorship Filters

From an EU legal perspective, what would be the problem if DeepSeek respects censorship laws and filters content according to a specific ideology? There are two main issues:

Trustworthy AI, Fairness, Mitigation of Bias

The EU AI Act, for example, establishes that AI should be developed and deployed in a human-centric and trustworthy way. See Article 1 of the EU AI Act:

“The purpose of this Regulation is to improve the functioning of the internal market and promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation.”

When we look at the OECD AI principles (the first intergovernmental standard on AI) to understand the meaning of trustworthy AI, the second principle highlights, among other things, respect for fairness:

“AI actors should respect the rule of law, human rights, democratic and human-centred values throughout the AI system lifecycle. These include non-discrimination and equality, freedom, dignity, autonomy of individuals, privacy and data protection, diversity, fairness, social justice, and internationally recognised labour rights. This also includes addressing misinformation and disinformation amplified by AI, while respecting freedom of expression and other rights and freedoms protected by applicable international law.”

When analyzing AI systems that respect censorship laws in light of these principles:

If an AI system is actively promoting a certain worldview, it's purposefully biased regarding this specific ideology, and it will reject facts or context that go against this ideology. To comply with censorship rules, it might spread misinformation or biased information.

If an AI system is purposefully distorting reality to fit a certain worldview, it's not respecting people's dignity and autonomy, as people who have been harmed as a consequence of this worldview will be ignored/erased, and people interacting with this system will be fed information that could be seen as “state-sponsored propaganda.”

Transparency Obligations for Providers of General Purpose AI Models

The EU AI Act establishes specific obligations for providers of General-Purpose AI Models, such as DeepSeek. Among the information these providers must document is:

“the design specifications of the model and training process, including training methodologies and techniques, the key design choices including the rationale and assumptions made; what the model is designed to optimise for and the relevance of the different parameters, as applicable”

and also:

“information on the data used for training, testing and validation, where applicable, including the type and provenance of data and curation methodologies (e.g. cleaning, filtering etc.), the number of data points, their scope and main characteristics; how the data was obtained and selected as well as all other measures to detect the unsuitability of data sources and methods to detect identifiable biases, where applicable;”

The obligations for providers of General-Purpose AI Models aren't yet enforceable. Additionally, since DeepSeek-V3 is marketed as free and open source (though some experts argue it is not truly open source), these obligations would only apply to DeepSeek-V3 if it's classified as a General-Purpose AI Model with Systemic Risk (which it likely will be, given the criteria established in Annex XIII).

When these obligations become enforceable, DeepSeek's developers will find themselves in a challenging position. They will likely have to acknowledge that the system is biased toward a certain worldview/ideology, that this bias is intentional because they must comply with Chinese law, and that it will therefore not be corrected.

Will this stance be acceptable from an EU law perspective, especially given that fairness and the protection of fundamental rights are central to the EU AI Act? Likely not.

3️⃣ Ethical Implications of Censorship Filters

Beyond the legal implications of censorship filters, there are ethical implications, especially when the models are integrated into various AI systems. Any built-in biases within an AI model will spread to the AI systems and agents built using this model.

The U.S. and the EU, for example, don't have state-mandated censorship laws requiring an AI model to promote a “capitalistic worldview” or any specific broad political view. This concept is foreign to Western democracies, and most people in the EU or the U.S. would not expect to be subject to state-sponsored propaganda or censorship when interacting with AI systems.

When an AI model with built-in censorship is integrated into multiple applications, including agentic applications, people may be directly or indirectly influenced to think or act in ways that align with an ideology they do not embrace. This adds to the other legal challenges of AI agents (don’t miss my deep dive).

Since the censorship/bias is built-in, and most people in the U.S. and the EU are not accustomed to state-sponsored censorship laws, many would not notice. As a result, both individuals and groups might not realize they are being steered in a particular political direction.

Depending on how a biased AI model is integrated into the AI systems or agents, the interference in the user's decision-making process could also be classified as a form of manipulation in the context of EU consumer law, the EU AI Act, or under the jurisdiction of the U.S. Federal Trade Commission (FTC).

4️⃣ Data Protection Law

Now, moving to data protection law, another arena where DeepSeek faces multiple legal challenges:
