ChatGPT Pulse: The Worst of Two Worlds

OpenAI's new feature points to a dystopian future where privacy has no value, and where the negative implications of AI chatbots and social media merge | Edition #236

👋 Hi everyone, Luiza Jarovsky here. Welcome to the 236th edition of my newsletter, trusted by over 79,000 subscribers worldwide. It is great to have you here!

🎓 This is how I can support your learning and upskilling journey in AI:

Join my AI Governance Training [apply for a discounted seat here]

Meet peers and ask questions in my private Substack community

Discover your next read in AI and beyond with our AI Book Club

Receive our job alerts for open roles in AI governance and privacy

Sign up for weekly educational resources in our Learning Center

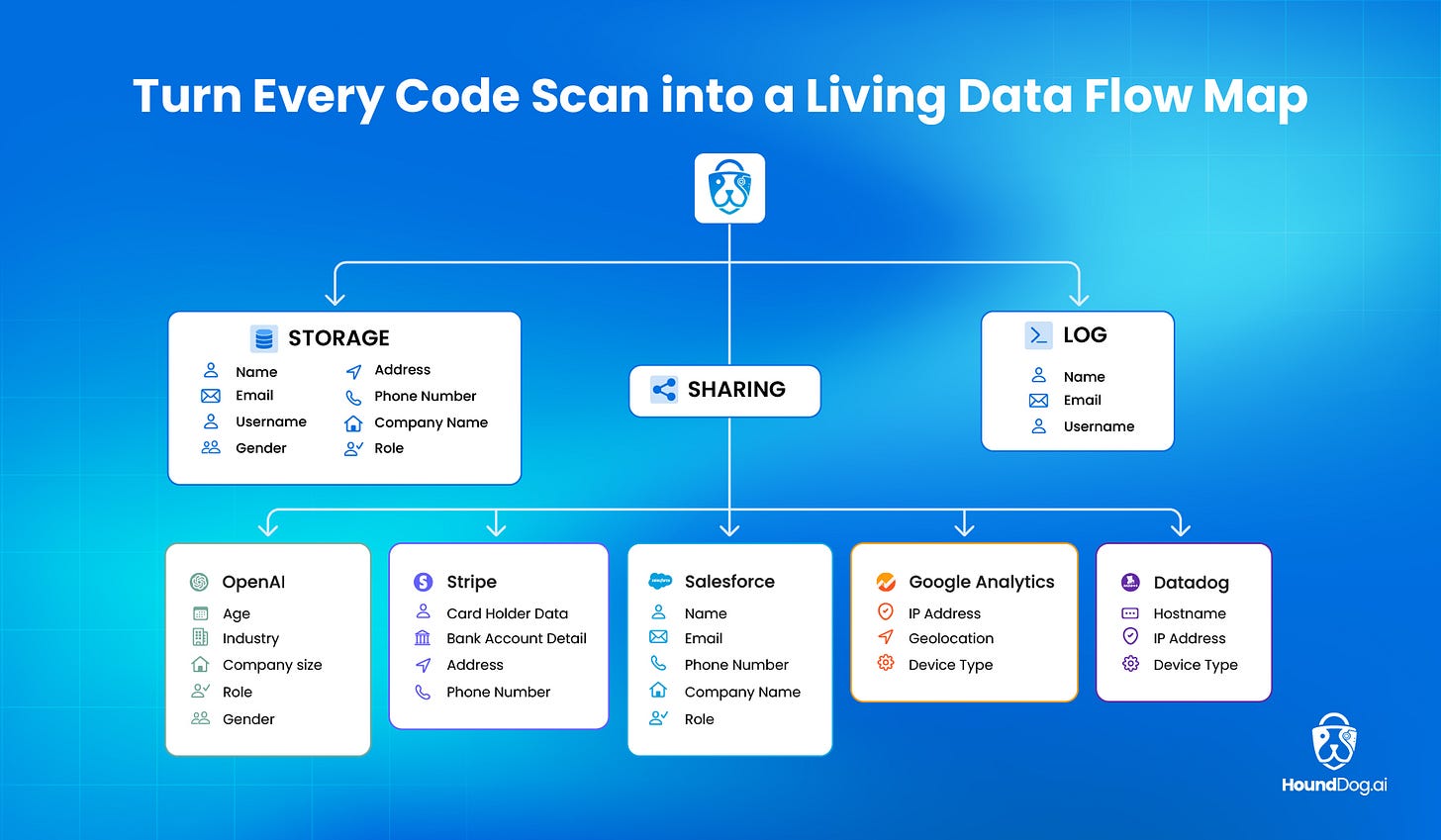

👉 A special thanks to HoundDog.ai, this edition's sponsor:

Still chasing app owners to document the latest data flows? Is your privacy platform struggling to keep up with fast development and missing hidden third-party and AI integrations? Try HoundDog.ai’s privacy-focused static code scanner to map sensitive data flows, generate audit-ready RoPAs and PIAs, and catch privacy risks before code is deployed.

To support us and reach over 79,000 subscribers, become a sponsor.

🔥 Before we start, here is my curation of the most important AI developments this week:

Sam Altman published an article titled “Teen safety, freedom, and privacy,” clarifying some of his company's internal safety policies, especially in light of recent tragedies such as Adam Raine's suicide. From my perspective, their “pro-freedom” approach is dangerous and detrimental to safety. OpenAI should immediately revise it, as it will likely lead to more cases of self-harm and suicide.

OpenAI released the paper “How People Use ChatGPT,” the largest study to date on consumer ChatGPT usage. It strategically obfuscates risky usage patterns, making legal oversight of AI chatbot-related harm more difficult.

Last week, the EU hosted a conference to assess its progress in implementing the recommendations of Draghi’s 2024 report. Draghi said that the EU’s growth model is collapsing and that drastic measures are needed, including a “radical simplification” of the GDPR and postponing the rules on high-risk AI.

A new EU Briefing highlights the real reason the EU wants to simplify the GDPR and postpone the AI Act, and it has nothing to do with the content of these regulations [hint: it is geopolitics].

Disney has sued the Chinese AI company MiniMax for copyright infringement, and the lawsuit gives us a taste of the geopolitical AI race going on between the U.S. and China.

If you don’t want LinkedIn to use your personal data to train its generative AI models, here’s how you can opt out.

Meta has launched Vibes, “a new feed in the Meta AI app for short-form, AI-generated videos.” I couldn’t finish the teaser, but maybe it’s because I’m too old for that.

Italy enacted its AI law! After reviewing its 28 articles, I found that ten provisions stood out, as they reflect Italy's main priorities and concerns regarding AI.

Spotify announced its new policies on impersonation, music spam filtering, and AI disclosures for music, and they will likely shape the future of AI governance in the music industry.

My unpopular opinion is that most people do not want to wear computers on their eyes. But I am glad Meta’s live demo of its AI glasses went well (or not). Watch.

The UN has launched an open call to serve on the UN Independent International Scientific Panel on AI. Apply here.

A growing number of people are developing intimate relationships with AI chatbots. I am deeply pessimistic about the long-term consequences, especially as AI chatbots are highly sycophantic and constantly validate the user's feelings and opinions (human relationships do not work that way).

Singapore is launching a mandatory AI literacy course for all its public servants, who already have over 16,000 custom AI bots to automate tasks. Their AI governance approach balances innovation and AI literacy and is an interesting example to learn from.

Hundreds of prominent figures have signed an urgent call for international red lines to prevent unacceptable AI risks. You can sign it here.

South Korea’s president wants to “eliminate the spiderweb of regulations” to support AI development. Pioneered by Washington, the global deregulatory movement in AI is growing.

Now, back to today’s main story:

ChatGPT Pulse: The Worst of Two Worlds

Yesterday, OpenAI launched ChatGPT Pulse, which Sam Altman says points to the future of ChatGPT:
