✨ AI Meets the Brussels Effect

AI Governance Must-Reads | Edition #159

👋 Hi, Luiza Jarovsky here. Welcome to the 159th edition of this newsletter on the latest developments in AI policy, compliance, and regulation, read by 44,600+ subscribers in 160+ countries. I hope you enjoy reading it as much as I enjoy writing it. This is the last edition of 2024. Happy New Year!

🛣️ Step into 2025 with a new career path! In January, join me for a three-week intensive AI Governance Training (eight live sessions; 12 hours total), already in its 16th cohort. Join over 1,000 professionals who have benefited from our programs: don't miss it! Students, NGO members, and professionals in career transition can request a discount.

✨ AI Meets the Brussels Effect

South Korea has become the second jurisdiction in the world, after the EU, to enact a comprehensive AI law, drawing significant inspiration from the EU AI Act. Here's what you need to know, including how the Brussels Effect is already shaping AI governance and regulation:

➡️ South Korea's AI law, officially titled the "Basic Law on AI Development and Trust-Based Establishment," was recently approved by the National Assembly with 260 votes in favor, 1 against, and 3 abstentions.

➡️ Here are some of the main characteristics of the new law, according to the official summary:

→ It defines AI, high-impact AI, generative AI, AI ethics, and AI business operators (Article 2).

→ AI business operators who provide products or services that use high-impact AI or generative AI must notify users of this in advance. Additionally, when providing generative AI or products or services utilizing it, they must indicate that the results were generated by generative AI. If virtual results created by an AI system are difficult to distinguish from reality, operators must clearly notify or indicate this fact to users (Article 31).

→ AI business operators must implement measures such as risk identification, assessment, and mitigation to ensure the safety of AI systems when the cumulative computational resources used for training exceed the threshold prescribed by Presidential Decree (Article 32).

→ AI business operators must implement measures to ensure safety and reliability when providing high-impact AI or products or services utilizing such systems (Article 34).

→ If the Minister of Science and ICT discovers or suspects a violation of the law, they may request that the AI business operator submit relevant materials or allow public officials to conduct the necessary investigation. If a violation is confirmed, they may order appropriate measures to stop or correct the violation (Article 40).

➡️ Among the main similarities with the EU AI Act, I would highlight:

→ The risk-based approach (with stricter obligations for high-risk/high-impact AI systems);

→ Focus on ethical guidelines/trustworthy AI;

→ Protection of fundamental rights;

→ Transparency obligations (e.g., regarding deepfakes and anthropomorphism, similar to Article 50 of the EU AI Act);

→ Provisions on standardization;

→ The establishment of oversight bodies and procedures.

➡️ What is the Brussels Effect?

→ The phrase was coined by Prof. Anu Bradford in 2012 and is also the title of one of her books (“The Brussels Effect: How the European Union Rules the World”). According to Bradford:

“the EU remains an influential superpower that shapes the world in its image. By promulgating regulations that shape the international business environment, elevating standards worldwide, and leading to a notable Europeanization of many important aspects of global commerce, the EU has managed to shape policy in areas such as data privacy, consumer health and safety, environmental protection, antitrust, and online hate speech. And in contrast to how superpowers wield their global influence, the Brussels Effect (…) absolves the EU from playing a direct role in imposing standards, as market forces alone are often sufficient as multinational companies voluntarily extend the EU rule to govern their global operations.”

➡️ Is the Brussels Effect influencing AI?

I've been actively discussing this topic over the last few months, including in some of my monthly live talks (such as recent ones with Raymond Sun and Gary Marcus). Using South Korea as a case study (or Brazil, which I analyzed a few weeks ago), my take is that, yes, the Brussels Effect is indeed happening.

For many countries, it's easier and faster to import EU rules on a heavyweight (and controversial) topic like AI regulation. The EU spent years discussing and developing its AI regulation, and these rules already have global influence, as their territorial scope extends beyond the EU (see Art. 2 of the EU AI Act). In my view, more countries will follow suit, and the Brussels Effect will continue to unfold in 2025.

🛣️ Step Into 2025 with a New Career Path

If you are dealing with AI-related challenges at work, don't miss our acclaimed live online AI Governance Training—now in its 16th cohort—and start the new year ready to excel.

This January, we’re offering a special intensive format for participants in Europe and Asia-Pacific: all 8 sessions (12 hours of live learning with me) condensed into just 3 weeks, allowing participants to catch up with recent developments and upskill.

→ Our unique curriculum, carefully curated over months and constantly updated, focuses on the legal and ethical aspects of AI governance, helping you elevate your career and stay competitive in this emerging field.

→ Over 1,000 professionals from 50+ countries have benefited from our programs, and alumni consistently praise their experience—check out their testimonials. Students, NGO members, and people in career transition can request a discount.

→ Are you ready? Register today to secure your spot before the cohort fills up:

*If this is not the right time, join our Learning Center to receive AI governance professional resources and updates on training programs and live sessions.

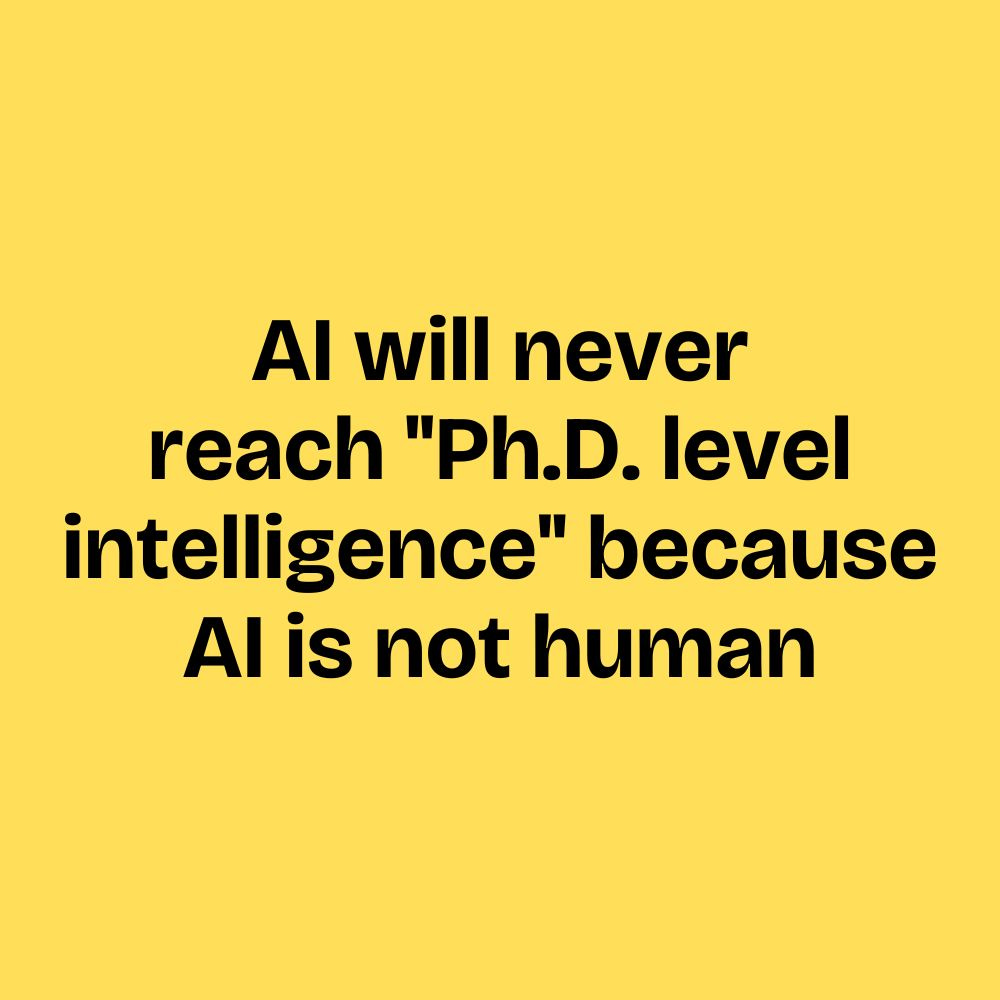

💬 Unpopular Opinion

➡️ Share your thoughts on LinkedIn, X/Twitter, Bluesky, or Substack.

🏛️ Illinois Supreme Court Policy on AI

The Supreme Court of Illinois published its official AI policy (effective January 1st, 2025), and it adopts an extremely lenient approach to AI use; here's why:

➡️ The Supreme Court of Illinois chose a lenient approach to transparency and to the relevant parties' right to know whether they are affected by AI outputs and outcomes, effectively neglecting responsible AI principles. Take a look at this paragraph:

"The use of AI by litigants, attorneys, judges, judicial clerks, research attorneys, and court staff providing similar support may be expected, should not be discouraged, and is authorized provided it complies with legal and ethical standards. Disclosure of AI use should not be required in a pleading."

➡️ They highlight that:

→ The use of AI may be expected;

→ It should not be discouraged;

→ It is authorized (provided it complies with 'legal & ethical standards');

→ Disclosure of AI use should not be required in a pleading.

╰┈➤ There are 4 main problems:

1️⃣ With this policy, they are:

→ Encouraging the use of AI in legal work and judicial proceedings;

→ Avoiding basic transparency standards and minimum thresholds, referring only broadly to legal and ethical standards.

2️⃣ Law and legal procedure deserve higher transparency standards

→ Transparency is a basic ethical and legal requirement in the context of AI use;

→ AI use in law and legal procedure is sensitive, as it can directly impact fundamental rights. Transparency requirements here should be at least as strict as those established in Art. 50 of the EU AI Act and, given the potential harm to fundamental rights, arguably stricter.

3️⃣ This policy ignores the OECD AI Principles, which state that transparency and explainability are core AI principles:

"AI Actors should commit to transparency and responsible disclosure regarding AI systems. To this end, they should provide meaningful information, appropriate to the context, and consistent with the state of art:

→ to foster a general understanding of AI systems, including their capabilities and limitations;

→ to make stakeholders aware of their interactions with AI systems, including in the workplace;

→ where feasible and useful, to provide plain and easy-to-understand information on the sources of data/input, factors, processes and/or logic that led to the prediction, content, recommendation or decision, to enable those affected by an AI system to understand the output;

→ to provide information that enable those adversely affected by an AI system to challenge its output."

4️⃣ This AI policy might be replicated by courts in other U.S. states and in other countries, setting a negative precedent. Hopefully, it will be reviewed before it takes effect.

🏆 Top EU AI Act Guides

The EU AI Act entered into force this year, and everyone in AI should be familiar with it. Below are 5 excellent guides to help you learn more. Download, bookmark, and read before the year ends:

1️⃣ The William Fry AI Guide (William Fry LLP) - read

2️⃣ European Union Artificial Intelligence Act: a guide (Bird & Bird) - read

3️⃣ EU AI Act A Pioneering Legal Framework On Artificial Intelligence - Practical Guide (Cuatrecasas) - read

4️⃣ EU AI Act: Navigating a Brave New World (Latham & Watkins) - read

5️⃣ Decoding the EU Artificial Intelligence Act (KPMG) - read

🇺🇸 U.S.: AI Task Force Report

The U.S. House of Representatives published its AI Task Force Report, and it's a must-read for everyone in AI. Bookmark and download it here. Important highlights:

╰┈➤ What's the vision behind this report?

"This report articulates guiding principles, 66 key findings, and 89 recommendations, organized into 15 chapters. It is intended to serve as a blueprint for future actions that Congress can take to address advances in AI technologies. The Task Force members feel strongly that Congress must develop and maintain a thoughtful long-term vision for AI in our society. This vision should serve as a guide to the many priorities, legislative initiatives, and national strategies we undertake in the years ahead."

╰┈➤ What are the principles that will frame AI policymaking in the U.S.?

→ Identify AI Issue Novelty

→ Promote AI Innovation

→ Protect Against AI Risks and Harms

→ Empower Government with AI

→ Affirm the use of a Sectoral Regulatory Structure

→ Take an Incremental Approach

→ Keep Humans at the Center of AI Policy

╰┈➤ Any comments on AI and privacy?

Yes. In the context of the intersection between AI and privacy, the report identifies six privacy harms from AI: physical, economic, emotional, reputational, discrimination, and autonomy (I would say very much inspired by Daniel Solove and Danielle Citron's seminal paper on the topic). It then adds these two recommendations:

1️⃣ Explore mechanisms to promote access to data in privacy-enhanced ways:

"Access to privacy-enhanced data will continue to be critical for AI development. The government can play a key role in facilitating access to representative data sets in privacy-enhanced ways, whether through facilitating the development of public datasets or the research, development, and demonstration of privacy-enhancing technologies or synthetic data. Congress can also support partnerships to improve the design of AI systems that consider privacy-by-design and utilize new privacy-enhancing technologies and techniques."

2️⃣ Ensure privacy laws are generally applicable and technology-neutral:

"Congress should ensure that privacy laws in the United States are technology-neutral and can address many of the most salient privacy concerns with respect to the training and use of advanced AI systems. Congress should also ensure that general protections are flexible to meet changing concerns and technology and do not inadvertently stymie AI development."

🎙️ AI and Copyright Infringement

AI copyright lawsuits are piling up, and companies are racing to adapt. Is there a way to protect human creativity in the age of AI? You can't miss my first live talk of 2025; here’s why:

I invited Dr. Andres Guadamuz, associate professor of Intellectual Property Law at the University of Sussex, editor-in-chief of the Journal of World Intellectual Property, and an internationally acclaimed IP expert, to discuss:

- Copyright infringement in the context of AI development and deployment;

- The legal debate unfolding in the EU and U.S.;

- Potential solutions;

- Protecting human creativity in the age of AI.

If you are an AI enthusiast, passionate about copyright, or determined to ensure human creativity thrives in the age of AI, I invite you to join us live for this unmissable conversation!

👉 To join the live session, register here.

🎬 Find all my previous live conversations with privacy and AI governance experts on my YouTube Channel.

🔔 AI Companies: The GDPR Is Knocking

If you're interested in AI, be sure to check out my analysis of relying on legitimate interest as a legal basis for AI development and deployment, GDPR compliance, and why the EDPB's Opinion 28/2024 is a game-changer for AI companies.

👉 Read the preview here. If you're not a paid subscriber, upgrade your subscription to access all previous and future analyses in full.

🔥 Job Opportunities in AI Governance

Below are 10 new AI Governance positions posted in the last few days. This is a competitive field: if it's a relevant opportunity, apply today:

🇺🇸 Xerox: Generative AI Governance & Process Manager - apply

🇧🇪 People-Powered Agency: Human-AI Governance - apply

🇺🇸 Dropbox: Senior AI Governance Program Manager - apply

🇨🇭 AXA: Werkstudent in AI Governance - apply

🇮🇹 EY: AI Governance - apply

🇦🇺 Optus: Director, Responsible AI & Capability - apply

🇸🇬 GovTech Singapore: AI Engineer, Responsible AI - apply

🇺🇸 Amazon: Principal Applied Scientist, Responsible AI - apply

🇮🇳 Accenture: Responsible AI Tech Lead - apply

🇺🇸 TikTok: Research Scientist, Responsible AI - apply

🔔 More job openings: subscribe to our AI governance and privacy job boards to receive weekly job opportunities. Good luck!

🙏 Thank you for reading!

AI is more than just hype—it must be properly governed. If you found this edition valuable, consider sharing it with friends and colleagues and help spread awareness about AI policy, compliance, and regulation. Thank you!

Happy New Year!

Luiza