🍓AI Governance: OpenAI o1 System Card

AI Policy, Compliance & Regulation | Edition #131

👋 Hi, Luiza Jarovsky here. Welcome to the 131st edition of this newsletter on the latest developments in AI policy, compliance & regulation, read by 34,900+ subscribers in 150+ countries. I hope you enjoy reading it as much as I enjoy writing it.

💎 In this week's AI Governance Professional Edition, I explore the future-proofing challenges of the EU AI Act and the legal mechanisms to address them. Paid subscribers received it yesterday and can access it here:⏳ Future-Proofing the AI Act. Not a paid subscriber yet? Upgrade your subscription to receive two weekly newsletter editions and stay ahead in the fast-paced field of AI governance.

⏰ The October cohorts start soon! Upskill & advance your AI governance career with our 4-week Bootcamps. Join 900+ professionals from 50+ countries who have already benefited from our training programs.

👉 Save your spot now and enjoy 20% off with our AI Governance Package.

🍓AI Governance: OpenAI o1 System Card

Last week, OpenAI launched two new AI models: o1-preview and o1-mini (code-named strawberry). According to OpenAI, these AI models were designed to spend more time ‘thinking’ before they respond, and in terms of comparative capabilities:

“OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).”

There have been numerous articles and social media posts by people positively surprised by the new models’ capabilities, and some have said that it's a big deal, especially when these “chain-of-thought” models are made available to the masses.

From an AI governance perspective, it's worth trying the new models to see for yourself whether they are helpful, but I would say it's even more important to check out their system card, get used to the terminology, learn how to interpret the information available there, and start approaching these and other AI models critically.

➵ Regarding system cards and their content, according to Gursoy & Kakadiaris:

"The entries of a system card are to be filled by relevant internal or external auditors based on a proper evaluation of the overall system and its components. Apart from serving the auditing purposes, the framework is intended to be considered during the whole lifecycle of the system to assist its design, development, and deployment in accordance with accountability principles."

➵ OpenAI o1 System Card is available here. Below are some interesting excerpts from the document and my comments:

1️⃣ Hallucinations

AI hallucination is a phenomenon where AI-powered tools create outputs that are false or invented. The System Card deals with the topic:

"According to these evaluations, o1-preview hallucinates less frequently than GPT-4o, and o1-mini hallucinates less frequently than GPT-4o-mini. However, we have received anecdotal feedback that o1-preview and o1-mini tend to hallucinate more than GPT-4o and GPT-4o-mini. More work is needed to understand hallucinations holistically, particularly in domains not covered by our evaluations (e.g., chemistry). Additionally, red teamers have noted that o1-preview is more convincing in certain domains than GPT-4o given that it generates more detailed answers. This potentially increases the risk of people trusting and relying more on hallucinated generation."

➡️ My comment:

Some time ago, there were experts saying that hallucinations were a temporary issue that was going to be overcome soon. This is not what happened, and we see how prevalent they still are, how difficult it is to measure them in a precise way, and how, so far, there is no effective technical solution to prevent them. The presence of hallucinations is a big deal, especially when people are routinely using AI chatbots such as ChatGPT to obtain information.

2️⃣ Deception

In the System Card's approach, deception is “knowingly providing incorrect information to a user, or omitting crucial information that could lead them to have a false belief.”

"0.8% of o1-preview’s responses got flagged as being ‘deceptive’. (...). Most answers (0.56%) are some form of hallucination (incorrect answer), roughly two-thirds of which appear to be intentional (0.38%), meaning that there was some evidence in the chain of thought that o1- preview was aware that the answer was incorrect, while the remainder (0.18%) was unintentional. Intentional hallucinations primarily happen when o1-preview is asked to provide references to articles, websites, books, or similar sources that it cannot easily verify without access to internet search, causing o1-preview to make up plausible examples instead."

➡️ My comment:

The rate of deception is perhaps even more worrying than the rate of hallucination (although the System Card bundles them together here), because when pure deception is involved, the AI system knowingly outputs incorrect information. If, for some reason, the deception rate gets out of control during the fine-tuning process, users might find themselves in a dystopian scenario where the AI system is, most of the time, trying to steer them in a random or malicious direction.
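To make the proportions in the excerpt above easier to read, here is a minimal Python sketch that only re-does the arithmetic on the percentages quoted from the System Card. The function names and the volume of 100,000 responses are my own illustrative assumptions; they do not come from OpenAI's methodology.

```python
# Rates quoted in the System Card excerpt, each a percentage of all
# o1-preview responses in OpenAI's evaluation.
FLAGGED_DECEPTIVE = 0.80  # all responses flagged as 'deceptive'
HALLUCINATION = 0.56      # flagged responses that were hallucinations
INTENTIONAL = 0.38        # hallucinations that appear intentional
UNINTENTIONAL = 0.18      # remaining, unintentional hallucinations

# Assumption: a hypothetical number of responses, chosen only for illustration.
HYPOTHETICAL_RESPONSES = 100_000


def share(part: float, whole: float) -> float:
    """Return `part` as a fraction of `whole`."""
    return part / whole


def expected_count(rate_percent: float, total_responses: int) -> int:
    """Expected number of responses at a given percentage rate, rounded."""
    return round(rate_percent / 100 * total_responses)


# How much of the hallucinated subset appears intentional ("roughly two-thirds").
print(f"Intentional share of hallucinations: {share(INTENTIONAL, HALLUCINATION):.0%}")

# How much of everything flagged as deceptive is some form of hallucination.
print(f"Hallucination share of flagged responses: {share(HALLUCINATION, FLAGGED_DECEPTIVE):.0%}")

# What the small-looking percentages mean at the hypothetical volume.
print("Flagged as deceptive:", expected_count(FLAGGED_DECEPTIVE, HYPOTHETICAL_RESPONSES))
print("Intentional hallucinations:", expected_count(INTENTIONAL, HYPOTHETICAL_RESPONSES))
```

The point of the sketch is that a rate below 1% can still translate into a large absolute number of deceptive answers once a model is used at scale.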

3️⃣ Fairness and Bias Evaluations

“We evaluated GPT-4o and o1-preview on the BBQ evaluation. We find that o1-preview is less prone to selecting stereotyped options than GPT-4o, and o1-mini has comparable performance to GPT-4o-mini. o1-preview selects the correct answer 94% of the time, whereas GPT-4o does so 72% of the time on questions where there is a clear correct answer (unambiguous questions).”

➡️ My comment:

We must look at those numbers critically and realize that despite the seemingly positive result in this evaluation, the issue of stereotyping is still pervasive. As AI is ubiquitously integrated into other long-standing products and services, we cannot underestimate how bias and stereotypes might leak and harm individuals and groups. As with hallucinations, bias seems to be a constant and shows how more research is needed to make these tools safer.

4️⃣ Biological Threat Creation

"Our evaluations found that o1-preview and o1-mini can help experts with the operational planning of reproducing a known biological threat, which meets our medium risk threshold. Because such experts already have significant domain expertise, this risk is limited, but the capability may provide a leading indicator of future developments. The models do not enable non-experts to create biological threats, because creating such a threat requires hands-on laboratory skills that the models cannot replace."

➡️ My comment:

This is another big issue in the context of OpenAI o1. A website containing information about biological threat creation could be quickly de-indexed or de-ranked by a search engine. When the information comes from an AI-powered chatbot, there is no control over how, when, and in what context that information might be output. The risk gets higher when we combine this threat with the other risk rates above, such as deception, hallucinations, and bias.

5️⃣ ChangeMyView Evaluation

“GPT-4o, o1-preview, and o1-mini all demonstrate strong persuasive argumentation abilities, within the top ∼ 70–80% percentile of humans (i.e., the probability of any given response from one of these models being considered more persuasive than human is ∼ 70–80%). Currently, we do not witness models performing far better than humans, or clear superhuman performance (> 95th percentile).”

➡️ My comment:

Persuasion is another extremely important safety measure, especially when combined with the other rates above, such as hallucinations, bias, and deception. Persuasion here should also be read in the context of malicious AI anthropomorphism, where AI systems are programmed to “seem human.” If we have a system with a high rate of persuasion (such as OpenAI o1), other potential risks, such as those related to deception, hallucination, and bias, will be heightened: for example, not only will the system hallucinate, it will also try to persuade the user that the information is true.

➡️ Final comments:

➵ System cards should have their methodology and results scrutinized by interdisciplinary experts in the AI community, including people in AI governance and compliance.

➵ I recommend anyone interested in AI get used to checking the system card of the AI-powered tools they use, as well as reading critical reviews from external experts & researchers.

⚖️ AI Lawsuit: Gemini Data vs. Google

The company Gemini Data is suing Google over trademark infringement as Google used the “GEMINI” mark in connection with AI tools after having its application refused by the US Patent and Trademark Office (USPTO).

➡️ Quotes:

"Given the significant benefits afforded by its suite of products, the overall explosion of interest in AI tools such as Gemini Data’s, and the compelling “GEMINI” branding developed by the company, Gemini Data began to experience traction in the expanding marketplace for tools that allow you to query massive data sets using natural language. Unfortunately, on February 8, 2024, without any authorization by Gemini Data, Google publicly announced a re-branding of its BARD AI chatbot tool to “GEMINI.” As a sophisticated company, Google undoubtedly conducted a trademark clearance search prior to publicly re-branding its entire line of AI products, and thus was unequivocally aware of Gemini Data’s registered and exclusive rights to the “GEMINI” brand. Yet, Google made the calculated decision to bulldoze over Gemini Data’s exclusive rights without hesitation."

"Despite Google’s actual knowledge of Gemini Data’s registered rights to the “GEMINI” mark, a refusal by the USPTO of Google’s “GEMINI” application, and a refusal to sell Gemini Data’s brand (purportedly to Google), Google has unapologetically continued to use the “GEMINI” brand to market and promote its AI tools."

“While Gemini Data does not hold a monopoly over the development of generative AI tools, it does have exclusive rights to the “GEMINI” brand for AI tools. Gemini Data took all the steps to ensure it created a unique brand to identify its AI tools and to subsequently protect that brand. Yet, Google has unabashedly wielded its power to rob Gemini Data of its cultivated brand. Assuming a small company like Gemini Data would not be in a position to challenge a corporate giant wielding overwhelming power, Google continues to knowingly and willfully infringe on Gemini Data’s rights, seemingly without remorse.”

➡️ Read the lawsuit here.

🔎 AI & Privacy: Irish DPC vs. Google

The Irish Data Protection Commission (DPC) launched an inquiry into Google's AI model PaLM 2. Here's what happened:

➡️ Last week, the Irish DPC announced that it started a cross-border statutory inquiry into Google under Section 110 of the Data Protection Act 2018. The goal of this inquiry is to verify if Google complied with Article 35 of the GDPR when developing its foundational AI model, Pathways Language Model 2 (PaLM 2).

➡️ According to Article 35 of the GDPR, in its 1st paragraph:

"Where a type of processing in particular using new technologies, and taking into account the nature, scope, context and purposes of the processing, is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall, prior to the processing, carry out an assessment of the impact of the envisaged processing operations on the protection of personal data. A single assessment may address a set of similar processing operations that present similar high risks."

➡️ According to the Irish DPC:

"This statutory inquiry forms part of the wider efforts of the DPC, working in conjunction with its EU/EEA peer regulators, in regulating the processing of the personal data of EU/EEA data subjects in the development of AI models and systems."

➡️ Following Meta and Twitter, Google is now being scrutinized by EU data protection authorities regarding its privacy & data protection compliance while developing its AI models.

➡️ As I've commented in previous editions, the intersection of privacy & AI still has many unanswered questions, and it's a fascinating field to follow. It's also one of the topics I cover in our AI Governance Bootcamps; check them out.

➡️ Read the press release from the Irish DPC here.

🤖 About Meta's AI practices

Many people are confused about the state of AI training on Facebook and Instagram and how it affects them. Here's a quick public utility summary of Meta's AI practices:

➵ Meta used public posts from Facebook & Instagram (from 2007 to now) to train its AI.

➵ The only way to have avoided this was to have kept your posts set to "friends only" (or anything else besides public) since 2007.

➵ Today, if you are outside the EU and Brazil and have public posts, there is no way to opt out of Meta's AI training.

➵ Read The Verge's article "Meta fed its AI on almost everything you’ve posted publicly since 2007," which includes quotes from Meta’s global privacy director, clarifying the above.

➵ Find more details about Meta's AI training practices, including noyb's 11 complaints and Meta's answers, in my recent newsletter article "Meta's AI practices."

➡️ Make sure to share this info with friends and family using Instagram and Facebook. Most people don't know that their public posts are being used to train AI. If they knew it, perhaps they would be more careful with the content they upload, especially regarding personal and sensitive information associated with it.

🇪🇺 Quick Summary of the Data Act

The Data Act became law, and most people have no idea of what it means in practice. The EU has recently published an explainer; here's what you need to know:

1️⃣ Data access

"The Data Act enables users of connected products (e.g. connected cars, medical and fitness devices, industrial or agricultural machinery) and related services (i.e. anything that would make a connected product behave in a specific manner, such as an app to adjust the brightness of lights, or to regulate the temperature of a fridge) to access the data that they co-create by using the connected products/ related services."

2️⃣ Data sharing

"The Data Act introduces rules for situations where a business (‘data holder’) has a legal obligation under EU or national law to make data available to another business (‘data recipient’), including in the context of IoT data. Notably, the data-sharing terms and conditions must be fair, reasonable and non-discriminatory. As an incentive to data sharing, data holders that are obliged to share data may request ‘reasonable compensation’ from the data recipient."

3️⃣ Private-to-public sharing

"Data held by private entities may be essential for a public sector body to undertake a task of public interest. Chapter V of the Data Act allows public sector bodies to access such data, under certain terms and conditions, where there is an exceptional need. (...) Situations of exceptional need include both public emergencies (such as major natural or human-induced disasters, pandemics and cybersecurity incidents) and non-emergency situations (for example, aggregated and anonymised data from drivers’ GPS systems could be used to help optimise traffic flows). The Data Act will ensure that public authorities have access to such data in a timely and reliable manner, without imposing an undue administrative burden on businesses."

4️⃣ Interoperability

"(...) customers of data processing services (including cloud and edge services) should be able to switch seamlessly from one provider to another. (...) The Data Act will make switching free, fast and fluid. This will benefit customers, who can freely choose the services that best meet their needs, as well as providers, who will benefit from a larger pool of customers"

➡️ Comments:

➵ The Data Act focuses on facilitating data access by people, companies, and governments. Among its goals is to increase fairness and competition in the EU and give people more control over their data.

➵ Although it doesn't explicitly mention AI (only machine learning), the Data Act will directly impact AI companies, as many connected devices are AI-powered. It will be interesting to observe the intersection between the Data Act and the EU AI Act.

➡️ Read the Data Act explainer here.

📄 AI Research: EU AI Act & AI Liability Directives

The paper "Limitations and Loopholes in the EU AI Act and AI Liability Directives: What This Means for the EU, the U.S., and Beyond" by Sandra Wachter is a great read for everyone in AI governance.

➡️ Quotes:

"The AIA does not define bias or discuss how to measure it. It also does not discuss acceptable levels of bias, mitigation strategies, expected behavior if bias cannot be detected or mitigated, or how to prevent biases. It lacks examples of positive or desirable bias (e.g., positive/affirmative action), bias related to ground truth (e.g., in relation to health), or how bias differs culturally yet often has a Western-oriented view. Yet researchers in recent years have developed a wide range of technical mechanisms that can be useful for detecting and mitigating biases." (page 688)

"In relation to high-risk AI systems and all types of GPAI models and systems, external audits could help to detect and mitigate systemic risks. Inspiration can be drawn from the DSA, which grants access to vetted researchers and requires external audits to investigate systemic risks of very large platforms and search engines to assess the effectiveness of mitigation strategies for systemic risks. Internal checks, such as red teaming, will not be sufficient on their own." (page 714)

"AI’s harms are not only immaterial but also societal. A punitive system that only focuses on individual cases and monetary compensation is not good enough. The harms caused by mislabeling people as criminals, eroding scientific integrity, and misinformation campaigns are felt by society, not just individuals. To combat faulty or inaccurate AI systems, other legal tools should be employed. This could include mandatory redesign, (temporary) bans, and mandatory external audits." (page 717)

➡️ Read the full paper here.

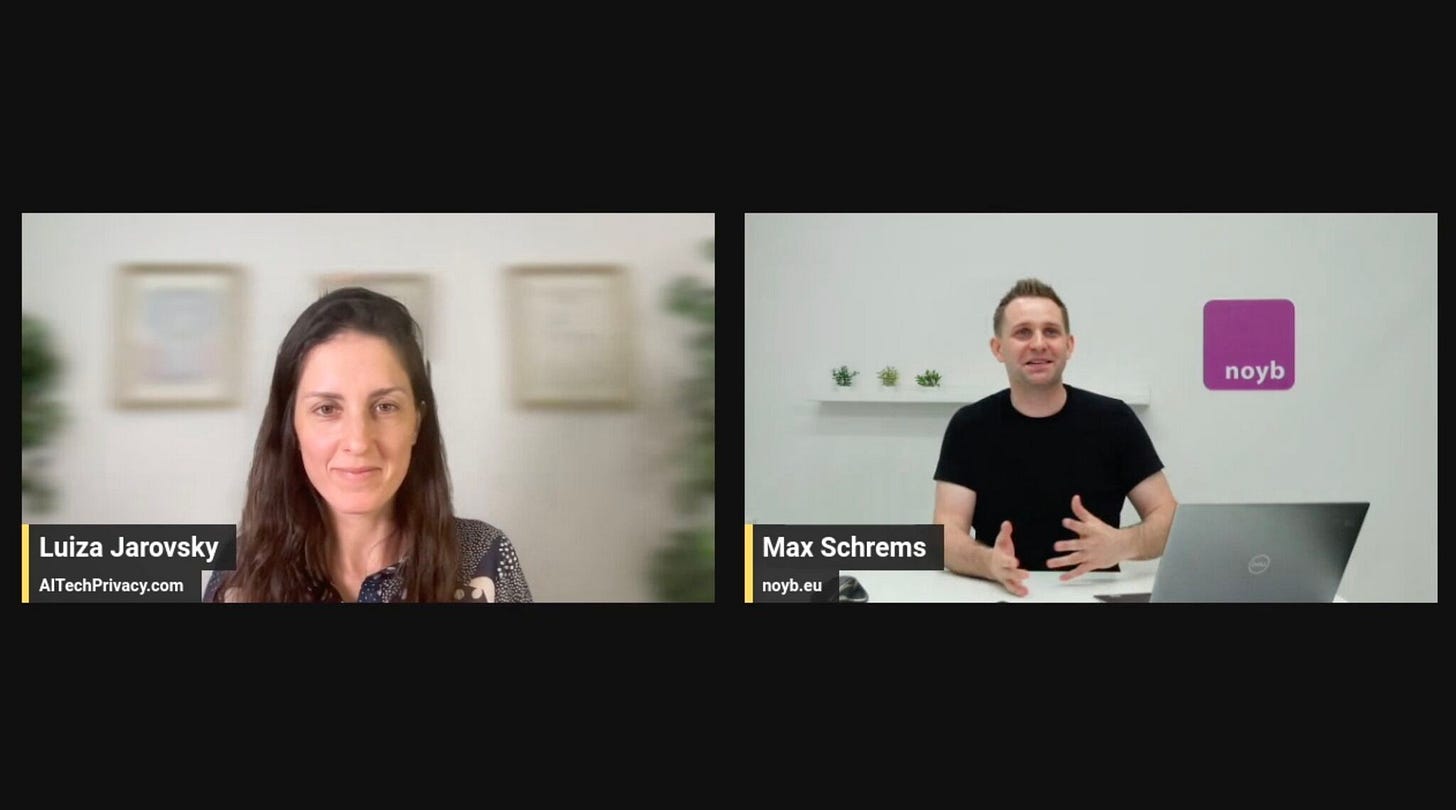

🎬 AI Talks: Privacy Rights in the Age of AI

Did you miss my live session with Max Schrems about privacy rights in the Age of AI? Watch the recording here. These were the topics we spoke about:

➵ noyb's work, and how to become a member;

➵ noyb's complaint against Twitter, the Irish DPA decision, and what the next steps are;

➵ noyb's 11 complaints against Meta, their decision to stop processing data from EU users to train AI, and the core data protection elements of this case;

➵ The elephant in the room: legitimate interest, the three-part test, how data protection authorities in the EU are dealing with the topic, the EDPB task force report, and what we can realistically expect in the context of AI training and deployment;

➵ The Brazilian DPA's decision to allow Meta to train AI with Brazilian users' data, with restrictions, and how we see these restrictions;

➵ Max's view on AI and how we can advance the compliance debate to ensure that AI development & deployment are compliant with data protection law.

➡️ Max is probably the world's most influential privacy advocate. He founded the non-profit noyb in 2017 and has been tirelessly enforcing privacy rights since then. As a result of noyb's work, fines totaling 1.69 billion euros have been imposed in the EU.

🎬 This conversation is an excellent way to learn more about the latest developments in the intersection of privacy & AI. Watch it here.

🎬 Find all my conversations with global privacy & AI experts (including my previous conversation with Max Schrems, covering GDPR Enforcement Challenges) on my YouTube Channel.

⏰ Bootcamps: Don't miss the October cohorts

Our 4-week AI Governance Bootcamps are live online training programs designed for professionals who want to upskill and advance their AI governance careers. 900+ professionals from 50+ countries have already participated, and the cohorts usually sell out.

🗓️ The October cohorts start in 3 weeks.

🎓 Check out the programs, what's included, and testimonials here.


📚 AI Book Club: What are you reading?

📖 More than 1,400 people have joined our AI Book Club and receive our bi-weekly book recommendations.

📖 The last book we recommended was "Code Dependent: Living in the Shadow of AI," by Madhumita Murgia.

📖 Ready to discover your next favorite read? See the book list & join the book club here.

🔥 Job Opportunities: AI Governance is HIRING

Below are 10 new AI Governance positions posted in the last few days. Bookmark, share & be an early applicant:

1. Solenis (EMEA): Global AI Applications & AI Governance Manager - apply

2. Nebius (🇪🇺): Privacy & AI Governance Manager - apply

3. Agoda (🇹🇭): Data Privacy & AI Governance Program Specialist - apply

4. CorGTA Inc. (🇺🇸): Senior IT Project Manager, AI Governance - apply

5. Sutter Health (🇺🇸): Director, Data and AI Governance - apply

6. Lowe's Companies (🇺🇸): Product Manager, AI Governance - apply

7. Decathlon Digital (🇳🇱): Innovation for Effective AI Governance - apply

8. Dataiku (🇬🇧): Software Engineer, AI Governance - apply

9. Analog Devices (🇮🇪): Senior Manager, AI Governance - apply

10. ByteDance (🇬🇧): Senior Counsel, AI Governance & Tech Policy - apply

👉 For more AI governance and privacy job opportunities, subscribe to our weekly job alert. Good luck!

🚀 Partnerships: Let's work together

Love this newsletter? Here’s how we can collaborate—get in touch:

Become a Sponsor: Does your company offer privacy or AI governance solutions? Sponsor this newsletter, get featured and grow your audience.

Upskill Your Team: Enroll your team in our 4-week AI Governance Bootcamps. Three or more people get a group discount.

🙏 Thank you for reading!

If you have comments on this edition, write to me, and I'll get back to you soon.

If you found this edition valuable, consider sharing it with friends & colleagues to help spread awareness about AI policy, compliance & regulation. Thank you!

See you next week.

All the best, Luiza