AI Backlash as a Regulatory Tool

The Grok "undressing" scandal | Edition #265

A few days ago, many people documented that Grok, xAI's AI chatbot available through the social media platform X (formerly Twitter), was being used to ‘undress’ women and girls.

A user would reply under a picture, tag Grok, and ask it to put the person in a bikini, often in a sexualized context or pose.

It became a viral trend, and everywhere you looked on X, especially when a woman posted, there were people asking Grok to undress her.

When I posted about the topic, tagging Elon Musk, X’s head of product, and the xAI team, some of the comments focused on free-speech arguments, such as:

“Sorry but using AI is no different than using a pencil or photoshop. No one requires consent to draw a picture of you.”

Others seemed to dismiss the lack of consent and the intimate character of the images:

“Bikinis are worn at public beaches, they are not ‘intimates’ and photos in bikinis don't qualify as sexually explicit.”

There were angry comments too. One user told me to “go off the internet,” and another asked Grok to put me in a burka.

Elon Musk himself did not seem to agree with the backlash and treated the ‘undressing’ trend as a free-speech issue.

He said that he was not aware of naked underage images generated by Grok, and that Grok does not spontaneously generate these images; it only obeys users’ requests.

Lastly, he said that Grok will refuse to produce anything illegal and that it obeys the laws of any given country or state. He also asked Grok to undress him.

But Musk is wrong about the legality of non-consensual AI undressing.

Six days ago, the Italian Data Protection Authority, which is usually the most proactive regulator on AI matters, issued a warning reminding everyone, including xAI developers, that undressing people without their consent can be illegal and may constitute a criminal offense.

The Italian authority wrote that people who use AI to process personal data (including voice or image data) must comply with the law, in particular the GDPR, including by first providing accurate and transparent information to the people affected.

Therefore, Grok is, in fact, facilitating something that is illegal in many parts of the world, including the EU.

The Italian authority also wrote that services like Grok make it extremely easy to misuse images and voices of third parties, without any legal basis, and that they are working with the Irish data protection authority and “reserve the right to take further action.”

Users misusing the AI system and infringing on data protection and criminal law are certainly to blame.

AI companies that fail to implement technical guardrails against such infringements should also be held accountable, especially when the infringing practice has become a viral trend and they still do not act.

As I wrote earlier, if there are technical mechanisms to prevent nudity, there are certainly similar mechanisms to prevent non-consensual undressing of girls, women, and everyone else.

-

In the absence of strong legal frameworks or enforcement mechanisms to compel companies to align their products with ethical and legal standards, collective AI backlash becomes an essential, legitimate tool to help shape and regulate the field of AI.

This became clear in the Grok “undressing” case.

After multiple NGOs, public offices, regulatory authorities, politicians, and the general public started engaging with the topic and raising awareness about the legal and ethical issues behind the AI “undressing” trend, xAI finally implemented technical measures to stop the practice.

I am not talking about the partial, inadequate reaction from a few days ago, which simply restricted the undressing feature to paying users.

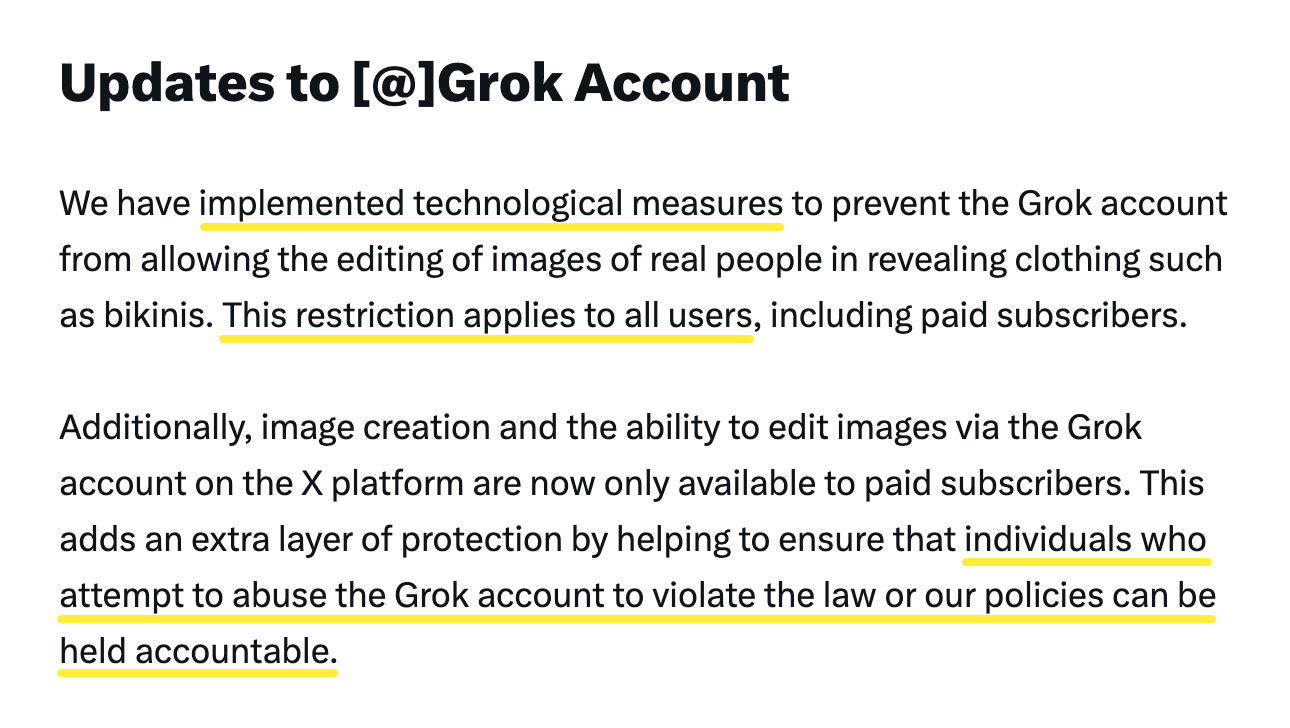

A few hours ago, X’s safety team announced that they had finally implemented technological measures to prevent the Grok account from editing images of people to put them in bikinis.

The team at X obviously did not reinvent the wheel. These technological measures had been available from the beginning, but the company chose not to make them a default feature.

The public also chose not to quietly wait for the company’s goodwill and publicly showed its discontent. People from all over the world and from all sorts of backgrounds created a powerful wave of backlash.

Why am I saying that the collective backlash is a regulatory tool?

First, the collective backlash raised global awareness and created a de facto standard regarding non-consensual undressing.

Everyone will now test AI systems for this, and if any existing system undresses real people, there will be an immediate negative reaction.

Second, authorities around the world saw the negative reaction, including from other authorities such as the Italian Data Protection Authority.

If similar practices start to happen again, they will be quicker and more confident in enforcing existing laws, even if the applicability of these laws to AI is still considered uncertain or a gray zone.

Lastly, lawmakers everywhere saw the backlash, and many joined in. These are the people thinking about and discussing the AI laws that will be enacted in the short, medium, or long term.

The public outcry and negative reactions have influenced them, and that influence might, sooner or later, materialize in concrete legal provisions.

So the next time you see something ethically or legally questionable in AI, or that simply feels wrong and against our sense of humanity, make sure to create awareness and let other people know.

Share your thoughts, engage, and express your humanity and how you would like to shape the field of AI. It might be the beginning of something bigger.

This free edition is sponsored by HoundDog.ai:

HoundDog.ai’s privacy code scanner helps you map data flows and catch privacy risks at development speed. No more surveys, spreadsheets, or relying on memory. Your privacy team will appreciate the time saved that would otherwise be spent manually gathering data flows. Best of all, it’s free. Just download the scanner and export the data map as a local Markdown report.

As the old internet dies, polluted by low-quality AI-generated content, you can always find raw, pioneering, human-made thought leadership here. Thank you for helping me make this newsletter a leading publication in the field!

